VLMEvalKit Save

Open-source evaluation toolkit of large vision-language models (LVLMs), support GPT-4v, Gemini, QwenVLPlus, 30+ HF models, 15+ benchmarks

VLMEvalKit (the python package name is vlmeval) is an open-source evaluation toolkit of large vision-language models (LVLMs). It enables one-command evaluation of LVLMs on various benchmarks, without the heavy workload of data preparation under multiple repositories. In VLMEvalKit, we adopt generation-based evaluation for all LVLMs, and provide the evaluation results obtained with both exact matching and LLM-based answer extraction.

🆕 News

- [2024-04-21] We have notices a minor issue of the MathVista evaluation script (which may negatively affect the performance). We have fixed it and updated the leaderboard accordingly

- [2024-04-17] We have supported InternVL-Chat-V1.5 🔥🔥🔥

- [2024-04-15] We have supported RealWorldQA, a multimodal benchmark for real-world spatial understanding 🔥🔥🔥

- [2024-04-09] We have refactored the inference interface of VLMs to a more unified version, check #140 for more details

- [2024-04-09] We have supported MMStar, a challenging vision-indispensable multimodal benchmark. The full evaluation results will be released soon 🔥🔥🔥

- [2024-04-08] We have supported InfoVQA and the test split of DocVQA. Great thanks to DLight 🔥🔥🔥

- [2024-03-28] Now you can use local OpenSource LLMs as the answer extractor or judge (see #132 for details). Great thanks to StarCycle 🔥🔥🔥

- [2024-03-22] We have supported LLaVA-NeXT 🔥🔥🔥

- [2024-03-21] We have supported DeepSeek-VL 🔥🔥🔥

-

[2024-03-20] We have supported users to use a

.envfile to manage all environment variables used in VLMEvalKit, see Quickstart for more details

📊 Datasets, Models, and Evaluation Results

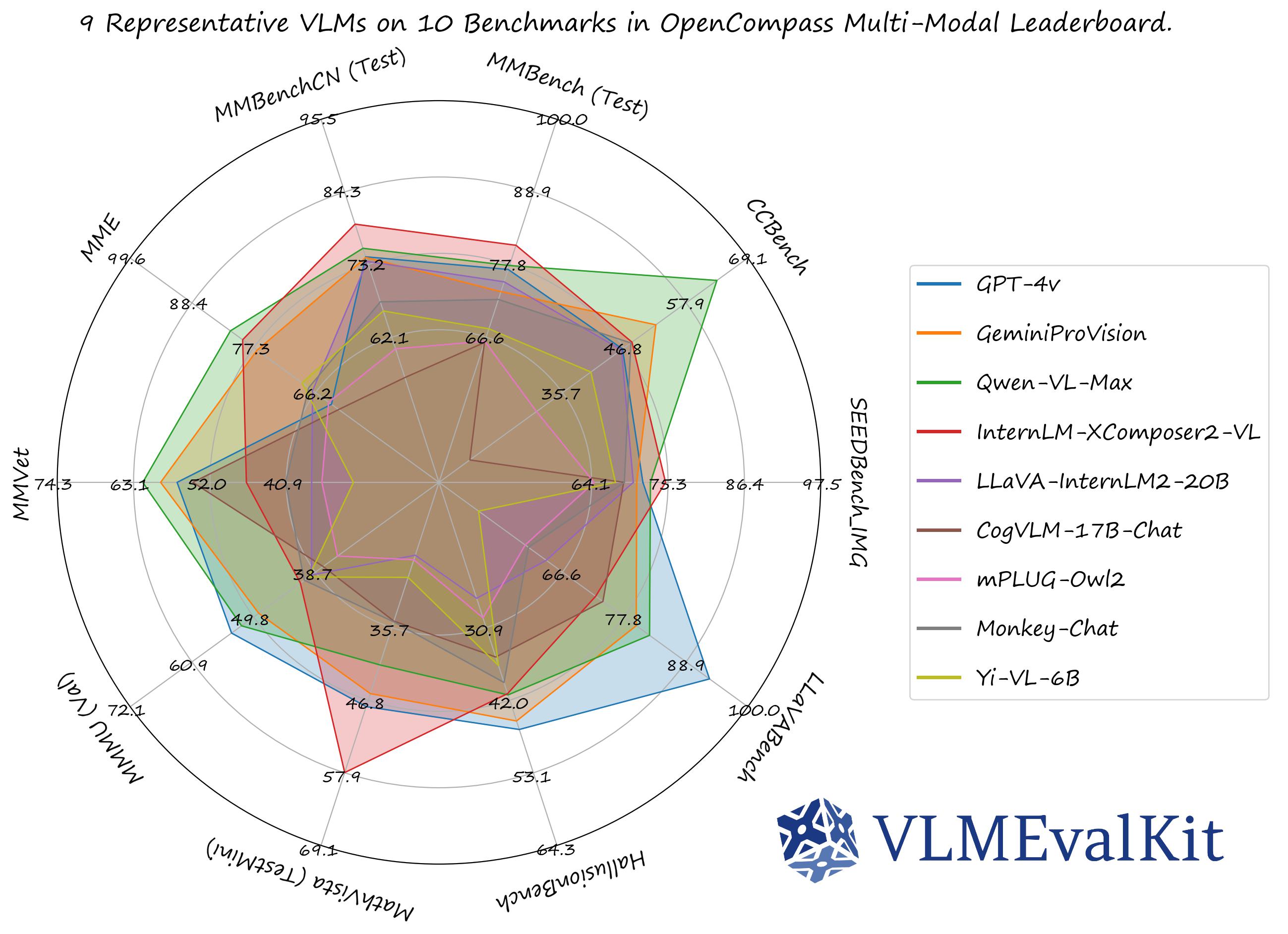

The performance numbers on our official multi-modal leaderboards can be downloaded from here!

OpenCompass Multi-Modal Leaderboard: Download All DETAILED Results.

Supported Dataset

| Dataset | Dataset Names (for run.py) | Task | Inference | Evaluation | Results |

|---|---|---|---|---|---|

| MMBench Series: MMBench, MMBench-CN, CCBench |

MMBench_DEV_[EN/CN] MMBench_TEST_[EN/CN] CCBench |

Multi-choice | ✅ | ✅ | MMBench Leaderboard |

| MMStar | MMStar | Multi-choice | ✅ | ✅ | Open_VLM_Leaderboard |

| MME | MME | Yes or No | ✅ | ✅ | Open_VLM_Leaderboard |

| SEEDBench_IMG | SEEDBench_IMG | Multi-choice | ✅ | ✅ | Open_VLM_Leaderboard |

| MM-Vet | MMVet | VQA | ✅ | ✅ | Open_VLM_Leaderboard |

| MMMU | MMMU_DEV_VAL/MMMU_TEST | Multi-choice | ✅ | ✅ | Open_VLM_Leaderboard |

| MathVista | MathVista_MINI | VQA | ✅ | ✅ | Open_VLM_Leaderboard |

| ScienceQA_IMG | ScienceQA_[VAL/TEST] | Multi-choice | ✅ | ✅ | Open_VLM_Leaderboard |

| COCO Caption | COCO_VAL | Caption | ✅ | ✅ | Open_VLM_Leaderboard |

| HallusionBench | HallusionBench | Yes or No | ✅ | ✅ | Open_VLM_Leaderboard |

| OCRVQA | OCRVQA_[TESTCORE/TEST] | VQA | ✅ | ✅ | TBD. |

| TextVQA | TextVQA_VAL | VQA | ✅ | ✅ | TBD. |

| ChartQA | ChartQA_TEST | VQA | ✅ | ✅ | TBD. |

| AI2D | AI2D_TEST | Multi-choice | ✅ | ✅ | Open_VLM_Leaderboard |

| LLaVABench | LLaVABench | VQA | ✅ | ✅ | Open_VLM_Leaderboard |

| DocVQA | DocVQA_[VAL/TEST] | VQA | ✅ | ✅ | TBD. |

| InfoVQA | InfoVQA_[VAL/TEST] | VQA | ✅ | ✅ | TBD. |

| OCRBench | OCRBench | VQA | ✅ | ✅ | Open_VLM_Leaderboard |

| Core-MM | CORE_MM | VQA | ✅ | N/A | |

| RealWorldQA | RealWorldQA | VQA | ✅ | ✅ | TBD. |

VLMEvalKit will use an judge LLM to extract answer from the output if you set the key, otherwise it uses the exact matching mode (find "Yes", "No", "A", "B", "C"... in the output strings). The exact matching can only be applied to the Yes-or-No tasks and the Multi-choice tasks.

Supported API Models

| GPT-4-Vision-Preview🎞️🚅 | GeminiProVision🎞️🚅 | QwenVLPlus🎞️🚅 | QwenVLMax🎞️🚅 | Step-1V🎞️🚅 |

|---|

Supported PyTorch / HF Models

🎞️: Support multiple images as inputs.

🚅: Model can be used without any additional configuration / operation.

Transformers Version Recommendation:

Note that some VLMs may not be able to run under certain transformer versions, we recommend the following settings to evaluate each VLM:

-

Please use

transformers==4.33.0for:Qwen series,Monkey series,InternLM-XComposer Series,mPLUG-Owl2,OpenFlamingo v2,IDEFICS series,VisualGLM,MMAlaya,SharedCaptioner,MiniGPT-4 series,InstructBLIP series,PandaGPT. -

Please use

transformers==4.37.0for:LLaVA series,ShareGPT4V series,TransCore-M,LLaVA (XTuner),CogVLM Series,EMU2 Series,Yi-VL Series,MiniCPM-V series,OmniLMM-12B,DeepSeek-VL series,InternVL series. -

Please use

transformers==4.39.0for:LLaVA-Next series. -

Please use

pip install git+https://github.com/huggingface/transformersfor:IDEFICS2.

# Demo

from vlmeval.config import supported_VLM

model = supported_VLM['idefics_9b_instruct']()

# Forward Single Image

ret = model.generate(['assets/apple.jpg', 'What is in this image?'])

print(ret) # The image features a red apple with a leaf on it.

# Forward Multiple Images

ret = model.generate(['assets/apple.jpg', 'assets/apple.jpg', 'How many apples are there in the provided images? '])

print(ret) # There are two apples in the provided images.

🏗️ QuickStart

See QuickStart for a quick start guide.

🛠️ Development Guide

To develop custom benchmarks, VLMs, or simply contribute other codes to VLMEvalKit, please refer to Development_Guide.

🎯 The Goal of VLMEvalKit

The codebase is designed to:

- Provide an easy-to-use, opensource evaluation toolkit to make it convenient for researchers & developers to evaluate existing LVLMs and make evaluation results easy to reproduce.

- Make it easy for VLM developers to evaluate their own models. To evaluate the VLM on multiple supported benchmarks, one just need to implement a single

generatefunction, all other workloads (data downloading, data preprocessing, prediction inference, metric calculation) are handled by the codebase.

The codebase is not designed to:

- Reproduce the exact accuracy number reported in the original papers of all 3rd party benchmarks. The reason can be two-fold:

- VLMEvalKit uses generation-based evaluation for all VLMs (and optionally with LLM-based answer extraction). Meanwhile, some benchmarks may use different approaches (SEEDBench uses PPL-based evaluation, eg.). For those benchmarks, we compare both scores in the corresponding result. We encourage developers to support other evaluation paradigms in the codebase.

- By default, we use the same prompt template for all VLMs to evaluate on a benchmark. Meanwhile, some VLMs may have their specific prompt templates (some may not covered by the codebase at this time). We encourage VLM developers to implement their own prompt template in VLMEvalKit, if that is not covered currently. That will help to improve the reproducibility.

🖊️ Citation

If you use VLMEvalKit in your research or wish to refer to the published OpenSource evaluation results, please use the following BibTeX entry and the BibTex entry corresponding to the specific VLM / benchmark you used.

@misc{2023opencompass,

title={OpenCompass: A Universal Evaluation Platform for Foundation Models},

author={OpenCompass Contributors},

howpublished = {\url{https://github.com/open-compass/opencompass}},

year={2023}

}

💻 Other Projects in OpenCompass

- opencompass: An LLM evaluation platform, supporting a wide range of models (LLaMA, LLaMa2, ChatGLM2, ChatGPT, Claude, etc) over 50+ datasets.

- MMBench: Official Repo of "MMBench: Is Your Multi-modal Model an All-around Player?"

- BotChat: Evaluating LLMs' multi-round chatting capability.

- LawBench: Benchmarking Legal Knowledge of Large Language Models.