OpenShift Guide. Learn about the Red Hat OpenShift Container Platform, Data Science, Code Ready Containers, Podman, Buildah, and Kubernetes.

OpenShift Guide

A guide for getting started with OpenShift including the Tools and Applications that will make you a better and more efficient engineer with OpenShift.

Note: You can easily convert this markdown file to a PDF in VSCode using this handy extension Markdown PDF.

Getting Started with OpenShift

What is OpenShift?

Red Hat OpenShift is an open source container application platform, built on the Kubernetes container orchestrator, for enterprise app development and deployment in the hybrid cloud. OpenShift can manage applications written in different languages and frameworks, such as Ruby, Node.js, Java, Perl, and Python.

Red Hat OpenShift Development Architecture. Source: Red Hat

Developer Resources

Certifications & Courses

- Kubernetes and OpenShift: Community, Standards and Certifications

- Introduction to Containers w/ Docker, Kubernetes & OpenShift course | Coursera

- Fundamentals of Containers, Kubernetes, and Red Hat OpenShift | edX

Books

- OpenShift for Developers, Second Edition by Joshua Wood & Brian Tannous

- Introducing Istio Service Mesh for Microservices by Burr Sutter and Christian Posta

- DevOps with OpenShift by Stefano Picozzi, Mike Hepburn & Noel O'Connor

- Migrating to Microservice Databases: From Relational Monolith to Distributed Data by Edson Yanaga

- OpenShift 3 for Developers: A Guide for Impatient Beginners by Grant Shipley, Graham Dumpleton

- Using the IBM Block Storage CSI Driver in a Red Hat OpenShift Environment

- Storage Multi-tenancy for Red Hat OpenShift Container Platform with IBM Storage

- An Implementation of Red Hat OpenShift Network Isolation Using Multiple Ingress Controllers

- IBM Spectrum Scale as a Persistent Storage for Red Hat OpenShift on IBM Z Quick Installation Guide

- Innovate at Scale and Deploy with Confidence in a Hybrid Cloud Environment

Source-to-Image (S2I) images for programming/building your Apps

Java

Python

Golang

Ruby

.NET Core

- [.NET Core - Source-to-Image (S2I) Builder Images for OpenShift](https://docs.openshift.com/online/pro/using_images/s2i_images/dot_net_core.html)

Node.js

Perl

PHP

Builder Images for setting up Databases

MySQL

PostgreSQL

MongoDB

MariaDB

Redis

Setting up on Microsoft Azure

Microsoft Azure Red Hat OpenShift is a fully managed offering of OpenShift running in Azure. This service is jointly managed and supported by Microsoft and Red Hat.

Requirements:

- Azure CLI version 2.6.0 or later.

- 56 vCPUs, so you must increase the account limit. By default, each cluster creates the following instances:

  - One bootstrap machine, which is removed after installation

  - Three control plane machines

  - Three compute machines

  Because the bootstrap, control plane, and worker machines use Standard_DS4_v2 virtual machines, which use 8 vCPUs, a default cluster requires 56 vCPUs. The bootstrap node VM is used only during installation. To deploy more worker nodes, enable autoscaling, deploy large workloads, or use a different instance type, you must further increase the vCPU limit for your account to ensure that your cluster can deploy the machines that you require.

- 1 VNet. Each default cluster requires one Virtual Network (VNet), which contains two subnets.

- 7 Network interfaces. Each default cluster requires seven network interfaces. If you create more machines or your deployed workloads create load balancers, your cluster uses more network interfaces.

- 2 Network security groups. Each cluster creates network security groups for each subnet in the VNet. The default cluster creates network security groups for the control plane and for the compute node subnets:

  - controlplane: Allows the control plane machines to be reached on port 6443 from anywhere.

  - node: Allows worker nodes to be reached from the internet on ports 80 and 443.

- 3 Network load balancers. Each cluster creates the following load balancers:

  - default: Public IP address that load balances requests to ports 80 and 443 across worker machines

  - internal: Private IP address that load balances requests to ports 6443 and 22623 across control plane machines

  - external: Public IP address that load balances requests to port 6443 across control plane machines

  Note: If your applications create more Kubernetes LoadBalancer service objects, your cluster uses more load balancers.

- 2 Public IP addresses. The public load balancer uses a public IP address. The bootstrap machine also uses a public IP address so that you can SSH into the machine to troubleshoot issues during installation. The IP address for the bootstrap node is used only during installation.

- 7 Private IP addresses. The internal load balancer, each of the three control plane machines, and each of the three worker machines each use a private IP address.
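
The 56 vCPU figure follows directly from the machine counts and VM size listed above. As a quick sanity check before requesting a quota increase, the arithmetic can be sketched in shell. The variable names are illustrative, and the numbers should be adjusted if you plan a different instance type or more workers.

```shell
# Default machine counts and VM size taken from the requirements above.
bootstrap=1        # removed after installation
control_plane=3
compute=3
vcpus_per_vm=8     # Standard_DS4_v2

total_machines=$((bootstrap + control_plane + compute))
total_vcpus=$((total_machines * vcpus_per_vm))

echo "machines: ${total_machines}, vCPUs required: ${total_vcpus}"
```

With the defaults this reports 7 machines and 56 vCPUs; each additional worker of the same size adds another 8 to the quota you need.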

Ingress traffic to an Azure Red Hat OpenShift cluster. Image Credit: Red Hat

Egress traffic from an Azure Red Hat OpenShift cluster and connection to the cluster. Image Credit: Red Hat

Register the Resource Providers

If you have multiple Azure subscriptions, specify the relevant subscription ID:

az account set --subscription <SUBSCRIPTION ID>

Register the Microsoft.RedHatOpenShift resource provider:

az provider register -n Microsoft.RedHatOpenShift --wait

Register the Microsoft.Compute resource provider:

az provider register -n Microsoft.Compute --wait

Register the Microsoft.Storage resource provider:

az provider register -n Microsoft.Storage --wait

Register the Microsoft.Authorization resource provider:

az provider register -n Microsoft.Authorization --wait
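
The remaining commands in this section reference the shell variables $RESOURCEGROUP, $LOCATION, and $CLUSTER, which this guide does not define. A minimal sketch of setting them first; the values below are placeholders chosen for illustration, not required names.

```shell
# Placeholder values: substitute your own region and names.
export LOCATION=eastus          # Azure region for the cluster
export RESOURCEGROUP=aro-rg     # resource group that will hold the cluster
export CLUSTER=aro-cluster      # name of the ARO cluster

echo "Using resource group $RESOURCEGROUP in $LOCATION for cluster $CLUSTER"
```

Exporting the variables keeps them available to every later command run from the same shell session.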

Create a Resource Group:

az group create \
  --name $RESOURCEGROUP \
  --location $LOCATION

Creating a Virtual Network:

az network vnet create \
  --resource-group $RESOURCEGROUP \
  --name aro-vnet \
  --address-prefixes 10.0.0.0/22

Adding empty subnet for the master nodes.

az network vnet subnet create \
  --resource-group $RESOURCEGROUP \
  --vnet-name aro-vnet \
  --name master-subnet \
  --address-prefixes 10.0.0.0/23 \
  --service-endpoints Microsoft.ContainerRegistry

Adding empty subnet for the worker nodes.

az network vnet subnet create \
  --resource-group $RESOURCEGROUP \
  --vnet-name aro-vnet \
  --name worker-subnet \
  --address-prefixes 10.0.2.0/23 \
  --service-endpoints Microsoft.ContainerRegistry
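
Both /23 subnets have to fall inside the 10.0.0.0/22 address space of the virtual network, which is easy to get wrong when choosing different ranges. The sketch below checks containment with plain POSIX shell arithmetic. The helper function names are hypothetical, and the check assumes each CIDR is written with its network address (for example 10.0.2.0/23 rather than 10.0.2.7/23).

```shell
# Convert a dotted-quad IPv4 address to an integer.
# The function body runs in a subshell, so the IFS change does not leak.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# First address of a CIDR block, as an integer.
cidr_first() { ip_to_int "${1%/*}"; }

# Last address of a CIDR block, as an integer.
cidr_last() (
  first=$(cidr_first "$1")
  echo $(( first + (1 << (32 - ${1#*/})) - 1 ))
)

# Succeed if CIDR $2 lies entirely inside CIDR $1.
cidr_contains() {
  [ "$(cidr_first "$2")" -ge "$(cidr_first "$1")" ] &&
    [ "$(cidr_last "$2")" -le "$(cidr_last "$1")" ]
}

vnet=10.0.0.0/22
for subnet in 10.0.0.0/23 10.0.2.0/23; do
  if cidr_contains "$vnet" "$subnet"; then
    echo "$subnet fits inside $vnet"
  else
    echo "$subnet is outside $vnet"
  fi
done
```

Both subnets used in this guide pass the check: a /22 holds 1024 addresses and the two /23 ranges split it into two halves of 512 addresses each.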

Disable subnet private endpoint policies on the master subnet.

az network vnet subnet update \
  --name master-subnet \
  --resource-group $RESOURCEGROUP \
  --vnet-name aro-vnet \
  --disable-private-link-service-network-policies true

Creating a Cluster

az aro create \
  --resource-group $RESOURCEGROUP \
  --name $CLUSTER \
  --vnet aro-vnet \
  --master-subnet master-subnet \
  --worker-subnet worker-subnet
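
Once az aro create completes, the usual next step is to look up how to log in. As a sketch, the helper below (a hypothetical function name) retrieves the kubeadmin credentials with az aro list-credentials and the web console URL with az aro show. It assumes the $CLUSTER and $RESOURCEGROUP variables used above are set and that you are logged in with the Azure CLI. Wrapping the calls in a function means nothing runs until you invoke it.

```shell
# Hypothetical helper: print login details for an existing ARO cluster.
aro_login_info() {
  # kubeadmin username and password for the cluster
  az aro list-credentials \
    --name "$CLUSTER" \
    --resource-group "$RESOURCEGROUP"

  # URL of the OpenShift web console
  az aro show \
    --name "$CLUSTER" \
    --resource-group "$RESOURCEGROUP" \
    --query "consoleProfile.url" -o tsv
}
```

Run aro_login_info after the cluster has finished provisioning, then open the printed URL and sign in as kubeadmin.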

Setting up on Google Cloud (GCP)

Minimum Requirements:

Master Nodes:

- Minimum 4 vCPU (additional are strongly recommended).

- Minimum 16 GB RAM (additional memory is strongly recommended, especially if etcd is co-located on masters).

- Minimum 40 GB hard disk space for the file system.

Worker Nodes:

- 1 vCPU.

- Minimum 8 GB RAM.

- Minimum 15 GB hard disk space for the file system.

- If you don’t have a GCP account already, sign up for Google Cloud Platform, set up billing, and activate APIs.

- Set up a service account. A service account is a way to interact with your GCP resources by using a different identity than your primary login and is generally intended for server-to-server interaction. From the GCP Navigation Menu, click on "Permissions."

- Click on "Service accounts."

- Click on "Create service account," which will prompt you to enter a service account name. Provide a name for your project and click on "Furnish a new private key." The default "JSON" Key type should be left selected.

- Once you click "Create," a service account “.json” file will be downloaded to your browser’s downloads location.

Important: Like any credential, this represents an access mechanism to authenticate and use resources in your GCP account. Never place this file in a publicly accessible source repo (Public GitHub or GitLab).

You will use the JSON credential via a Kubernetes secret deployed to your OpenShift cluster. To do so, first perform a base64 encoding of your JSON credential file:

base64 -i ~/path/to/downloads/credentials.json

Keep the output (a very long string) ready for use in the next step, where you’ll replace ‘BASE64_CREDENTIAL_STRING’ in the secret example (below) with the output just captured from base64 encoding.

- Note: base64 is encoded (not encrypted) and can be readily reversed, so this file (with the base64 string) is just as confidential as the credential file above.
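
To make the note above concrete, here is a small round trip that encodes a file and then recovers it with a single command. It is a sketch only: the JSON content is a made-up stand-in for the real key, and the decode flag is -d on GNU coreutils and recent macOS, while some older macOS releases use -D instead.

```shell
# Stand-in for the downloaded credential file (contents are made up).
cred_file=$(mktemp)
printf '%s\n' '{"type": "service_account", "project_id": "example-project"}' > "$cred_file"

# Encode, stripping line wraps so the value fits on a single YAML line.
encoded=$(base64 < "$cred_file" | tr -d '\n')

# Anyone holding the encoded string can recover the original in one step.
decoded=$(printf '%s' "$encoded" | base64 -d)

if [ "$decoded" = "$(cat "$cred_file")" ]; then
  echo "decoded output matches the original credential file"
fi
rm -f "$cred_file"
```

Because decoding needs no key, treat the encoded string and any manifest that contains it with the same care as the original file.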

Create the Kubernetes secret inside your OpenShift Cluster. A secret is the proper place to make sensitive information available to pods running in your cluster (like passwords or the credentials downloaded in the previous step).

apiVersion: v1
kind: Secret
metadata:
  name: google-services-secret
type: Opaque
data:
  google-services.json: BASE64_CREDENTIAL_STRING

Note: Replace ‘BASE64_CREDENTIAL_STRING’ with the base64 output from the prior step.
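
Rather than pasting the encoded string by hand, the manifest can be generated from the credential file. A minimal sketch, assuming the path to the downloaded key is passed in a CRED_FILE environment variable (a name chosen here for illustration); when it is unset, the script falls back to a throwaway sample file so it can be tried safely. The output file name google-secret.yaml is the one the deploy step expects.

```shell
# Path to the downloaded key; falls back to a throwaway sample for demonstration.
cred_file=${CRED_FILE:-}
if [ -z "$cred_file" ]; then
  cred_file=$(mktemp)
  printf '%s\n' '{"type": "service_account"}' > "$cred_file"
fi

# Single-line base64 string, as needed for the value under data:.
encoded=$(base64 < "$cred_file" | tr -d '\n')

# Write the Secret manifest with the encoded credential filled in.
cat > google-secret.yaml <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: google-services-secret
type: Opaque
data:
  google-services.json: ${encoded}
EOF

# Remove the sample file if one was created above.
[ -n "${CRED_FILE:-}" ] || rm -f "$cred_file"
echo "wrote google-secret.yaml"
```

As an alternative that skips the manifest entirely, oc create secret generic google-services-secret --from-file=google-services.json=<path-to-key> performs the encoding for you.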

Deploy the secret to the cluster:

oc create -f google-secret.yaml

Setting up Red Hat OpenShift Data Science

Red Hat® OpenShift® Data Science is a fully managed cloud service for data scientists and developers of intelligent applications on Red Hat OpenShift Dedicated or Red Hat OpenShift Service on AWS. It provides a fully supported sandbox in which to rapidly develop, train, and test machine learning (ML) models in the public cloud before deploying in production.

Installing Red Hat OpenShift Data Science

Opening Red Hat OpenShift Data Science

JupyterHub on Red Hat OpenShift Data Science

Exploring Tools on Red Hat OpenShift Data Science

Setting up JupyterHub Notebook Server

Creating a new Python 3 Notebook

Python 3 JupyterHub Notebook

JupyterHub Notebook Sample Demo

OpenShift Project Models

How OpenShift integrates with JupyterHub using Python - Source-to-Image (S2I)

Red Hat CodeReady Containers (CRC)

Red Hat CodeReady Containers (CRC) is a tool that provides a minimal, preconfigured OpenShift 4 cluster on a laptop or desktop machine for development and testing purposes. CRC is delivered as a platform inside of the VM.

- odo (OpenShift Do), a CLI tool for developers, to manage application components on the OpenShift Container Platform.

System Requirements:

- OS: CentOS Stream 8/RHEL 8/Fedora or later (the latest 2 releases).

- Download: pull-secret

- Login: Red Hat account

Other physical requirements include:

- Four virtual CPUs (4 vCPUs)

- 10GB of memory (RAM)

- 40GB of storage space

To set up CodeReady Containers, start by creating the crc directory, and then download and extract the crc package:

mkdir /home/<user>/crc

wget https://mirror.openshift.com/pub/openshift-v4/clients/crc/latest/crc-linux-amd64.tar.xz

tar -xvf crc-linux-amd64.tar.xz

Next, move the files to the crc directory and remove the downloaded package(s):

mv /home/<user>/crc-linux-<version>-amd64/* /home/<user>/crc

rm /home/<user>/crc-linux-amd64.tar.xz

rm -r /home/<user>/crc-linux-<version>-amd64

Change to the crc directory, make crc executable, and export your PATH like this:

cd /home/<user>/crc

chmod +x crc

export PATH=$PATH:/home/<user>/crc

Set up and start the cluster:

crc setup

crc start -p /<path-to-the-pull-secret-file>/pull-secret.txt

Set up the OC environment:

crc oc-env

eval $(crc oc-env)

Log in as the developer user:

oc login -u developer -p developer https://api.crc.testing:6443

oc logout

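And then, log in as the platform’s admin. Replace password with the kubeadmin password that crc start prints (crc console --credentials shows it again):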

oc login -u kubeadmin -p password https://api.crc.testing:6443

oc logout

Interacting with the cluster. The most common ways include:

Starting the graphical web console:

crc console

Display the cluster’s status:

crc status

Shut down the OpenShift cluster:

crc stop

Delete or kill the OpenShift cluster:

crc delete

Setting up Podman

Podman (the POD manager) is an open source tool for developing, managing, and running containers on your Linux systems. It also manages the entire container ecosystem using the libpod library. Podman’s daemonless and inclusive architecture makes it a more secure and accessible option for container management, and its accompanying tools and features, such as Buildah and Skopeo, allow developers to customize their container environments to best suit their needs.

- Libpod provides a library for applications looking to use the Container Pod concept made popular by Kubernetes.

Installing Podman:

- Fedora: sudo dnf install podman

- CentOS Stream: sudo dnf install podman

- Ubuntu 20.04 or later: sudo apt install podman

- Debian 11 (bullseye) or later, or sid/unstable: sudo apt install podman

- openSUSE: sudo zypper install podman

- ArchLinux: sudo pacman -S podman (and then tweaks for rootless)

Podman Desktop is a tool to manage Podman and other container engines from a single UI and tray local environment.

Podman Desktop

Podman

Setting up Buildah

Buildah is an open source, Linux-based tool that can build Docker- and Kubernetes-compatible images, and is easy to incorporate into scripts and build pipelines. In addition, Buildah overlaps in functionality with Podman, Skopeo, and CRI-O.

Installing Buildah:

- Fedora: sudo dnf -y install buildah

- CentOS Stream: sudo dnf -y install buildah

- Ubuntu 20.04 or later: sudo apt install buildah

- Debian 11 (bullseye) or later, or sid/unstable: sudo apt install -y buildah

- openSUSE: sudo zypper install buildah

- ArchLinux: sudo pacman -S buildah (and then tweaks for rootless)

Buildah

Setting up Skopeo

Skopeo is a tool for manipulating, inspecting, signing, and transferring container images and image repositories on Linux systems, Windows, and macOS. In addition, Skopeo overlaps in functionality with Podman, Buildah, and CRI-O.

Installing Skopeo:

- Fedora: sudo dnf install skopeo

- CentOS Stream: sudo dnf -y install skopeo

- Ubuntu 20.04 or later: sudo apt install skopeo

- Debian 11 (bullseye) or later, or sid/unstable: sudo apt install skopeo

- openSUSE: sudo zypper install skopeo

- Alpine Linux: sudo apk add skopeo

- ArchLinux: sudo pacman -S skopeo (and then tweaks for rootless)

- Nix/NixOS: nix-env -i skopeo

- macOS: brew install skopeo

Skopeo Usage:

$ skopeo --help
Various operations with container images and container image registries

Usage:
  skopeo [command]

Available Commands:
  copy              Copy an IMAGE-NAME from one location to another
  delete            Delete image IMAGE-NAME
  help              Help about any command
  inspect           Inspect image IMAGE-NAME
  list-tags         List tags in the transport/repository specified by the REPOSITORY-NAME
  login             Login to a container registry
  logout            Logout of a container registry
  manifest-digest   Compute a manifest digest of a file
  standalone-sign   Create a signature using local files
  standalone-verify Verify a signature using local files
  sync              Synchronize one or more images from one location to another
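
As a sketch of how a few of the commands listed above are typically used, the helpers below wrap skopeo inspect, skopeo list-tags, and skopeo copy. The function names are illustrative assumptions, not part of Skopeo. The docker:// prefix is Skopeo's transport for registries that speak the Docker registry API. The functions only run Skopeo when called.

```shell
# Show metadata for a remote image without pulling it.
image_info() {
  skopeo inspect "docker://$1"
}

# List the tags available in a remote repository.
image_tags() {
  skopeo list-tags "docker://$1"
}

# Copy an image from one registry to another without a local container engine.
mirror_image() {
  skopeo copy "docker://$1" "docker://$2"
}
```

For example, image_info registry.fedoraproject.org/fedora:latest prints the image's digest, layers, and labels as JSON. For mirror_image, the second argument is a destination you control, and you may need skopeo login for that registry first.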

File systems

CIFS (Common Internet File System) is a network filesystem protocol used for providing shared access to files and printers between machines on the network. The client application can read, write, edit and even remove files on the remote server.

Network File System (NFS) is a protocol that provides a file sharing solution for enterprises that have heterogeneous environments that include both Windows and non-Windows computers. It is most notable for its host-based authentication, is simple to set up, and makes it possible to connect to another service using only an IP address.

Additional benefits of NFS file share include:

- NFS provides central management.

- NFS allows a user to log into any server and have access to their files transparently.

- It’s been around for a long time, so it comes with familiarity in terms of applications.

- No manual refresh is needed for new files.

- It can be secured with firewalls and Kerberos.

GlusterFS is a free and open source scalable network filesystem. Using common off-the-shelf hardware, you can create large, distributed storage solutions for media streaming, data analysis, and other data- and bandwidth-intensive tasks.

Ceph is a software-defined storage solution designed to address the object, block, and file storage needs of data centers adopting open source as the new norm for high-growth block storage, object stores and data lakes. Ceph provides enterprise scalable storage while keeping CAPEX and OPEX costs in line with underlying bulk commodity disk prices.

Hadoop Distributed File System (HDFS) is a distributed file system that handles large data sets running on commodity hardware. It is used to scale a single Apache Hadoop cluster to hundreds (and even thousands) of nodes. HDFS is one of the major components of Apache Hadoop, the others being MapReduce and YARN.

ZFS is an enterprise-ready open source file system and volume manager with unprecedented flexibility and an uncompromising commitment to data integrity.

OpenZFS is an open-source storage platform. It includes the functionality of both a traditional file system and a volume manager. It has many advanced features including:

- Protection against data corruption.

- Integrity checking for both data and metadata.

- Continuous integrity verification and automatic "self-healing" repair.

Btrfs is a modern copy-on-write (CoW) filesystem for Linux aimed at implementing advanced features while also focusing on fault tolerance, repair, and easy administration. Its main features and benefits are:

- Snapshots that do not make a full copy of files

- RAID - support for software-based RAID 0, RAID 1, RAID 10

- Self-healing - checksums for data and metadata, automatic detection of silent data corruptions

Bcachefs is an advanced new filesystem for Linux, with an emphasis on reliability and robustness and the complete set of features one would expect from a modern filesystem. Scalability has been tested to 50+ TB and is expected to eventually scale far higher.

Ext4 is a journaling file system for Linux, developed as the successor to ext3.

Squashfs is a compressed read-only filesystem for Linux. It uses zlib, lz4, lzo, or xz compression to compress files, inodes and directories. Inodes in the system are very small and all blocks are packed to minimize data overhead.

NTFS (New Technology File System) is the primary file system for recent versions of Windows and Windows Server. It provides a full set of features including security descriptors, encryption, disk quotas, and rich metadata, and can be used with Cluster Shared Volumes (CSV) to provide continuously available volumes that can be accessed simultaneously from multiple nodes of a failover cluster.

OpenShift Tools

OpenShift CLI (oc) is a command line interface tool that extends the capabilities of kubectl with many convenience functions that make interacting with both Kubernetes and OpenShift clusters easier.

OpenShift Serverless CLI (kn) is a command line interface tool for deploying serverless applications and for accessing and controlling them from the command line.

OpenShift Pipelines CLI (tkn) is a command line interface tool for using Tekton to provide cloud-native CI/CD functionality within the cluster. The tkn command is used to manage the functionality from the CLI.

Red Hat CodeReady Containers is an option to host a local, all-in-one OpenShift 4 cluster on your workstation. CodeReady Containers replaces minishift, used to run OpenShift 3 clusters on your workstation, as a quick and easy method of creating test and development clusters.

Helm CLI is a command line interface tool for deploying and managing Kubernetes applications to your clusters.

OpenShift Hive is an operator which runs as a service on top of Kubernetes/OpenShift. The Hive service can be used to provision and perform initial configuration of OpenShift 4 clusters.

OpenShift Service Mesh is a tool that provides a layer on top of OpenShift for securely connecting services in a consistent manner. This provides centralized control, security and observability across your services without having to modify your applications.

Azure Red Hat OpenShift is a flexible, self-service deployment of fully managed OpenShift clusters. Maintain regulatory compliance and focus on your application development, while your master, infrastructure, and application nodes are patched, updated, and monitored by both Microsoft and Red Hat.

Red Hat OpenShift Service on AWS (ROSA) is a fully-managed and jointly supported Red Hat OpenShift offering that combines the power of Red Hat OpenShift, the industry's most comprehensive enterprise Kubernetes platform, and the AWS public cloud.

Red Hat OpenShift on Google Cloud is a fully-managed and jointly supported Red Hat OpenShift offering that enables you to deploy stateful and stateless apps with nearly any language, framework, database, or service. It gives you either a hosted environment entirely on Google Cloud, or a hybrid environment where you maintain part of your workload on-premises or in a private hosting environment and migrate the rest to Google Cloud.

Red Hat® Quay is a secure, private container registry that builds, analyzes and distributes container images. It provides a high level of automation and customization.

Kata Operator is an operator to perform lifecycle management (install/upgrade/uninstall) of Kata Runtime on OpenShift as well as Kubernetes clusters.

Open Container Initiative is an open governance structure for the express purpose of creating open industry standards around container formats and runtimes.

Buildah is a command line tool to build Open Container Initiative (OCI) images. It can be used with Docker, Podman, Kubernetes.

Podman is a daemonless, open source, Linux native tool designed to make it easy to find, run, build, share and deploy applications using Open Containers Initiative (OCI) Containers and Container Images. Podman provides a command line interface (CLI) familiar to anyone who has used the Docker Container Engine.

Containerd is a daemon that manages the complete container lifecycle of its host system, from image transfer and storage to container execution and supervision to low-level storage to network attachments and beyond. It is available for Linux and Windows.

OKD is a community distribution of Kubernetes optimized for continuous application development and multi-tenant deployment. OKD adds developer and operations-centric tools on top of Kubernetes to enable rapid application development, easy deployment and scaling, and long-term lifecycle maintenance for small and large teams.

OpenShift DevOps Tools Integration

Ansible is a simple IT automation engine that automates cloud provisioning, configuration management, application deployment, intra-service orchestration, and many other IT needs. It uses a very simple language (YAML, in the form of Ansible Playbooks) that allows you to describe your automation jobs in a way that approaches plain English. Ansible works on Linux (Red Hat Enterprise Linux (RHEL) and Ubuntu) and Microsoft Windows.

Ansible cmdb is a tool that takes the output of Ansible’s fact gathering and converts it into a static HTML overview page containing system configuration information.

Ansible Inventory Grapher visually displays inventory inheritance hierarchies and at what level a variable is defined in inventory.

Ansible Playbook Grapher is a command line tool to create a graph representing your Ansible playbook tasks and roles.

Ansible Shell is an interactive shell for Ansible with built-in tab completion for all the modules.

Ansible Silo is a self-contained Ansible environment by Docker.

Ansigenome is a command line tool designed to help you manage your Ansible roles.

ARA records Ansible playbook runs and makes the recorded data available and intuitive for users and systems by integrating with Ansible as a callback plugin.

OAuth2 for Go is a package that contains a client implementation for the OAuth 2.0 spec.

kube-bench is a tool that checks whether Kubernetes is deployed securely by running the checks documented in the CIS Kubernetes Benchmark.

GitHub provides hosting for software development version control using Git. It offers all of the distributed version control and source code management functionality of Git as well as adding its own features. It provides access control and several collaboration features such as bug tracking, feature requests, task management, and wikis for every project.

GitHub Codespaces is an integrated development environment (IDE) on GitHub that allows developers to develop entirely in the cloud using Visual Studio and Visual Studio Code.

GitHub Actions lets you automate, customize, and execute your software development workflows right in your repository. You can discover, create, and share actions to perform any job you'd like, including CI/CD, and combine actions in a completely customized workflow. With GitHub Actions for Azure, you can create workflows in your repository to build, test, package, release, and deploy to Azure.

GitLab is a web-based DevOps lifecycle tool that provides a Git-repository manager providing wiki, issue-tracking and CI/CD pipeline features, using an open-source license, developed by GitLab Inc.

Jenkins is a free and open source automation server. Jenkins helps to automate the non-human part of the software development process, with continuous integration and facilitating technical aspects of continuous delivery.

Bitbucket is a web-based version control repository hosting service owned by Atlassian, for source code and development projects that use either Mercurial or Git revision control systems. Bitbucket offers both commercial plans and free accounts, and free accounts include an unlimited number of private repositories. Bitbucket integrates with other Atlassian software like Jira, HipChat, Confluence and Bamboo.

Bamboo is a continuous integration (CI) server that can be used to automate the release management for a software application, creating a continuous delivery pipeline.

Codecov is the leading, dedicated code coverage solution. It provides highly integrated tools to group, merge, archive and compare coverage reports. Whether your team is comparing changes in a pull request or reviewing a single commit, Codecov will improve the code review workflow and quality.

Drone is a Continuous Delivery system built on container technology. Drone uses a simple YAML configuration file, a superset of docker-compose, to define and execute Pipelines inside Docker containers.

Travis CI is a hosted continuous integration service used to build and test software projects hosted at GitHub.

Circle CI is a continuous integration and continuous delivery platform that helps software teams work smarter, faster.

Zuul-CI is a program that drives continuous integration, delivery, and deployment systems with a focus on project gating and interrelated projects. It uses the same Ansible playbooks to deploy your system and run your tests.

Artifactory is a universal artifact repository manager developed by JFrog. It supports all major package formats and offers enterprise-ready security, clustering, high availability (HA), a Docker registry, multi-site replication, and scalability.

Azure DevOps is a set of services for teams to share code, track work, and ship software. Its CLIs build, deploy, diagnose, and manage multi-platform, scalable apps and services; Azure Pipelines continuously builds, tests, and deploys to any platform and cloud; Azure Lab Services sets up labs for classrooms, trials, development and testing, and other scenarios.

Team City is a build management and continuous integration server from JetBrains.

Shippable simplifies DevOps and makes it systematic with an Assembly Line platform that is heterogeneous, flexible, and provides complete visibility across your DevOps workflows.

Spinnaker is an open source, multi-cloud continuous delivery platform for releasing software changes with high velocity and confidence.

AWS CodeBuild is a fully managed continuous integration service that compiles source code, runs tests, and produces software packages that are ready to deploy. With CodeBuild, you don't need to provision, manage, and scale your own build servers.

Selenium is a free (open source) automated testing suite for web applications across different browsers and platforms.

Cucumber is a tool based on Behavior Driven Development (BDD) framework which is used to write acceptance tests for the web application. It allows automation of functional validation in easily readable and understandable format (like plain English) to Business Analysts, Developers, and Testers.

JUnit is a unit testing framework for the Java programming language.

Mocha is a JavaScript test framework for Node.js programs, featuring browser support, asynchronous testing, test coverage reports, and use of any assertion library.

Karma is a simple tool that allows you to execute JavaScript code in multiple real browsers.

Jasmine is an open source testing framework for JavaScript. It aims to run on any JavaScript-enabled platform, to not intrude on the application nor the IDE, and to have easy-to-read syntax.

Maven is a build automation tool used primarily for Java projects. Maven can also be used to build and manage projects written in C#, Ruby, Scala, and other languages. The Maven project is hosted by the Apache Software Foundation.

Gradle is an open-source build-automation system that builds upon the concepts of Apache Ant and Apache Maven and introduces a Groovy-based domain-specific language instead of the XML form used by Apache Maven for declaring the project configuration.

Chef, through its Effortless Infrastructure Suite, offers visibility into security and compliance status across all infrastructure and makes it easy to detect and correct issues long before they reach production.

Puppet is an open source tool that makes continuous integration and delivery of your software on traditional or containerized infrastructure easy by pulling together all your existing tools and giving you flexibility to deploy your way.

KubeInit provides Ansible playbooks and roles for the deployment and configuration of multiple Kubernetes distributions.

Salt is Python-based, open-source software for event-driven IT automation, remote task execution, and configuration management. It supports the "Infrastructure as Code" approach to data center system and network deployment and management, configuration automation, SecOps orchestration, vulnerability remediation, and hybrid cloud control.

Terraform is an open-source infrastructure as code software tool created by HashiCorp. It enables users to define and provision a datacenter infrastructure using a high-level configuration language known as HashiCorp Configuration Language (HCL), or optionally JSON.

Consul is a service networking solution to connect and secure services across any runtime platform and public or private cloud.

Packer is lightweight, runs on every major operating system, and is highly performant, creating machine images for multiple platforms in parallel. Packer does not replace configuration management like Chef or Puppet. In fact, when building images, Packer is able to use tools like Chef or Puppet to install software onto the image.

Nomad is a highly available, distributed, data-center aware cluster and application scheduler designed to support the modern datacenter with support for long-running services, batch jobs, and much more.

Vagrant is a tool for building and managing virtual machine environments in a single workflow. With an easy-to-use workflow and focus on automation, Vagrant lowers development environment setup time and increases production parity.

Vault is a tool for securely accessing secrets. A secret is anything that you want to tightly control access to, such as API keys, passwords, certificates, and more. Vault provides a unified interface to any secret, while providing tight access control and recording a detailed audit log.

CFEngine is an open-source configuration management system, written by Mark Burgess. Its primary function is to provide automated configuration and maintenance of large-scale computer systems, including the unified management of servers, desktops, consumer and industrial devices, embedded networked devices, mobile smartphones, and tablet computers.

Octopus Deploy is the deployment automation server for your entire team, designed to make it easy to orchestrate releases and deploy applications, whether on-premises or in the cloud.

AWS CodeDeploy is a fully managed deployment service that automates software deployments to a variety of compute services such as Amazon EC2, AWS Fargate, AWS Lambda, and your on-premises servers. AWS CodeDeploy makes it easier for you to rapidly release new features, helps you avoid downtime during application deployment, and handles the complexity of updating your applications.

Kubernetes is an open-source container-orchestration system for automating application deployment, scaling, and management. It was originally designed by Google, and is now maintained by the Cloud Native Computing Foundation.

Docker is a set of platform as a service products that use OS-level virtualization to deliver software in packages called containers. Containers are isolated from one another and bundle their own software, libraries and configuration files; they can communicate with each other through well-defined channels. All containers are run by a single operating-system kernel and are thus more lightweight than virtual machines.

PowerShell/PowerShell Core is a cross-platform (Windows, Linux, and macOS) automation and configuration tool/framework that works well with your existing tools and is optimized for dealing with structured data (e.g. JSON, CSV, XML, etc.), REST APIs, and object models. It includes a command-line shell, an associated scripting language and a framework for processing cmdlets.

Hyper-V creates virtual machines on Windows 10. Hyper-V can be enabled in many ways including using the Windows 10 control panel, PowerShell or using the Deployment Imaging Servicing and Management tool (DISM).

Cloud Hypervisor is an open source Virtual Machine Monitor (VMM) that runs on top of KVM. The project focuses on exclusively running modern, cloud workloads, on top of a limited set of hardware architectures and platforms. Cloud workloads refers to those that are usually run by customers inside a cloud provider. Cloud Hypervisor is implemented in Rust and is based on the rust-vmm crates.

VMware vSphere Hypervisor is a bare-metal hypervisor that virtualizes servers, allowing you to consolidate your applications while saving time and money managing your IT infrastructure.

VMware vSphere is the industry-leading compute virtualization platform, and your first step to application modernization. It has been rearchitected with native Kubernetes to allow customers to modernize the 70 million+ workloads now running on vSphere.

VMware Tanzu is a centralized management platform for consistently operating and securing your Kubernetes infrastructure and modern applications across multiple teams and private/public clouds.

Rancher is a complete software stack for teams adopting containers. It addresses the operational and security challenges of managing multiple Kubernetes clusters, while providing DevOps teams with integrated tools for running containerized workloads.

K3s is a highly available, certified Kubernetes distribution designed for production workloads in unattended, resource-constrained, remote locations or inside IoT appliances.

Rook is an open source cloud-native storage orchestrator for Kubernetes that turns distributed storage systems into self-managing, self-scaling, self-healing storage services. It automates the tasks of a storage administrator: deployment, bootstrapping, configuration, provisioning, scaling, upgrading, migration, disaster recovery, monitoring, and resource management.

Google Kubernetes Engine (GKE) is a managed, production-ready environment for deploying containerized applications.

Anthos is a modern application management platform that provides a consistent development and operations experience for cloud and on-premises environments.

AWS ECS is a highly scalable, high-performance container orchestration service that supports Docker containers and allows you to easily run and scale containerized applications on AWS. Amazon ECS eliminates the need for you to install and operate your own container orchestration software, manage and scale a cluster of virtual machines, or schedule containers on those virtual machines.

Apache Mesos is a cluster manager that provides efficient resource isolation and sharing across distributed applications, or frameworks. It can run Hadoop, Jenkins, Spark, Aurora, and other frameworks on a dynamically shared pool of nodes.

Apache Spark is a unified analytics engine for big data processing, with built-in modules for streaming, SQL, machine learning and graph processing.

Apache Hadoop is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Rather than rely on hardware to deliver high-availability, the library itself is designed to detect and handle failures at the application layer, so delivering a highly-available service on top of a cluster of computers, each of which may be prone to failures.

Microsoft Azure is a cloud computing service created by Microsoft for building, testing, deploying, and managing applications and services through Microsoft-managed data centers.

Azure Functions is a solution for easily running small pieces of code, or "functions," in the cloud. You can write just the code you need for the problem at hand, without worrying about a whole application or the infrastructure to run it.

Rkt is a pod-native container engine for Linux. It is composable, secure, and built on standards.

AWS Lambda is an event-driven, serverless computing platform provided by Amazon as a part of the Amazon Web Services. It is a computing service that runs code in response to events and automatically manages the computing resources required by that code.

Helm is the Kubernetes Package Manager.

Kubespray is a tool that combines Kubernetes and Ansible to easily install Kubernetes clusters that can be deployed on AWS, GCE, Azure, OpenStack, vSphere, Packet (bare metal), Oracle Cloud Infrastructure (Experimental), or Baremetal.

OKD is a community distribution of Kubernetes optimized for continuous application development and multi-tenant deployment. OKD adds developer and operations-centric tools on top of Kubernetes to enable rapid application development, easy deployment and scaling, and long-term lifecycle maintenance for small and large teams.

Odo is a fast, iterative, and straightforward CLI tool for developers who write, build, and deploy applications on Kubernetes and OpenShift.

Knative is a Kubernetes-based platform to build, deploy, and manage modern serverless workloads. Knative takes care of the operational overhead details of networking, autoscaling (even to zero), and revision tracking.

Kubebox is a Terminal and Web console for Kubernetes.

Kubesec is a security risk analysis tool for Kubernetes resources.

Replex is a Kubernetes governance and cost management tool for the cloud-native enterprise.

Virtual Kubelet is an open-source Kubernetes kubelet implementation that masquerades as a kubelet.

Telepresence is a tool for fast, local development of Kubernetes and OpenShift microservices.

Weave Scope is a tool that automatically detects processes, containers, and hosts. No kernel modules, no agents, no special libraries, no coding. It integrates seamlessly with Docker, Kubernetes, DCOS and AWS ECS.

Nuclio is a high-performance "serverless" framework focused on data, I/O, and compute intensive workloads. It is well integrated with popular data science tools, such as Jupyter and Kubeflow; supports a variety of data and streaming sources; and supports execution over CPUs and GPUs.

Supergiant Control is a tool that manages the lifecycle of clusters on your infrastructure and allows deployment of applications via HELM. Its deployment and configuration workflows will help you to get up and running with Kubernetes faster.

Supergiant Capacity - Beta is a tool that ensures that the right hardware is available for the required resource load of your Kubernetes cluster at any given time. This helps prevent over-provisioning of your container environment and overspending on your hardware budget.

Test suite for Kubernetes is a test suite that consists of two Helm charts for network bandwidth testing and load testing a Kubernetes cluster.

Keel is a Kubernetes Operator to automate Helm, DaemonSet, StatefulSet & Deployment updates.

Kube Monkey is an implementation of Netflix's Chaos Monkey for Kubernetes clusters. It randomly deletes Kubernetes (k8s) pods in the cluster encouraging and validating the development of failure-resilient services.

Kube State Metrics (KSM) is a simple service that listens to the Kubernetes API server and generates metrics about the state of the objects. It's not focused on the health of the individual Kubernetes components, but rather on the health of the various objects inside, such as deployments, nodes and pods.

Sonobuoy is a diagnostic tool that makes it easier to understand the state of a Kubernetes cluster by running a choice of configuration tests in an accessible and non-destructive manner.

PowerfulSeal is a powerful testing tool for your Kubernetes clusters, so that you can detect problems as early as possible.

Test Infra is a repository that contains tools and configuration files for the testing and automation needs of the Kubernetes project.

cAdvisor (Container Advisor) is a tool that provides container users an understanding of the resource usage and performance characteristics of their running containers. It is a running daemon that collects, aggregates, processes, and exports information about running containers. Specifically, for each container it keeps resource isolation parameters, historical resource usage, histograms of complete historical resource usage and network statistics.

Etcd is a distributed key-value store that provides a reliable way to store data that needs to be accessed by a distributed system or cluster of machines. Etcd is used as the backend for service discovery and stores cluster state and configuration for Kubernetes.

OpenStack is a free and open-source software platform for cloud computing, mostly deployed as infrastructure-as-a-service that controls large pools of compute, storage, and networking resources throughout a datacenter, managed through a dashboard or via the OpenStack API. OpenStack works with popular enterprise and open source technologies making it ideal for heterogeneous infrastructure.

Cloud Foundry is an open source, multi cloud application platform as a service that makes it faster and easier to build, test, deploy and scale applications, providing a choice of clouds, developer frameworks, and application services. It is an open source project and is available through a variety of private cloud distributions and public cloud instances.

Splunk software is used for searching, monitoring, and analyzing machine-generated big data, via a Web-style interface.

Prometheus is a free software application used for event monitoring and alerting. It records real-time metrics in a time series database (allowing for high dimensionality) built using an HTTP pull model, with flexible queries and real-time alerting.

Loki is a horizontally-scalable, highly-available, multi-tenant log aggregation system inspired by Prometheus. It is designed to be very cost effective and easy to operate. It does not index the contents of the logs, but rather a set of labels for each log stream.

Thanos is a set of components that can be composed into a highly available metric system with unlimited storage capacity, which can be added seamlessly on top of existing Prometheus deployments.

Container Storage Interface (CSI) is an API that lets container orchestration platforms like Kubernetes seamlessly communicate with stored data via a plug-in.

OpenEBS is a Kubernetes-based tool to create stateful applications using Container Attached Storage.

Elasticsearch is a search engine based on the Lucene library. It provides a distributed, multitenant-capable full-text search engine with an HTTP web interface and schema-free JSON documents. Elasticsearch is developed in Java.

Logstash is a tool for managing events and logs. When used generically, the term encompasses a larger system of log collection, processing, storage and searching activities.

Kibana is an open source data visualization plugin for Elasticsearch. It provides visualization capabilities on top of the content indexed on an Elasticsearch cluster. Users can create bar, line and scatter plots, or pie charts and maps on top of large volumes of data.

New Relic is a SaaS-based monitoring tool that fully supports the way DevOps teams work in the modern enterprise by streamlining your workflows with today's collaboration software and orchestration tools like Puppet, Chef, and Ansible.

Nagios is a free and open source computer-software application that monitors systems, networks and infrastructure. Nagios offers monitoring and alerting services for servers, switches, applications and services. It alerts users when things go wrong and alerts them a second time when the problem has been resolved.

SonarQube is an open-source platform developed by SonarSource for continuous inspection of code quality to perform automatic reviews with static analysis of code to detect bugs, code smells, and security vulnerabilities on 20+ programming languages.

Genie is a federated job orchestration engine developed by Netflix. Genie provides REST APIs to run a variety of big data jobs like Hadoop, Pig, Hive, Spark, Presto, Sqoop and more. It also provides APIs for managing the metadata of many distributed processing clusters and the commands and applications which run on them.

Inviso is a lightweight tool that provides the ability to search for Hadoop jobs, visualize the performance, and view cluster utilization.

Fenzo is a scheduler Java library for Apache Mesos frameworks that supports plugins for scheduling optimizations and facilitates cluster autoscaling.

Dynomite is a thin, distributed dynamo layer for different storage engines and protocols, which includes Redis and Memcached. Dynomite supports multi-datacenter replication and is designed for High Availability (HA).

Dyno is a tool that is used to scale a Java client application utilizing Dynomite.

Raigad is a process/tool that runs alongside Elasticsearch to automate backup/recovery, Deployments and Centralized Configuration management.

Priam is a process/tool that runs alongside Apache Cassandra to automate backup/recovery, Deployments and Centralized Configuration management.

Chaos Monkey is a resiliency tool that randomly terminates virtual machine instances and containers that run inside of your production environment. Chaos Monkey should work with any backend that Spinnaker supports (AWS, Google Compute Engine, Microsoft Azure, Kubernetes, and Cloud Foundry).

Falcor is a JavaScript library for efficient data fetching. Falcor lets you represent all your remote data sources as a single domain model via a virtual JSON graph, whether in memory on the client or over the network on the server.

Restify is a framework, utilizing connect style middleware for building REST APIs.

Traefik is an open source Edge Router that makes publishing your services a fun and easy experience. It receives requests on behalf of your system and finds out which components are responsible for handling them. What sets Traefik apart, besides its many features, is that it automatically discovers the right configuration for your services.

Jira is a proprietary issue tracking product developed by Atlassian that allows bug tracking and agile project management.

Pivotal Tracker is the agile project management tool of choice for developers around the world for real-time collaboration around a shared, prioritized backlog.

Networking

Networking Learning Resources

AWS Certified Security - Specialty Certification

Microsoft Certified: Azure Security Engineer Associate

Google Cloud Certified Professional Cloud Security Engineer

The Red Hat Certified Specialist in Security: Linux

Linux Professional Institute LPIC-3 Enterprise Security Certification

Cybersecurity Training and Courses from IBM Skills

Cybersecurity Courses and Certifications by Offensive Security

Citrix Certified Associate – Networking (CCA-N)

Citrix Certified Professional – Virtualization (CCP-V)

Certified Information Security Manager (CISM)

Wireshark Certified Network Analyst (WCNA)

Juniper Networks Certification Program Enterprise (JNCP)

Networking courses and specializations from Coursera

Network & Security Courses from Udemy

Network & Security Courses from edX

Tools & Networking Concepts

• Connection: In networking, a connection refers to pieces of related information that are transferred through a network. This generally implies that a connection is built before the data transfer (by following the procedures laid out in a protocol) and then is deconstructed at the end of the data transfer.

• Packet: A packet is, generally speaking, the most basic unit that is transferred over a network. When communicating over a network, packets are the envelopes that carry your data (in pieces) from one end point to the other.

Packets have a header portion that contains information about the packet, including the source and destination, timestamps, and network hops. The main portion of a packet contains the actual data being transferred. It is sometimes called the body or the payload.

• Network Interface: A network interface can refer to any kind of software interface to networking hardware. For instance, if you have two network cards in your computer, you can control and configure each network interface associated with them individually.

A network interface may be associated with a physical device, or it may be a representation of a virtual interface. The "loop-back" device, which is a virtual interface to the local machine, is an example of this.

• LAN: LAN stands for "local area network". It refers to a network or a portion of a network that is not publicly accessible to the greater internet. A home or office network is an example of a LAN.

• WAN: WAN stands for "wide area network". It means a network that is much more extensive than a LAN. While WAN is the relevant term to use to describe large, dispersed networks in general, it usually refers to the internet as a whole.

If an interface is connected to the WAN, it is generally assumed that it is reachable through the internet.

• Protocol: A protocol is a set of rules and standards that basically define a language that devices can use to communicate. There are a great number of protocols in use extensively in networking, and they are often implemented in different layers.

Some low level protocols are TCP, UDP, IP, and ICMP. Some familiar examples of application layer protocols, built on these lower protocols, are HTTP (for accessing web content), SSH, TLS/SSL, and FTP.

• Port: A port is an address on a single machine that can be tied to a specific piece of software. It is not a physical interface or location, but it allows your server to be able to communicate using more than one application.

• Firewall: A firewall is a program that decides whether traffic coming into a server or going out should be allowed. A firewall usually works by creating rules for which type of traffic is acceptable on which ports. Generally, firewalls block ports that are not used by a specific application on a server.

• NAT: Network address translation is a way to translate requests that are incoming into a routing server to the relevant devices or servers that it knows about in the LAN. This is usually implemented in physical LANs as a way to route requests through one IP address to the necessary backend servers.

• VPN: Virtual private network is a means of connecting separate LANs through the internet, while maintaining privacy. This is used as a means of connecting remote systems as if they were on a local network, often for security reasons.
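The Packet entry above describes data travelling in pieces, each wrapped in a header plus a payload. A minimal Python sketch of that idea follows. The `Packet` class, its field names, the example addresses, and the 8-byte chunk size are illustrative assumptions, not a real wire format.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Packet:
    # Header fields (hypothetical, chosen for illustration)
    source: str
    destination: str
    sequence: int
    # Body of the packet, also called the payload
    payload: bytes


def split_into_packets(data: bytes, source: str, destination: str, size: int = 8) -> List[Packet]:
    """Cut data into fixed-size pieces and wrap each piece in a packet."""
    return [
        Packet(source, destination, seq, data[offset:offset + size])
        for seq, offset in enumerate(range(0, len(data), size))
    ]


def reassemble(packets: List[Packet]) -> bytes:
    """Rebuild the original data, even if the packets arrived out of order."""
    ordered = sorted(packets, key=lambda p: p.sequence)
    return b"".join(p.payload for p in ordered)


message = b"hello from one end point to the other"
packets = split_into_packets(message, "10.0.0.1", "10.0.0.2")
# Deliver the packets in reverse order to show that the header is what
# lets the receiving side put the data back together.
restored = reassemble(list(reversed(packets)))
```

The sequence number in the header is what allows reassembly regardless of arrival order; real protocols carry more header fields than this toy does.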

Network Layers

While networking is often discussed in terms of topology in a horizontal way, between hosts, its implementation is layered in a vertical fashion throughout a computer or network. This means that there are multiple technologies and protocols that are built on top of each other in order for communication to function more easily. Each successive, higher layer abstracts the raw data a little bit more, and makes it simpler to use for applications and users. It also allows you to leverage lower layers in new ways without having to invest the time and energy to develop the protocols and applications that handle those types of traffic.

As data is sent out of one machine, it begins at the top of the stack and filters downwards. At the lowest level, actual transmission to another machine takes place. At this point, the data travels back up through the layers of the other computer. Each layer has the ability to add its own "wrapper" around the data that it receives from the adjacent layer, which will help the layers that come after decide what to do with the data when it is passed off.
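The wrapping and unwrapping described above can be sketched in a few lines of Python. This is a toy model only: the four layer names and the bracketed text "wrappers" are assumptions made for illustration, while real layers add binary headers.

```python
# Layers listed from the top of the stack to the bottom (illustrative names)
LAYERS = ["application", "transport", "internet", "link"]


def send(data: str) -> str:
    """Walk down the stack; each layer wraps what it got from the layer above."""
    for layer in LAYERS:
        data = f"[{layer}|{data}]"
    return data


def receive(frame: str) -> str:
    """Walk back up the stack; each layer strips its own wrapper."""
    for layer in reversed(LAYERS):
        prefix = f"[{layer}|"
        if not (frame.startswith(prefix) and frame.endswith("]")):
            raise ValueError(f"missing {layer} wrapper")
        frame = frame[len(prefix):-1]
    return frame


wire = send("GET /index.html")
original = receive(wire)
```

On the sending side the lowest layer's wrapper ends up outermost, so the receiving side removes wrappers in the opposite order.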

One method of talking about the different layers of network communication is the OSI model. OSI stands for Open Systems Interconnection. This model defines seven separate layers. The layers in this model are:

• Application: The application layer is the layer that the users and user-applications most often interact with. Network communication is discussed in terms of availability of resources, partners to communicate with, and data synchronization.

• Presentation: The presentation layer is responsible for mapping resources and creating context. It is used to translate lower level networking data into data that applications expect to see.

• Session: The session layer is a connection handler. It creates, maintains, and destroys connections between nodes in a persistent way.

• Transport: The transport layer is responsible for handing the layers above it a reliable connection. In this context, reliable refers to the ability to verify that a piece of data was received intact at the other end of the connection. This layer can resend information that has been dropped or corrupted and can acknowledge the receipt of data to remote computers.

• Network: The network layer is used to route data between different nodes on the network. It uses addresses to be able to tell which computer to send information to. This layer can also break apart larger messages into smaller chunks to be reassembled on the opposite end.

• Data Link: This layer is implemented as a method of establishing and maintaining reliable links between different nodes or devices on a network using existing physical connections.

• Physical: The physical layer is responsible for handling the actual physical devices that are used to make a connection. This layer involves the bare software that manages physical connections as well as the hardware itself (like Ethernet).

The TCP/IP model, more commonly known as the Internet protocol suite, is another layering model that is simpler and has been widely adopted. It defines four separate layers, some of which overlap with the OSI model:

• Application: In this model, the application layer is responsible for creating and transmitting user data between applications. The applications can be on remote systems, and should appear to operate as if locally to the end user.

The communication takes place between peers on the network.

• Transport: The transport layer is responsible for communication between processes. This level of networking utilizes ports to address different services. It can build up unreliable or reliable connections depending on the type of protocol used.

• Internet: The internet layer is used to transport data from node to node in a network. This layer is aware of the endpoints of the connections, but does not worry about the actual connection needed to get from one place to another. IP addresses are defined in this layer as a way of reaching remote systems in an addressable manner.

• Link: The link layer implements the actual topology of the local network that allows the internet layer to present an addressable interface. It establishes connections between neighboring nodes to send data.
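To relate the two models, the lookup table below records one commonly used correspondence between the seven OSI layers and the four TCP/IP layers. The guide itself does not spell this mapping out, and references differ on exactly where the boundaries fall, so treat it as an approximation.

```python
# One common (approximate) mapping from OSI layers to TCP/IP layers
OSI_TO_TCPIP = {
    "Application": "Application",
    "Presentation": "Application",
    "Session": "Application",
    "Transport": "Transport",
    "Network": "Internet",
    "Data Link": "Link",
    "Physical": "Link",
}


def osi_layers_for(tcpip_layer: str) -> list:
    """List the OSI layers that are usually folded into one TCP/IP layer."""
    return [osi for osi, tcpip in OSI_TO_TCPIP.items() if tcpip == tcpip_layer]


link_equivalents = osi_layers_for("Link")
```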

Interfaces

Interfaces are networking communication points for your computer. Each interface is associated with a physical or virtual networking device. Typically, your server will have one configurable network interface for each Ethernet or wireless internet card you have. In addition, it will define a virtual network interface called the "loopback" or localhost interface. This is used as an interface to connect applications and processes on a single computer to other applications and processes. You can see this referenced as the "lo" interface in many tools.
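As a quick way to see the interfaces on a machine, Python's standard library can ask the operating system for them. This is a small sketch; the names that come back (for example `lo` on Linux) depend entirely on the host, so no particular output is assumed.

```python
import socket


def list_interfaces() -> list:
    """Return (index, name) pairs for the interfaces the OS reports."""
    return socket.if_nameindex()


for index, name in list_interfaces():
    print(index, name)
```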

Protocols

Networking works by piggybacking a number of different protocols on top of each other. In this way, one piece of data can be transmitted using multiple protocols encapsulated within one another.

Media access control is a communications protocol that is used to distinguish specific devices. Each device is supposed to get a unique MAC address during the manufacturing process that differentiates it from every other device on the internet. Addressing hardware by the MAC address allows you to reference a device by a unique value even when the software on top may change the name for that specific device during operation. Media access control is one of the only protocols from the link layer that you are likely to interact with on a regular basis.
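A MAC address is a 48-bit value that is usually written as six hexadecimal octets. The sketch below formats one in that notation. `format_mac` is a helper name chosen here for illustration, and `uuid.getnode()` may fall back to a random number when no hardware address can be read, so the local value is not guaranteed to be a real MAC.

```python
import uuid


def format_mac(value: int) -> str:
    """Render a 48-bit integer as six colon-separated hex octets."""
    return ":".join(f"{(value >> shift) & 0xFF:02x}" for shift in range(40, -1, -8))


# Best-effort hardware address of this machine
local_mac = format_mac(uuid.getnode())
```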

The IP protocol is one of the fundamental protocols that allow the internet to work. IP addresses are unique on each network and they allow machines to address each other across a network. It is implemented on the internet layer in the TCP/IP model. Networks can be linked together, but traffic must be routed when crossing network boundaries. This protocol assumes an unreliable network and multiple paths to the same destination that it can dynamically change between. There are a number of different implementations of the protocol. The most common implementation today is IPv4, although IPv6 is growing in popularity as an alternative due to the scarcity of IPv4 addresses available and improvements in the protocol's capabilities.
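Two points from this paragraph, routing across network boundaries and the scarcity of IPv4 addresses, can be illustrated with Python's `ipaddress` module. The addresses and the `needs_routing` helper are made up for this sketch.

```python
import ipaddress

network = ipaddress.ip_network("192.168.1.0/24")
local = ipaddress.ip_address("192.168.1.42")
neighbour = ipaddress.ip_address("192.168.1.1")
remote = ipaddress.ip_address("203.0.113.7")


def needs_routing(src, dst, net) -> bool:
    """True when src and dst are not both inside the same network."""
    return not (src in net and dst in net)


# IPv4 addresses are 32 bits wide, IPv6 addresses are 128 bits wide
ipv4_total = 2 ** 32
ipv6_total = 2 ** 128
```

Traffic from `local` to `neighbour` stays inside the /24 network, while traffic to `remote` crosses a network boundary and has to be routed.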

ICMP: Internet control message protocol is used to send messages between devices to indicate availability or error conditions. These packets are used in a variety of network diagnostic tools, such as ping and traceroute. Usually ICMP packets are transmitted when a packet of a different kind meets some kind of a problem. Basically, they are used as a feedback mechanism for network communications.
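To make the ping case concrete, the sketch below builds the bytes of an ICMP echo request (type 8, code 0) with the standard Internet checksum. It only constructs the message. Sending it would need a raw socket and usually elevated privileges, which is left out here, and the identifier, sequence number, and payload are arbitrary example values.

```python
import struct


def internet_checksum(data: bytes) -> int:
    """One's-complement sum of 16-bit words, as described in RFC 1071."""
    if len(data) % 2:
        data += b"\x00"  # pad to a whole number of 16-bit words
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold carries back in
    return ~total & 0xFFFF


def build_echo_request(identifier: int, sequence: int, payload: bytes) -> bytes:
    """Assemble an ICMP echo request: header with checksum, then payload."""
    # The checksum is computed with the checksum field itself set to zero
    header = struct.pack("!BBHHH", 8, 0, 0, identifier, sequence)
    checksum = internet_checksum(header + payload)
    return struct.pack("!BBHHH", 8, 0, checksum, identifier, sequence) + payload


packet = build_echo_request(identifier=0x1234, sequence=1, payload=b"ping")
```

A receiver can validate the message by running the same checksum over the whole packet; a correct packet yields zero.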

TCP: Transmission control protocol is implemented in the transport layer of the TCP/IP model and is used to establish reliable connections. TCP is one of the protocols that encapsulates data into packets. It then transfers these to the remote end of the connection using the methods available on the lower layers. On the other end, it can check for errors, request certain pieces to be resent, and reassemble the information into one logical piece to send to the application layer. The protocol builds up a connection prior to data transfer using a system called a three-way handshake. This is a way for the two ends of the communication to acknowledge the request and agree upon a method of ensuring data reliability. After the data has been sent, the connection is torn down using a similar four-way handshake. TCP is the protocol of choice for many of the most popular uses for the internet, including WWW, FTP, SSH, and email. It is safe to say that the internet we know today would not be here without TCP.
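A minimal loopback example shows the connection-oriented behaviour from the application's point of view. The operating system performs the handshake and teardown, and the code only sees a connected stream. The function names are hypothetical, and `socket.create_server` assumes Python 3.8 or later.

```python
import socket
import threading


def echo_once(server: socket.socket) -> None:
    """Accept one connection and send back whatever arrives."""
    conn, _ = server.accept()
    with conn:
        conn.sendall(conn.recv(1024))


def tcp_round_trip(message: bytes) -> bytes:
    # Port 0 asks the OS to pick any free port
    with socket.create_server(("127.0.0.1", 0)) as server:
        port = server.getsockname()[1]
        worker = threading.Thread(target=echo_once, args=(server,), daemon=True)
        worker.start()
        # create_connection returns only after the handshake has completed
        with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
            client.sendall(message)
            reply = client.recv(1024)
        worker.join(timeout=5)
        return reply


reply = tcp_round_trip(b"hello over tcp")
```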

UDP: User datagram protocol is a popular companion protocol to TCP and is also implemented in the transport layer. The fundamental difference between UDP and TCP is that UDP offers unreliable data transfer. It does not verify that data has been received on the other end of the connection. This might sound like a bad thing, and for many purposes, it is. However, it is also extremely important for some functions. Because it is not required to wait for confirmation that the data was received, and is never forced to resend data, UDP is much faster than TCP. It does not establish a connection with the remote host; it simply fires off the data to that host and doesn't care if it is accepted or not. Since UDP is a simple transaction, it is useful for simple communications like querying for network resources. It also doesn't maintain a state, which makes it great for transmitting data from one machine to many real-time clients. This makes it ideal for VOIP, games, and other applications that cannot afford delays.
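For contrast with TCP, here is a loopback sketch of a single UDP datagram. There is no connection setup: the sender addresses the datagram and sends it. The function name is hypothetical, and delivery is only dependable here because both sockets live on the same host.

```python
import socket


def udp_round_trip(message: bytes) -> bytes:
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver, \
            socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        receiver.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        receiver.settimeout(5)  # UDP gives no delivery guarantee, so do not wait forever
        # No connect() and no handshake: address the datagram and send it
        sender.sendto(message, receiver.getsockname())
        data, _ = receiver.recvfrom(1024)
        return data


received = udp_round_trip(b"hello over udp")
```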

HTTP: Hypertext transfer protocol is a protocol defined in the application layer that forms the basis for communication on the web. HTTP defines a number of functions that tell the remote system what you are requesting. For instance, GET, POST, and DELETE all interact with the requested data in a different way.
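The sketch below starts a throwaway HTTP server on the loopback interface and sends it GET, POST, and DELETE requests to show that each method is handled differently. `DemoHandler`, the `/items/1` path, and the response texts are all invented for this example.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class DemoHandler(BaseHTTPRequestHandler):
    def _reply(self, text: str) -> None:
        body = text.encode()
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_GET(self):
        self._reply(f"read {self.path}")

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        self._reply(f"created {self.rfile.read(length).decode()}")

    def do_DELETE(self):
        self._reply(f"removed {self.path}")

    def log_message(self, *args):
        pass  # keep the demo quiet


def run_demo() -> dict:
    server = HTTPServer(("127.0.0.1", 0), DemoHandler)  # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    results = {}
    try:
        for method, body in (("GET", None), ("POST", "note"), ("DELETE", None)):
            conn = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
            conn.request(method, "/items/1", body=body)
            results[method] = conn.getresponse().read().decode()
            conn.close()
    finally:
        server.shutdown()
        server.server_close()
    return results


results = run_demo()
```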

JSON Web Token (JWT) is a compact URL-safe means of representing claims to be transferred between two parties. The claims in a JWT are encoded as a JSON object that is digitally signed using JSON Web Signature (JWS).
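The structure of a signed token can be sketched with the standard library alone. The example assumes the HS256 algorithm (HMAC with SHA-256) and a shared secret; the function names, claims, and secret are hypothetical, and the verifier checks only the signature, not the header or expiry claims. Production code would normally use a maintained JWT library.

```python
import base64
import hashlib
import hmac
import json


def b64url(data: bytes) -> str:
    """URL-safe base64 without padding, as used for JWT segments."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_jwt(claims: dict, secret: bytes) -> str:
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        b64url(json.dumps(part, separators=(",", ":")).encode())
        for part in (header, claims)
    )
    signature = hmac.new(secret, signing_input.encode(), hashlib.sha256).digest()
    return f"{signing_input}.{b64url(signature)}"


def verify_jwt(token: str, secret: bytes) -> dict:
    """Check the signature and return the claims; raise ValueError on mismatch."""
    signing_input, _, signature = token.rpartition(".")
    expected = hmac.new(secret, signing_input.encode(), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, b64url_decode(signature)):
        raise ValueError("signature mismatch")
    return json.loads(b64url_decode(signing_input.split(".")[1]))


token = sign_jwt({"sub": "user-1", "admin": False}, b"demo-secret")
claims = verify_jwt(token, b"demo-secret")
```

The token is three dot-separated segments: header, claims, and signature. Anyone can read the first two, but only a holder of the secret can produce a matching third.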

OAuth 2.0 is an open standard authorization framework that enables applications to obtain limited access to user accounts on an HTTP service, such as Amazon, Google, Facebook, Microsoft, Twitter, GitHub, and DigitalOcean. It works by delegating user authentication to the service that hosts the user account, and authorizing third-party applications to access the user account.

FTP: File transfer protocol is in the application layer and provides a way of transferring complete files from one host to another. It is inherently insecure, so it is not recommended for any externally facing network unless it is implemented as a public, download-only resource.

DNS: Domain name system is an application layer protocol used to provide a human-friendly naming mechanism for internet resources. It is what ties a domain name to an IP address and allows you to access sites by name in your browser.
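From a program's point of view, name resolution is a single call to the system resolver. The helper below is a small sketch (`resolve` is a name chosen here). What it returns depends on the host's resolver configuration and network access, so no particular result is assumed.

```python
import socket


def resolve(name: str) -> list:
    """Return the distinct IP addresses the system resolver finds for name."""
    infos = socket.getaddrinfo(name, None, proto=socket.IPPROTO_TCP)
    # Each entry ends with a socket address whose first item is the IP string
    return sorted({info[4][0] for info in infos})
```

Calling `resolve("localhost")` typically yields a loopback address, while a public domain name yields whatever addresses its DNS records currently point to.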

SSH: Secure shell is an encrypted protocol implemented in the application layer that can be used to communicate with a remote server in a secure way. Many additional technologies are built around this protocol because of its end-to-end encryption and ubiquity.

There are many other protocols that we haven't covered that are equally important. However, this should give you a good overview of some of the fundamental technologies that make the internet and networking possible.

Virtualization

KVM (for Kernel-based Virtual Machine) is a full virtualization solution for Linux on x86 hardware containing virtualization extensions (Intel VT or AMD-V). It consists of a loadable kernel module, kvm.ko, that provides the core virtualization infrastructure and a processor specific module, kvm-intel.ko or kvm-amd.ko.
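On an x86 Linux host, a quick check for the hardware extensions mentioned above is to look for the `vmx` (Intel VT) or `svm` (AMD-V) CPU flag, and to see whether the `/dev/kvm` device exists. The sketch below assumes the usual `/proc/cpuinfo` layout; the function names are chosen here for illustration.

```python
import os


def virtualization_extension(cpuinfo: str):
    """Return 'Intel VT' or 'AMD-V' if the CPU flags advertise one, else None."""
    for line in cpuinfo.splitlines():
        if line.startswith("flags"):
            flags = line.partition(":")[2].split()
            if "vmx" in flags:
                return "Intel VT"
            if "svm" in flags:
                return "AMD-V"
    return None


def kvm_device_present() -> bool:
    """The /dev/kvm device normally appears once the kvm modules are loaded."""
    return os.path.exists("/dev/kvm")


if os.path.exists("/proc/cpuinfo"):
    with open("/proc/cpuinfo") as handle:
        print(virtualization_extension(handle.read()), kvm_device_present())
```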

QEMU is a fast processor emulator using a portable dynamic translator. QEMU emulates a full system, including a processor and various peripherals. It can be used to launch a different Operating System without rebooting the PC or to debug system code.

Hyper-V enables running virtualized computer systems on top of a physical host. These virtualized systems can be used and managed just as if they were physical computer systems; however, they exist in a virtualized and isolated environment. Special software called a hypervisor manages access between the virtual systems and the physical hardware resources. Virtualization enables quick deployment of computer systems, a way to quickly restore systems to a previously known good state, and the ability to migrate systems between physical hosts.

VirtManager is a graphical tool for managing virtual machines via libvirt. Most usage is with QEMU/KVM virtual machines, but Xen and libvirt LXC containers are well supported. Common operations for any libvirt driver should work.

oVirt is an open-source distributed virtualization solution, designed to manage your entire enterprise infrastructure. oVirt uses the trusted KVM hypervisor and is built upon several other community projects, including libvirt, Gluster, PatternFly, and Ansible. It was founded by Red Hat as a community project on which Red Hat Enterprise Virtualization is based, and it allows for centralized management of virtual machines, compute, storage, and networking resources from an easy-to-use web-based front end with platform-independent access.

Xen is focused on advancing virtualization in a number of different commercial and open source applications, including server virtualization, Infrastructure as a Service (IaaS), desktop virtualization, security applications, embedded and hardware appliances, and automotive/aviation.

Ganeti is a virtual machine cluster management tool built on top of existing virtualization technologies such as Xen or KVM and other open source software. Once installed, the tool assumes management of the virtual instances (Xen DomU).

Packer is an open source tool for creating identical machine images for multiple platforms from a single source configuration. Packer is lightweight, runs on every major operating system, and is highly performant, creating machine images for multiple platforms in parallel. Packer does not replace configuration management like Chef or Puppet. In fact, when building images, Packer is able to use tools like Chef or Puppet to install software onto the image.

Vagrant is a tool for building and managing virtual machine environments in a single workflow. With an easy-to-use workflow and focus on automation, Vagrant lowers development environment setup time, increases production parity, and makes the "works on my machine" excuse a relic of the past. It provides easy to configure, reproducible, and portable work environments built on top of industry-standard technology and controlled by a single consistent workflow to help maximize the productivity and flexibility of you and your team.

VMware Workstation is a hosted hypervisor that runs on x64 versions of Windows and Linux operating systems; it enables users to set up virtual machines on a single physical machine, and use them simultaneously along with the actual machine.

VirtualBox is a powerful x86 and AMD64/Intel64 virtualization product for enterprise as well as home use. It is a feature-rich, high-performance product for enterprise customers, and it is also freely available as open source software under the GNU General Public License (GPL).

Databases

Database Learning Resources

SQL is a standard language for storing, manipulating and retrieving data in relational databases.

Learn SQL Skills Online from Coursera

SQL Online Training Courses from LinkedIn Learning

Learn SQL For Free from Codecademy

OracleDB SQL Style Guide Basics

Tableau CRM: BI Software and Tools

Best Practices and Recommendations for SQL Server Clustering in AWS EC2.

Connecting from Google Kubernetes Engine to a Cloud SQL instance.

Educational Microsoft Azure SQL resources

SQL vs. NoSQL Databases: What's the Difference?

Databases and Tools

Azure Data Studio is an open source data management tool that enables working with SQL Server, Azure SQL DB and SQL DW from Windows, macOS and Linux.

Azure SQL Database is the intelligent, scalable, relational database service built for the cloud. It’s evergreen and always up to date, with AI-powered and automated features that optimize performance and durability for you. Serverless compute and Hyperscale storage options automatically scale resources on demand, so you can focus on building new applications without worrying about storage size or resource management.

Azure SQL Managed Instance is a fully managed SQL Server Database engine instance that's hosted in Azure and placed in your network. This deployment model makes it easy to lift and shift your on-premises applications to the cloud with very few application and database changes. Managed instance has split compute and storage components.

Azure Synapse Analytics is a limitless analytics service that brings together enterprise data warehousing and Big Data analytics. It gives you the freedom to query data on your terms, using either serverless or provisioned resources at scale. It brings together the best of the SQL technologies used in enterprise data warehousing, Spark technologies used in big data analytics, and Pipelines for data integration and ETL/ELT.

MSSQL for Visual Studio Code is an extension for developing Microsoft SQL Server, Azure SQL Database and SQL Data Warehouse everywhere with a rich set of functionalities.

SQL Server Data Tools (SSDT) is a development tool for building SQL Server relational databases, Azure SQL Databases, Analysis Services (AS) data models, Integration Services (IS) packages, and Reporting Services (RS) reports. With SSDT, a developer can design and deploy any SQL Server content type with the same ease as they would develop an application in Visual Studio or Visual Studio Code.

Bulk Copy Program (BCP) is a command-line tool that comes with Microsoft SQL Server. It allows you to import and export large amounts of data in and out of SQL Server databases quickly and efficiently.

SQL Server Migration Assistant is a tool from Microsoft that simplifies the database migration process from Oracle to SQL Server, Azure SQL Database, Azure SQL Database Managed Instance, and Azure SQL Data Warehouse.

SQL Server Integration Services is a development platform for building enterprise-level data integration and data transformation solutions. Use Integration Services to solve complex business problems by copying or downloading files, loading data warehouses, cleansing and mining data, and managing SQL Server objects and data.

SQL Server Business Intelligence (BI) is a collection of tools in Microsoft's SQL Server for transforming raw data into information businesses can use to make decisions.

Tableau is data visualization software that works with relational databases, cloud databases, and spreadsheets. Tableau was acquired by Salesforce in August 2019.

DataGrip is a professional database IDE developed by JetBrains that provides context-sensitive code completion, helping you to write SQL code faster. Completion is aware of the table structure, foreign keys, and even database objects created in the code you're editing.

RStudio is an integrated development environment for R and Python, with a console, syntax-highlighting editor that supports direct code execution, and tools for plotting, history, debugging and workspace management.

MySQL is the world's most popular open source relational database. It is also available from Oracle as a fully managed database service for deploying cloud-native applications.

PostgreSQL is a powerful, open source object-relational database system with over 30 years of active development that has earned it a strong reputation for reliability, feature robustness, and performance.

Amazon DynamoDB is a key-value and document database that delivers single-digit millisecond performance at any scale. It is a fully managed, multiregion, multimaster, durable database with built-in security, backup and restore, and in-memory caching for internet-scale applications.

FoundationDB is an open source distributed database designed to handle large volumes of structured data across clusters of commodity servers. It organizes data as an ordered key-value store and employs ACID transactions for all operations. It is especially well-suited for read/write workloads but also has excellent performance for write-intensive workloads. FoundationDB was acquired by Apple in 2015.

CouchbaseDB is an open source, distributed, multi-model NoSQL document-oriented database. It provides a key-value store with a managed cache for sub-millisecond data operations, purpose-built indexers for efficient queries, and a powerful query engine for executing SQL-like queries.

IBM DB2 is a collection of hybrid data management products offering a complete suite of AI-empowered capabilities designed to help you manage both structured and unstructured data on premises as well as in private and public cloud environments. Db2 is built on an intelligent common SQL engine designed for scalability and flexibility.

MongoDB is a document database, meaning it stores data in JSON-like documents.

OracleDB is a powerful, fully managed database that helps developers manage business-critical data with the highest availability, reliability, and security.

MariaDB is an enterprise open source database solution for modern, mission-critical applications.

SQLite is a C-language library that implements a small, fast, self-contained, high-reliability, full-featured SQL database engine. SQLite is the most used database engine in the world. SQLite is built into all mobile phones and most computers and comes bundled inside countless other applications that people use every day.
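Because SQLite is an embedded library rather than a server, a program can create and query a database with no setup at all. The following sketch uses Python's bundled `sqlite3` module with an in-memory database to store, update, and retrieve rows; the `apps` table and its columns are made up for illustration.

```python
import sqlite3
from typing import List, Tuple


def demo() -> List[Tuple[str, int]]:
    """Create a table, insert and update rows, then read them back."""
    conn = sqlite3.connect(":memory:")  # nothing is written to disk
    try:
        conn.execute(
            "CREATE TABLE apps (name TEXT PRIMARY KEY, replicas INTEGER NOT NULL)"
        )
        # Parameter placeholders (?) keep values separate from the SQL text.
        conn.executemany(
            "INSERT INTO apps (name, replicas) VALUES (?, ?)",
            [("web", 3), ("db", 1)],
        )
        conn.execute(
            "UPDATE apps SET replicas = replicas + 1 WHERE name = ?", ("web",)
        )
        conn.commit()
        return conn.execute(
            "SELECT name, replicas FROM apps ORDER BY name"
        ).fetchall()
    finally:
        conn.close()
```

Calling `demo()` returns `[("db", 1), ("web", 4)]`. Replacing `":memory:"` with a file path would persist the same data in a single database file.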

SQLite Database Browser is an open source SQL tool that allows users to create, design, and edit SQLite database files. It lets users show a log of all the SQL commands that have been issued by them and by the application itself.

dbWatch is a complete database monitoring and management solution for SQL Server, Oracle, PostgreSQL, Sybase, MySQL, and Azure. It is designed for proactive management and automation of routine maintenance in large-scale on-premises, hybrid, and cloud database environments.

Cosmos DB Profiler is a real-time visual debugger that allows a development team to gain valuable insight and perspective into their usage of the Cosmos DB database. It identifies over a dozen suspicious behaviors from your application’s interaction with Cosmos DB.

Adminer is an SQL management client tool for managing databases, tables, relations, indexes, users. Adminer has support for all the popular database management systems such as MySQL, MariaDB, PostgreSQL, SQLite, MS SQL, Oracle, Firebird, SimpleDB, Elasticsearch and MongoDB.

DBeaver is an open source database tool for developers and database administrators. It offers support for JDBC-compliant databases such as MySQL, Oracle, IBM DB2, SQL Server, Firebird, SQLite, Sybase, Teradata, Apache Hive, Phoenix, and Presto.

DbVisualizer is a SQL management tool that allows users to manage a wide range of databases such as Oracle, Sybase, SQL Server, MySQL, H2, and SQLite.

AppDynamics Database is a management product for Microsoft SQL Server. With AppDynamics you can monitor and trend key performance metrics such as resource consumption, database objects, schema statistics and more, allowing you to proactively tune and fix issues in a High-Volume Production Environment.

Toad is a SQL Server DBMS toolset developed by Quest. It increases productivity by using extensive automation, intuitive workflows, and built-in expertise. This SQL management tool resolves issues, manages change, and promotes the highest levels of code quality for both relational and non-relational databases.

Lepide SQL Server is an open source storage manager utility to analyse the performance of SQL Servers. It provides a complete overview of all configuration and permission changes being made to your SQL Server environment through an easy-to-use, graphical user interface.

Sequel Pro is a fast macOS database management tool for working with MySQL. This SQL management tool is helpful for interacting with your database, making it easy to add new databases, new tables, and new rows.

Telco 5G

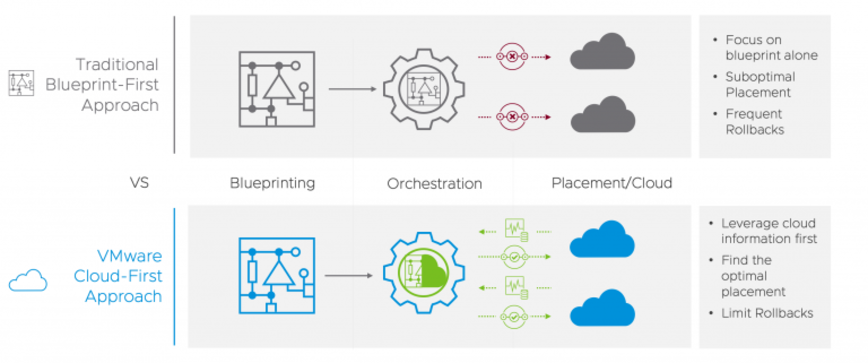

VMware Cloud First Approach. Source: VMware, 2020.

VMware Telco Cloud Automation Components. Source: VMware, 2020.

Telco Learning Resources

HPE (Hewlett Packard Enterprise) Telco Blueprints overview

Network Functions Virtualization Infrastructure (NFVI) by Cisco

Introduction to vCloud NFV Telco Edge from VMware

VMware Telco Cloud Automation (TCA) Architecture Overview

Maturing OpenStack Together To Solve Telco Needs from Red Hat

Red Hat telco ecosystem program

OpenStack for Telcos by Canonical

Open source NFV platform for 5G from Ubuntu

Understanding 5G Technology from Verizon

Verizon and Unity partner to enable 5G & MEC gaming and enterprise applications

Understanding 5G Technology from Intel

Understanding 5G Technology from Qualcomm