LZ4 Versions

Extremely Fast Compression algorithm

v1.9.4

1 year ago

LZ4 v1.9.4 is a maintenance release, featuring a substantial amount (~350 commits) of minor fixes and improvements, making it a recommended upgrade. The stable portion of liblz4 API is unmodified, making this release a drop-in replacement for existing features.

Improved decompression speed

Performance wasn't a major focus of this release, but there are nonetheless a few improvements worth mentioning :

- Decompression speed on high-end `ARM64` platform is improved, by ~+20%. This is notably the case for recent M1 chips, featured in macbook laptops and nucs. Some server-class ARM64 cpus are also impacted, most notably when employing `gcc` as a compiler. Due to the diversity of `aarch64` chips in service, it's still difficult to have a one-size-fits-all policy for this platform.

- For the specific scenario of data compressed with `-BD4` setting (small blocks, <= 64 KB, linked) decompressed block-by-block into a flush buffer, decompression speed is improved ~+70%. This is most visible in the `lz4` CLI, which triggers this exact scenario, but since the improvement is achieved at library level, it may also apply to other scenarios.

- Additionally, for compressed data employing the `lz4frame` format (native format of `lz4` CLI), it's possible to ignore checksum validation during decompression, resulting in speed improvements of ~+40%. This capability is exposed at both CLI (see `--no-crc`) and library levels.

New experimental library capabilities

New liblz4 capabilities are provided in this version. They are considered experimental at this stage, and the most useful ones will be upgraded as candidate "stable" status in an upcoming release :

- Ability to require `lz4frame` API to employ custom allocators for dynamic allocation.

- Partial decompression of LZ4 blocks compressed with a dictionary, using `LZ4_decompress_safe_partial_usingDict()` by @yawqi

- Create `lz4frame` blocks which are intentionally uncompressed, using `LZ4F_uncompressedUpdate()`, by @alexmohr

- New API unit `lz4file`, abstracting File I/O operations for higher-level programs and libraries, by @anjiahao1

- `liblz4` can be built for freestanding environments, using the new build macro `LZ4_FREESTANDING`, by @t-mat. In which case, it will not link to any standard library, disable all dynamic allocations, and rely on user-provided `memcpy()` and `memset()` operations.
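To give an idea of what user-provided `memcpy()` and `memset()` operations can look like in a freestanding build, here is a minimal sketch of byte-wise replacements. The `user_*` names are hypothetical, and the wiring shown in the trailing comment (macros `LZ4_memcpy`, `LZ4_memmove` and `LZ4_memset`) is an assumption based on the notes in `lz4.h`; check the header for the exact requirements of your version.

```c
#include <stddef.h>  /* size_t only; this header exists even in freestanding C */

/* Hypothetical user-provided copy routine (name is illustrative). */
void *user_memcpy(void *dst, const void *src, size_t n)
{
    unsigned char *d = (unsigned char *)dst;
    const unsigned char *s = (const unsigned char *)src;
    while (n--) *d++ = *s++;
    return dst;
}

/* Overlap-safe variant: copies forward or backward depending on layout. */
void *user_memmove(void *dst, const void *src, size_t n)
{
    unsigned char *d = (unsigned char *)dst;
    const unsigned char *s = (const unsigned char *)src;
    if (d < s) {
        while (n--) *d++ = *s++;      /* forward copy */
    } else if (d > s) {
        d += n;
        s += n;
        while (n--) *--d = *--s;      /* backward copy, safe when dst overlaps the end of src */
    }
    return dst;
}

/* Hypothetical user-provided fill routine. */
void *user_memset(void *dst, int value, size_t n)
{
    unsigned char *d = (unsigned char *)dst;
    while (n--) *d++ = (unsigned char)value;
    return dst;
}

/* Assumed wiring, given on the compiler command line when building lz4.c:
 *   -DLZ4_FREESTANDING=1
 *   -DLZ4_memcpy=user_memcpy -DLZ4_memmove=user_memmove -DLZ4_memset=user_memset
 */
```

Freestanding builds usually also pass options such as `-ffreestanding`, so that the compiler does not turn these loops back into calls to the standard library.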

Miscellaneous

- Fixed an annoying `Makefile` bug introduced in `v1.9.3`, in which `CFLAGS` was no longer respected when provided from environment variable. The root cause was an obscure bug in `make`, which has been fixed upstream following this bug report. There is no need to update `make` to build `liblz4` though, the `Makefile` has been modified to circumvent the issue and remains compatible with older versions of `make`.

- `Makefile` is compatible with `-j` parallel run, including to run parallel tests (`make -j test`).

- Documentation of LZ4 Block format has been updated, featuring notably a paragraph "Implementation notes", underlining common pitfalls for new implementers of the format

Changes list

Here is a more detailed list of updates introduced in v1.9.4 :

- perf : faster decoding speed (~+20%) on Apple Silicon platforms, by @zeux

- perf : faster decoding speed (~+70%) for `-BD4` setting in CLI

- api : new function `LZ4_decompress_safe_partial_usingDict()` by @yawqi

- api : `lz4frame`: ability to provide custom allocators at state creation

- api : can skip checksum validation for improved decoding speed

- api : new experimental unit `lz4file` for file i/o API, by @anjiahao1

- api : new experimental function `LZ4F_uncompressedUpdate()`, by @alexmohr

- cli : `--list` works on `stdin` input, by @Low-power

- cli : `--no-crc` does not produce (compression) nor check (decompression) checksums

- cli : fix: `--test` and `--list` produce an error code when parsing invalid input

- cli : fix: `--test -m` no longer creates decompressed file artifacts

- cli : fix: support skippable frames when passed via `stdin`, reported by @davidmankin

- build: fix: `Makefile` respects `CFLAGS` directives passed via environment variable

- build: `LZ4_FREESTANDING`, new build macro for freestanding environments, by @t-mat

- build: `make` and `make test` are compatible with `-j` parallel run

- build: AS/400 compatibility, by @jonrumsey

- build: Solaris 10 compatibility, by @pekdon

- build: MSVC 2022 support, by @t-mat

- build: improved meson script, by @eli-schwartz

- doc : Updated LZ4 block format, provide an "implementation notes" section

New Contributors

- @emaxerrno made their first contribution in https://github.com/lz4/lz4/pull/884

- @servusdei2018 made their first contribution in https://github.com/lz4/lz4/pull/886

- @aqrit made their first contribution in https://github.com/lz4/lz4/pull/898

- @attilaolah made their first contribution in https://github.com/lz4/lz4/pull/919

- @XVilka made their first contribution in https://github.com/lz4/lz4/pull/922

- @hmaarrfk made their first contribution in https://github.com/lz4/lz4/pull/962

- @ThomasWaldmann made their first contribution in https://github.com/lz4/lz4/pull/965

- @sigiesec made their first contribution in https://github.com/lz4/lz4/pull/964

- @klebertarcisio made their first contribution in https://github.com/lz4/lz4/pull/973

- @jasperla made their first contribution in https://github.com/lz4/lz4/pull/972

- @GabeNI made their first contribution in https://github.com/lz4/lz4/pull/1001

- @ITotalJustice made their first contribution in https://github.com/lz4/lz4/pull/1005

- @lifegpc made their first contribution in https://github.com/lz4/lz4/pull/1000

- @eloj made their first contribution in https://github.com/lz4/lz4/pull/1011

- @pekdon made their first contribution in https://github.com/lz4/lz4/pull/999

- @fanzeyi made their first contribution in https://github.com/lz4/lz4/pull/1017

- @a1346054 made their first contribution in https://github.com/lz4/lz4/pull/1024

- @kmou424 made their first contribution in https://github.com/lz4/lz4/pull/1026

- @kostasdizas made their first contribution in https://github.com/lz4/lz4/pull/1030

- @fwessels made their first contribution in https://github.com/lz4/lz4/pull/1032

- @zeux made their first contribution in https://github.com/lz4/lz4/pull/1040

- @DimitriPapadopoulos made their first contribution in https://github.com/lz4/lz4/pull/1042

- @mcfi made their first contribution in https://github.com/lz4/lz4/pull/1054

- @eli-schwartz made their first contribution in https://github.com/lz4/lz4/pull/1049

- @leonvictor made their first contribution in https://github.com/lz4/lz4/pull/1052

- @tristan957 made their first contribution in https://github.com/lz4/lz4/pull/1064

- @anjiahao1 made their first contribution in https://github.com/lz4/lz4/pull/1068

- @danyeaw made their first contribution in https://github.com/lz4/lz4/pull/1075

- @yawqi made their first contribution in https://github.com/lz4/lz4/pull/1093

- @nathannaveen made their first contribution in https://github.com/lz4/lz4/pull/1088

- @alexmohr made their first contribution in https://github.com/lz4/lz4/pull/1094

- @yoniko made their first contribution in https://github.com/lz4/lz4/pull/1100

- @jonrumsey made their first contribution in https://github.com/lz4/lz4/pull/1104

- @dpelle made their first contribution in https://github.com/lz4/lz4/pull/1125

- @SpaceIm made their first contribution in https://github.com/lz4/lz4/pull/1133

v1.9.3

3 years ago

LZ4 v1.9.3 is a maintenance release, offering more than 200 commits to fix multiple corner cases and build scenarios. Update is recommended. Existing liblz4 API is not modified, so it should be a drop-in replacement.

Faster Windows binaries

On the build side, multiple rounds of improvements, thanks to contributors such as @wolfpld and @remittor, make this version generate faster binaries for Visual Studio. It is also expected to better support a broader range of VS variants.

Speed benefits can be substantial. For example, on my laptop, compared with v1.9.2, this version built with VS2019 compresses at 640 MB/s (from 420 MB/s), and decompression reaches 3.75 GB/s (from 3.3 GB/s). So this is definitely perceptible.

Other notable updates

Among the visible fixes, this version improves the _destSize() variant, an advanced API which reverses the logic by targeting an a-priori compressed size and trying to shove as much data as possible into the target budget. The high compression variant LZ4_compress_HC_destSize() would miss some important opportunities in highly compressible data, resulting in less than optimal compression (detected by @hsiangkao). This is fixed in this version. Even the "fast" variant receives some gains (albeit very small).

Also, the corresponding decompression function, LZ4_decompress_safe_partial(), officially supports a scenario where the input (compressed) size is unknown (but bounded), as long as the requested amount of data to regenerate is smaller than or equal to the block's content. This function used to require the exact compressed size, and would sometimes support the above scenario "by accident", but then could also break it by accident. This is now firmly controlled, documented and tested.

Finally, replacing memory functions (malloc(), calloc(), free()), typically for freestanding environments, is now a bit easier. It used to require a small direct modification of lz4.c source code, but can now be achieved by using the build macro LZ4_USER_MEMORY_FUNCTIONS at compilation time. In which case, liblz4 no longer includes <stdlib.h>, and requires instead that functions LZ4_malloc(), LZ4_calloc() and LZ4_free() are implemented somewhere in the project, and then available at link time.
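As an illustration of what a project might supply when building with `LZ4_USER_MEMORY_FUNCTIONS`, the sketch below backs the three functions with a fixed-size bump arena that needs no `<stdlib.h>`. The signatures are assumed to match the declarations in `lz4.c`; the arena size, the 16-byte alignment and the `arena_reset()` helper are choices made for this example only, and a real project would size the arena for the states it actually allocates.

```c
#include <stddef.h>

#define ARENA_SIZE  ((size_t)64 * 1024)   /* illustrative capacity */
#define ARENA_ALIGN ((size_t)16)          /* illustrative alignment */

static _Alignas(16) unsigned char g_arena[ARENA_SIZE];
static size_t g_arena_used = 0;

/* Hands out the next aligned slice of the arena, or NULL when it is full. */
void *LZ4_malloc(size_t size)
{
    size_t rounded;
    void *p;
    if (size == 0 || size > ARENA_SIZE) return NULL;
    rounded = (size + (ARENA_ALIGN - 1)) & ~(ARENA_ALIGN - 1);
    if (rounded > ARENA_SIZE - g_arena_used) return NULL;
    p = g_arena + g_arena_used;
    g_arena_used += rounded;
    return p;
}

/* Same as LZ4_malloc(), with an overflow check and explicit zeroing. */
void *LZ4_calloc(size_t count, size_t size)
{
    unsigned char *p;
    size_t total, i;
    if (size != 0 && count > (size_t)-1 / size) return NULL;
    total = count * size;
    p = (unsigned char *)LZ4_malloc(total);
    if (p == NULL) return NULL;
    for (i = 0; i < total; i++) p[i] = 0;
    return p;
}

/* Bump allocator: individual blocks are never reclaimed. */
void LZ4_free(void *p)
{
    (void)p;
}

/* Hypothetical helper: releases everything at once. */
void arena_reset(void)
{
    g_arena_used = 0;
}
```

The library would then be compiled with `-DLZ4_USER_MEMORY_FUNCTIONS`, with this file linked into the same program.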

Changes list

Here is a more detailed list of updates introduced in v1.9.3 :

- perf: highly improved speed in kernel space, by @terrelln

- perf: faster speed with Visual Studio, thanks to @wolfpld and @remittor

- perf: improved dictionary compression speed, by @felixhandte

- perf: fixed `LZ4_compress_HC_destSize()` ratio, detected by @hsiangkao

- perf: reduced stack usage in high compression mode, by @Yanpas

- api : `LZ4_decompress_safe_partial()` supports unknown compressed size, requested by @jfkthame

- api : improved `LZ4F_compressBound()` with automatic flushing, by Christopher Harvie

- api : can (de)compress to/from NULL without UBs

- api : fix alignment test on 32-bit systems (state initialization)

- api : fix `LZ4_saveDictHC()` in corner case scenario, detected by @IgorKorkin

- cli : compress multiple files using the legacy format, by Filipe Calasans

- cli : benchmark mode supports dictionary, by @rkoradi

- cli : fix `--fast` with large argument, detected by @picoHz

- build: link to user-defined memory functions with `LZ4_USER_MEMORY_FUNCTIONS`

- build: `contrib/cmake_unofficial/` moved to `build/cmake/`

- build: `visual/*` moved to `build/`

- build: updated meson script, by @neheb

- build: tinycc support, by Anton Kochkov

- install: Haiku support, by Jerome Duval

- doc : updated LZ4 frame format, clarify EndMark

Known issues :

- Some people have reported a broken `liblz4_static.lib` file in the package `lz4_win64_v1_9_3.zip`. This is probably a `mingw` / `msvc` compatibility issue. If you have issues employing this file, the solution is to rebuild it locally from sources with your target compiler.

- The standard `Makefile` in `v1.9.3` doesn't honor `CFLAGS` when passed through environment variable. This is fixed in a more recent version on the `dev` branch. See #958 for details.

v1.9.2

4 years ago

This is primarily a bugfix release, driven by the bugs found and fixed since LZ4's recent integration into Google's oss-fuzz, initiated by @cmeister2. The new capability was put to good use by @terrelln, dramatically expanding the number of scenarios covered by the profile-guided fuzzer. These scenarios were already covered by unguided fuzzers, but a few bugs require a large combination of factors that unguided fuzzers are unable to produce in a reasonable timeframe.

Due to these fixes, an upgrade of LZ4 to its latest version is recommended.

- fix : out-of-bound read in exceptional circumstances when using `decompress_partial()`, by @terrelln

- fix : slim opportunity for out-of-bound write with `compress_fast()` with a large enough input and when providing an output smaller than recommended (`< LZ4_compressBound(inputSize)`), by @terrelln

- fix : rare data corruption bug with `LZ4_compress_destSize()`, by @terrelln

- fix : data corruption bug when Streaming with an Attached Dict in HC Mode, by @felixhandte

- perf: enable `LZ4_FAST_DEC_LOOP` on aarch64/GCC by default, by @prekageo

- perf: improved `lz4frame` streaming API speed, by @dreambottle

- perf: speed up `lz4hc` on slow patterns when using external dictionary, by @terrelln

- api: better in-place decompression and compression support

- cli : `--list` supports multi-frames files, by @gstedman

- cli: `--version` outputs to `stdout`

- cli : add option `--best` as an alias of `-12`, by @Low-power

- misc: Integration into `oss-fuzz` by @cmeister2, expanded list of scenarios by @terrelln
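The "recommended" output size mentioned in the `compress_fast()` fix above is the worst-case bound returned by `LZ4_compressBound()`. The sketch below re-implements that bound as a standalone function, following the `LZ4_COMPRESSBOUND` macro as published in `lz4.h` (input size + input size / 255 + 16, or 0 above `LZ4_MAX_INPUT_SIZE`). The `sketch_` and `SKETCH_` names are made up for this example; real code should simply call `LZ4_compressBound()`.

```c
/* Mirrors LZ4_MAX_INPUT_SIZE from lz4.h (value assumed stable across 1.9.x). */
#define SKETCH_MAX_INPUT_SIZE 0x7E000000

/* Worst-case compressed size for input_size bytes, or 0 if the input is too large. */
int sketch_compress_bound(int input_size)
{
    if (input_size < 0 || input_size > SKETCH_MAX_INPUT_SIZE) return 0;
    return input_size + input_size / 255 + 16;
}

/* Returns 1 when dst_capacity is at least the worst-case bound, 0 otherwise. */
int sketch_output_is_safe(int input_size, int dst_capacity)
{
    int bound = sketch_compress_bound(input_size);
    return bound != 0 && dst_capacity >= bound;
}
```

For a 64 KB input, for instance, a destination buffer of exactly 64 KB is below the bound, which is the kind of undersized output the fix concerns.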

v1.9.1

5 years ago

This is a point release, whose main objective is to fix a read out-of-bound issue reported in the decoder of v1.9.0. Upgrading from that version is recommended.

A few other improvements were also merged during this time frame (listed below).

A visible user-facing one is the introduction of a new command --list, started by @gabrielstedman, which makes it possible to peek at the internals of a .lz4 file. It will provide the block type, checksum information, compressed and decompressed sizes (if present). The command is limited to single-frame files for the time being.

Changes

- fix : decompression functions were reading a few bytes beyond input size (introduced in v1.9.0, reported by @ppodolsky and @danlark1)

- api : fix : lz4frame initializers compatibility with c++, reported by @degski

- cli : added command `--list`, based on a patch by @gabrielstedman

- build: improved Windows build, by @JPeterMugaas

- build: AIX, by Norman Green

Note : this release has an issue when compiling liblz4 dynamic library on Mac OS-X. This issue is fixed in : https://github.com/lz4/lz4/pull/696 .

v1.9.0

5 years ago

Warning : this version has a known bug in the decompression function which makes it read a few bytes beyond input limit. Upgrade to v1.9.1 is recommended.

LZ4 v1.9.0 is a performance focused release, also offering minor API updates.

Decompression speed improvements

Dave Watson (@djwatson) managed to carefully optimize the LZ4 decompression hot loop, offering substantial speed improvements on x86 and x64 platforms.

Here are some benchmarks run on a Core i7-9700K, with source compiled using gcc v8.2.0 on Ubuntu 18.10 "Cosmic Cuttlefish" (Linux 4.18.0-17-generic) :

| Version | v1.8.3 | v1.9.0 | Improvement |
|---|---|---|---|
| enwik8 | 4090 MB/s | 4560 MB/s | +12% |
| calgary.tar | 4320 MB/s | 4860 MB/s | +13% |
| silesia.tar | 4210 MB/s | 4970 MB/s | +18% |

Given that decompression speed has always been a strong point of lz4, the improvement is quite substantial.

The new decoding loop is automatically enabled on x64 and x86.

For other cpu types, since our testing capabilities are more limited, the new decoding loop is disabled by default. However, anyone can manually enable it, by using the build macro LZ4_FAST_DEC_LOOP, which accepts values 0 or 1. The outcome will vary depending on exact target and build chains. For example, in our limited tests with ARM platforms, we found that benefits vary strongly depending on cpu manufacturer, chip model, and compiler version, making it difficult to offer a "generic" statement. ARM situation may prove extreme though, due to the proliferation of variants available. Other cpu types may prove easier to assess.
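As a rough illustration of how such a 0/1 build macro behaves, the sketch below keeps any value supplied by the build (for example `-DLZ4_FAST_DEC_LOOP=1` on the compiler command line) and otherwise picks a default from the target architecture. It is not the actual logic in `lz4.c`; the architecture tests and the helper `fast_dec_loop_enabled()` are assumptions made for this example.

```c
/* Keep any value supplied by the build (e.g. -DLZ4_FAST_DEC_LOOP=1);
 * otherwise enable the new loop only on x86 / x64, as described above. */
#ifndef LZ4_FAST_DEC_LOOP
#  if defined(__i386__) || defined(_M_IX86) || defined(__x86_64__) || defined(_M_X64)
#    define LZ4_FAST_DEC_LOOP 1
#  else
#    define LZ4_FAST_DEC_LOOP 0
#  endif
#endif

/* Hypothetical helper: reports which decoding loop this build selected. */
int fast_dec_loop_enabled(void)
{
#if LZ4_FAST_DEC_LOOP
    return 1;
#else
    return 0;
#endif
}
```

Since the outcome depends on the exact target and build chain, it is worth benchmarking both settings before forcing the macro on a non-x86 platform.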

API updates

_destSize()

The _destSize() compression variants have been promoted to stable status.

These variants reverse the logic, by trying to fit as much input data as possible into a fixed memory budget. This is used for example in WiredTiger and EroFS, which cram as much data as possible into the size of a physical sector, for improved storage density.

reset*_fast()

When compressing small inputs, the fixed cost of clearing the compression's internal data structures can become a significant fraction of the compression cost. In v1.8.2, new LZ4 entry points have been introduced to perform this initialization at effectively zero cost. LZ4_resetStream_fast() and LZ4_resetStreamHC_fast() are now promoted into stable.

They are supplemented by new entry points, LZ4_initStream() and its corresponding HC variant, which must be used on any uninitialized memory segment that will be converted into an LZ4 state. After that, only reset*_fast() is needed to start some new compression job re-using the same context. This proves especially effective when compressing a lot of small data.

deprecation

The decompress*_fast() variants have been moved into the deprecate section.

While they offer slightly faster decompression speed (~+5%), they are also unprotected against malicious inputs, resulting in security liability. There are some limited cases where this property could prove acceptable (perfectly controlled environment, same producer / consumer), but in most cases, the risk is not worth the benefit.

We want to discourage such usage as clearly as possible, by pushing the _fast() variant into deprecation area.

For the time being, they will not yet generate deprecation warnings when invoked, to give time to existing applications to move towards decompress*_safe(). But this is the next stage, and is likely to happen in a future release.

LZ4_resetStream() and LZ4_resetStreamHC() have also been moved into the deprecate section, to emphasize the preference towards LZ4_resetStream_fast(). Their real equivalents are actually LZ4_initStream() and LZ4_initStreamHC(), which are more generic (can accept any memory area to initialize) and safer (control size and alignment). Also, the naming makes it clearer when to use initStream() and when to use resetStream_fast().

Changes list

This release brings an assortment of small improvements and bug fixes, as detailed below :

- perf: large decompression speed improvement on x86/x64 (up to +20%) by @djwatson

- api : changed : `_destSize()` compression variants are promoted to stable API

- api : new : `LZ4_initStream(HC)`, replacing `LZ4_resetStream(HC)`

- api : changed : `LZ4_resetStream(HC)` as recommended reset function, for better performance on small data

- cli : support custom block sizes, by @blezsan

- build: source code can be amalgamated, by Bing Xu

- build: added meson build, by @lzutao

- build: new build macros : `LZ4_DISTANCE_MAX`, `LZ4_FAST_DEC_LOOP`

- install: MidnightBSD, by @laffer1

- install: msys2 on Windows 10, by @vtorri

v1.8.3

5 years ago

This is a maintenance release, mainly triggered by issue #560.

#560 is a data corruption that can only occur in v1.8.2, at level 9 (only), for some "large enough" data blocks (> 64 KB), featuring a fairly specific data pattern, improbable enough that multiple CPUs running various fuzzers non-stop during a period of several weeks were not able to find it. Big thanks to @Pashugan for finding and sharing a reproducible sample.

Due to this fix, v1.8.3 is a recommended update.

A few other minor features were already merged, and are therefore bundled in this release too.

Should lz4 prove too slow, it's now possible to invoke the --fast=# command, by @jennifermliu. This is equivalent to the acceleration parameter in the API, in which the user forfeits some compression ratio for the benefit of better speed.

The verbose CLI has been fixed, and now displays the real amount of time spent compressing (instead of cpu time). It also shows a new indicator, cpu load %, so that users can determine if the limiting factor was cpu or I/O bandwidth.

Finally, an existing function, LZ4_decompress_safe_partial(), has been enhanced to make it possible to decompress only the beginning of an LZ4 block, up to a specified number of bytes. Partial decoding can be useful to save CPU time and memory, when the objective is to extract a limited portion from a larger block.

v1.8.2

5 years ago

LZ4 v1.8.2 is a performance focused release, featuring important improvements for small inputs, especially when coupled with dictionary compression.

General speed improvements

LZ4 decompression speed has always been a strong point. In v1.8.2, this gets even better, as it improves decompression speed by about 10%, thanks in large part to a suggestion from @svpv.

For example, on a Mac OS-X laptop with an Intel Core i7-5557U CPU @ 3.10GHz, running lz4 -b silesia.tar compiled with default compiler llvm v9.1.0:

| Version | v1.8.1 | v1.8.2 | Improvement |
|---|---|---|---|
| Decompression speed | 2490 MB/s | 2770 MB/s | +11% |

Compression speeds also receive a welcome boost, though improvement is not evenly distributed, with higher levels benefiting quite a lot more.

| Version | v1.8.1 | v1.8.2 | Improvement |
|---|---|---|---|
| lz4 -1 | 504 MB/s | 516 MB/s | +2% |
| lz4 -9 | 23.2 MB/s | 25.6 MB/s | +10% |
| lz4 -12 | 3.5 MB/s | 9.5 MB/s | +170% |

Should you aim for best possible decompression speed, it's possible to request LZ4 to actively favor decompression speed, even if it means sacrificing some compression ratio in the process. This can be requested in a variety of ways depending on interface, such as using command --favor-decSpeed on CLI. This option must be combined with ultra compression mode (levels 10+), as it needs careful weighting of multiple solutions, which only this mode can process.

The resulting compressed object always decompresses faster, but is also larger. Your mileage will vary, depending on file content. Speed improvement can be as low as 1%, and as high as 40%. It's matched by a corresponding file size increase, which tends to be proportional. The general expectation is 10-20% faster decompression speed for 1-2% bigger files.

| Filename | decompression speed | `--favor-decSpeed` | Speed Improvement | Size change |
|---|---|---|---|---|
| silesia.tar | 2870 MB/s | 3070 MB/s | +7 % | +1.45% |
| dickens | 2390 MB/s | 2450 MB/s | +2 % | +0.21% |
| nci | 3740 MB/s | 4250 MB/s | +13 % | +1.93% |
| osdb | 3140 MB/s | 4020 MB/s | +28 % | +4.04% |
| xml | 3770 MB/s | 4380 MB/s | +16 % | +2.74% |

Finally, variant LZ4_compress_destSize() also receives a ~10% speed boost, since it now internally redirects toward primary internal implementation of LZ4 fast mode, rather than relying on a separate custom implementation. This allows it to take advantage of all the optimization work that has gone into the main implementation.

Compressing small contents

When compressing small inputs, the fixed cost of clearing the compression's internal data structures can become a significant fraction of the compression cost. This release adds a new way, under certain conditions, to perform this initialization at effectively zero cost.

New, experimental LZ4 APIs have been introduced to take advantage of this functionality in block mode:

- `LZ4_resetStream_fast()`

- `LZ4_compress_fast_extState_fastReset()`

- `LZ4_resetStreamHC_fast()`

- `LZ4_compress_HC_extStateHC_fastReset()`

More detail about how and when to use these functions is provided in their respective headers.

LZ4 Frame mode has been modified to use this faster reset whenever possible. LZ4F_compressFrame_usingCDict() prototype has been modified to additionally take an LZ4F_CCtx* context, so it can use this speed-up.

Efficient Dictionary compression

Support for dictionaries has been improved in a similar way: they can now be used in-place, which avoids the expense of copying the context state from the dictionary into the working context. Users can expect to see a noticeable performance improvement for small data.

Experimental prototypes (LZ4_attach_dictionary() and LZ4_attach_HC_dictionary()) have been added to the LZ4 block API, to use a loaded dictionary in-place. LZ4 Frame API users should benefit from this optimization transparently.

The previous two changes, when taken advantage of, can provide meaningful performance improvements when compressing small data. Both changes have no impact on the produced compressed data. The only observable difference is speed.

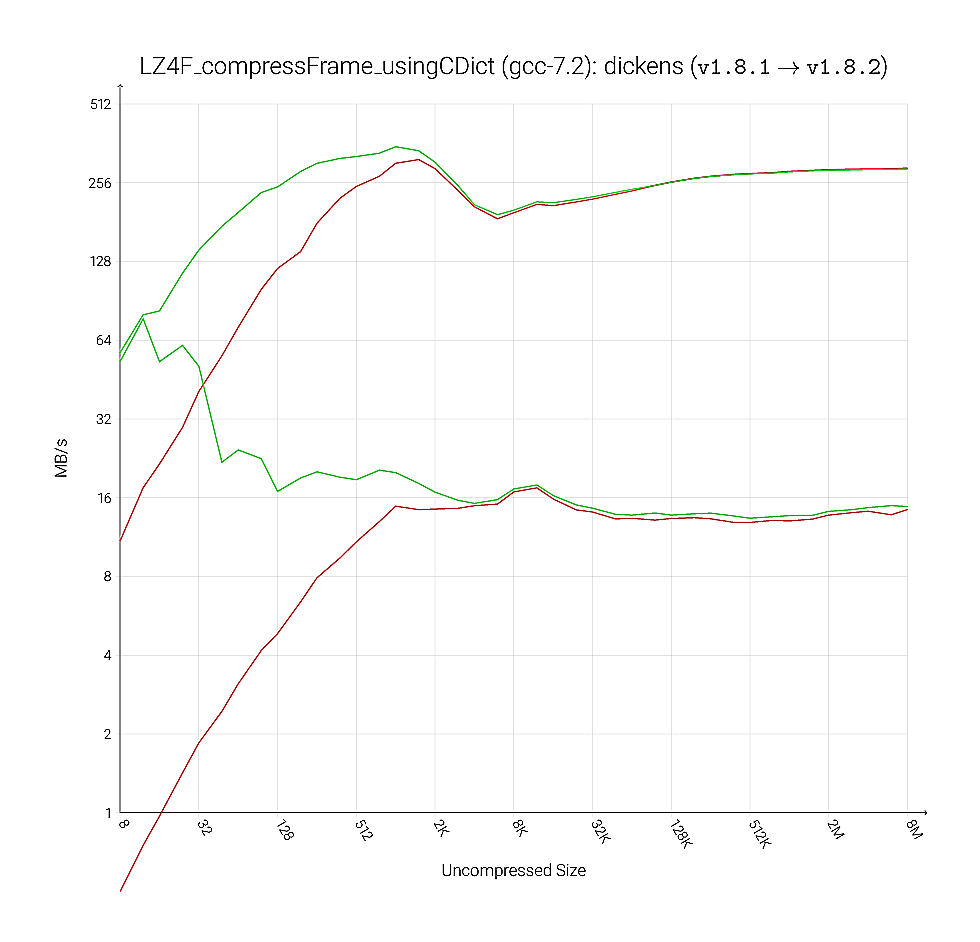

This is a representative graphic of the sort of speed boost to expect. The red lines are the speeds seen for an input blob of the specified size, using the previous LZ4 release (v1.8.1) at compression levels 1 and 9 (those being fast mode and default HC level). The green lines are the equivalent observations for v1.8.2. This benchmark was performed on the Silesia Corpus. Results for the dickens text are shown; other texts and compression levels saw similar improvements. The benchmark was compiled with GCC 7.2.0 with -O3 -march=native -mtune=native -DNDEBUG under Linux 4.6 and run on an Intel Xeon CPU E5-2680 v4 @ 2.40GHz.

lz4frame_static.h Deprecation

The content of lz4frame_static.h has been folded into lz4frame.h, hidden by a macro guard "#ifdef LZ4F_STATIC_LINKING_ONLY". This means lz4frame.h now matches lz4.h and lz4hc.h. lz4frame_static.h is retained as a shell that simply sets the guard macro and includes lz4frame.h.

Changes list

This release also brings an assortment of small improvements and bug fixes, as detailed below :

- perf: faster compression on small files, by @felixhandte

- perf: improved decompression speed and binary size, by Alexey Tourbin (@svpv)

- perf: faster HC compression, especially at max level

- perf: very small compression ratio improvement

- fix : compression compatible with low memory addresses (`< 0xFFFF`)

- fix : decompression segfault when provided with `NULL` input, by @terrelln

- cli : new command `--favor-decSpeed`

- cli : benchmark mode more accurate for small inputs

- fullbench : can bench `_destSize()` variants, by @felixhandte

- doc : clarified block format parsing restrictions, by Alexey Tourbin (@svpv)

v1.8.1.2

6 years ago

LZ4 v1.8.1's most visible new feature is its support for Dictionary compression.

This was already somewhat possible, but in a complex way, requiring knowledge of internal working.

Support is now more formally added on the API side within lib/lz4frame_static.h. It's early days, and this new API is tagged "experimental" for the time being.

Support is also added in the command line utility lz4, using the new command -D, implemented by @felixhandte. The behavior of this command is identical to zstd, should you be already familiar.

lz4 doesn't specify how to build a dictionary. All it says is that it can be any file up to 64 KB.

This approach is compatible with zstd dictionary builder, which can be instructed to create a 64 KB dictionary with this command :

zstd --train dirSamples/* -o dictName --maxdict=64KB

LZ4 v1.8.1 also offers improved performance at ultra settings (levels 10+).

These levels receive a new code, called optimal parser, available in lib/lz4_opt.h.

Compared with previous version, the new parser uses less memory (from 384KB to 256KB), performs faster, compresses a little bit better (not much, as it was already close to theoretical limit), and resists pathological patterns which could destroy performance (see #339).

For comparison, here is a quick benchmark using LZ4 v1.8.0 on my laptop with silesia.tar :

./lz4 -b9e12 -v ~/dev/bench/silesia.tar

*** LZ4 command line interface 64-bits v1.8.0, by Yann Collet ***

Benchmarking levels from 9 to 12

9#silesia.tar : 211984896 -> 77897777 (2.721), 24.2 MB/s ,2401.8 MB/s

10#silesia.tar : 211984896 -> 77852187 (2.723), 16.9 MB/s ,2413.7 MB/s

11#silesia.tar : 211984896 -> 77435086 (2.738), 7.1 MB/s ,2425.7 MB/s

12#silesia.tar : 211984896 -> 77274453 (2.743), 3.3 MB/s ,2390.0 MB/s

and now using LZ4 v1.8.1 :

./lz4 -b9e12 -v ~/dev/bench/silesia.tar

*** LZ4 command line interface 64-bits v1.8.1, by Yann Collet ***

Benchmarking levels from 9 to 12

9#silesia.tar : 211984896 -> 77890594 (2.722), 24.4 MB/s ,2405.2 MB/s

10#silesia.tar : 211984896 -> 77859538 (2.723), 19.3 MB/s ,2476.0 MB/s

11#silesia.tar : 211984896 -> 77369725 (2.740), 10.1 MB/s ,2478.4 MB/s

12#silesia.tar : 211984896 -> 77270146 (2.743), 3.7 MB/s ,2508.3 MB/s

The new parser is also directly compatible with lower compression levels, which brings additional benefits :

- Compatibility with `LZ4_*_destSize()` variant, which reverses the logic by trying to fit as much data as possible into a predefined limited size buffer.

- Compatibility with Dictionary compression, as it uses the same tables as regular HC mode

In the future, this compatibility will also allow dynamic on-the-fly change of compression level, but such feature is not implemented at this stage.

The release also provides a set of small bug fixes and improvements, listed below :

- perf : faster and stronger ultra modes (levels 10+)

- perf : slightly faster compression and decompression speed

- perf : fix bad degenerative case, reported by @c-morgenstern

- fix : decompression failed when using a combination of extDict + low memory address (#397), reported and fixed by Julian Scheid (@jscheid)

- cli : support for dictionary compression (`-D`), by Felix Handte @felixhandte

- cli : fix : `lz4 -d --rm` preserves timestamp (#441)

- cli : fix : do not modify `/dev/null` permission as root, by @aliceatlas

- api : new dictionary api in `lib/lz4frame_static.h`

- api : `_destSize()` variant supported for all compression levels

- build : `make` and `make test` compatible with parallel build `-jX`, reported by @mwgamera

- build : can control `LZ4LIB_VISIBILITY` macro, by @mikir

- install: fix man page directory (#387), reported by Stuart Cardall (@itoffshore)

Note : v1.8.1.2 is the same as v1.8.1, with the version number fixed in source code, as notified by Po-Chuan Hsieh (@sunpoet).

v1.8.1

6 years ago

Prefer using v1.8.1.2.

It's the same as v1.8.1, but the version number in source code has been fixed, thanks to @sunpoet.

The version number is used in cli and documentation display, to create the full name of dynamic library, and can be requested via LZ4_versionNumber().

v1.8.0

6 years ago

cli : fix : do not modify /dev/null permissions, reported by @Maokaman1

cli : added GNU separator -- specifying that all following arguments are only files

cli : restored -BX command enabling block checksum

API : added LZ4_compress_HC_destSize(), by @remittor

API : added LZ4F_resetDecompressionContext()

API : lz4frame : negative compression levels trigger fast acceleration, request by @llchan

API : lz4frame : can control block checksum and dictionary ID

API : fix : expose obsolete decoding functions, reported by @cyfdecyf

API : experimental : lz4frame_static.h : new dictionary compression API

build : fix : static lib installation, by @ido

build : dragonFlyBSD, OpenBSD, NetBSD supported

build : LZ4_MEMORY_USAGE can be modified at compile time, through external define

doc : Updated LZ4 Frame format to v1.6.0, restoring Dictionary-ID field in header

doc : lz4's API manual in .html format, by @inikep