Dogen Versions Save

Reference implementation of the MASD Code Generator.

v1.0.32

1 year ago Baía Azul, Benguela, Angola. (C) 2022 Casal Sampaio.

Baía Azul, Benguela, Angola. (C) 2022 Casal Sampaio.

DRAFT: Release notes under construction

Introduction

As expected, going back into full time employment has had a measurable impact on our open source throughput. If to this one adds the rather noticeable PhD hangover — there were far too many celebratory events to recount — it is perhaps easier to understand why it took nearly four months to nail down the present release. That said, it was a productive effort when measured against its goals. Our primary goal was to finish the CI/CD work commenced the previous sprint. This we duly achieved, though you won't be surprised to find out it was far more involved than anticipated. So much so that the, ahem, final touches, have spilled over to the next sprint. Our secondary goal was to resume tidying up the LPS (Logical-Physical Space), but here too we soon bumped into a hurdle: Dogen's PlantUML output was not fit for purpose, so the goal quickly morphed into diagram improvement. Great strides were made in this new front but, as always, progress was hardly linear; to cut a very long story short, when we were half-way through the ask, we got lost on yet another architectural rabbit hole. A veritable Christmas Tale of a sprint it was, though we are not entirely sure on the moral of the story. Anyway, grab yourself that coffee and let's dive deep into the weeds.

User visible changes

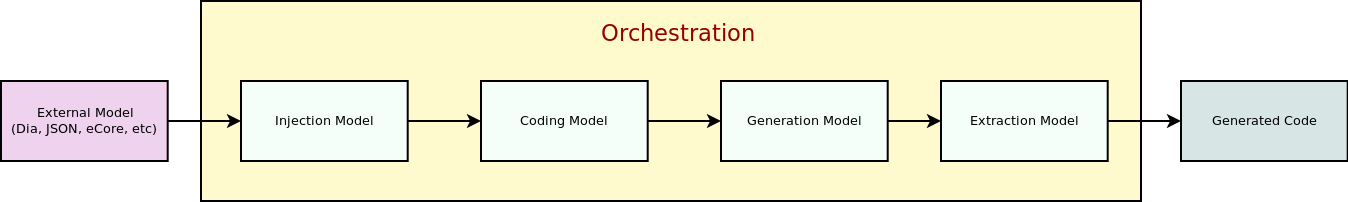

This section covers stories that affect end users, with the video providing a quick demonstration of the new features, and the sections below describing them in more detail. Given the stories do not require that much of a demo, we discuss their implications in terms of the Domain Architecture.

Remove Dia and JSON support

The major user facing story this sprint is the deprecation of two of our three codecs, Dia and JSON, and, somewhat more dramatically, the eradication of the entire notion of "codec" as it stood thus far. Such a drastic turn of events demands an in-depth explanation, so you'll have to bear with us. Lets start our journey with an historical overview.

It wasn't that long ago that "codecs" took the place of the better-known "injectors". Going further back in time, injectors themselves emerged from a refactor of the original "frontends", a legacy of the days when we viewed Dogen more like a traditional compiler. "Frontend" implies a unidirectional transformation and belongs to the compiler domain rather than MDE, so the move to injectors was undoubtedly a step in the right direction. Alas, as the release notes tried to explain then (section "Rename injection to codec"), we could not settle on this term because Dogen's injectors did not behave like "proper" MDE injectors, as defined in the MDE companion notes (p. 32):

In [Béz+03], Bézivin et al. outlines their motivation [for the creation of Technical Spaces (TS)]: ”The notion of TS allows us to deal more efficiently with the ever-increasing complexity of evolving technologies. There is no uniformly superior technology and each one has its strong and weak points.” The idea is then to engineer bridges between technical spaces, allowing the importing and exporting of artefacts across them. These bridges take the form of adaptors called ”projectors”, as Bézivin explains (emphasis ours):

The responsibility to build projectors lies in one space. The rationale to define them is quite simple: when one facility is available in another space and that building it in a given space is economically too costly, then the decision may be taken to build a projector in that given space. There are two kinds of projectors according to the direction: injectors and extractors. Very often we need a couple of injector/extractor [(sic.)] to solve a given problem. [Béz05a]

In other words, injectors are meant to be transforms responsible for projecting elements from one TS into another. Our "injectors" behaved like real injectors in some cases (e.g. Dia), but there were also extractors in the mix (e.g. PlantUML) and even "injector-extractors" too (e.g. JSON, org-mode). Calling this motley projector set "injectors" was a bit of a stretch, and maybe even contrary to the Domain Architecture clean up, given its responsibility for aligning Dogen source code and MDE vocabulary. After wrecking our brains for a fair bit, we decided "codec" sufficed as a stop-gap alternative:

A codec is a device or computer program that encodes or decodes a data stream or signal. Codec is a portmanteau [a blend of words in which parts of multiple words are combined into a new word] of coder/decoder. [Source: Wikipedia]

As this definition implies, the term belongs to the Audio/Video domain so its use never felt entirely satisfying; but, try as we might, we could not come up with a better of way of saying "injection and extraction" in one word, nor had anyone — to our knowledge — defined the appropriate portemanteau within MDE's canon. The alert reader won't fail to notice this is a classic case of a design smell, and so did we, though it was hard to pinpoint what hid behind the smell. Since development life is more than interminable discussions on terminology, and having more than exhausted the allocated resources for this matter, a line was drawn: "codec" was to remain in place until something better came along. So things stood at the start of the sprint, in this unresolved state.

Then, whilst dabbling on some apparently unrelated matters, the light bulb moment finally arrived; and when we fully grasped all its implications, the fallout was much bigger than just a component rename. To understand why it was so, it's important to remember that MASD theory set in stone the very notion of "injection from multiple sources" via the pervasive integration principle — the second of the methodology's six core values. I shan't bother you too much with the remaining five principles, but it is worth reading Principle 2 in full to contextualise our decision making. The PhD thesis (p. 61) states:

Principle 2: MASD adapts to users’ tools and workflows, not the converse. Adaptation is achieved via a strategy of pervasive integration.

MASD promotes tooling integration: developers preferred tools and workflows must be leveraged and integrated with rather than replaced or subverted. First and foremost, MASD’s integration efforts are directly aligned with its mission statement (cf. Section 5.2.2 [Mission Statement]) because integration infrastructure is understood to be a key source of SRPPs [Schematic and Repetitive Physical Patterns]. Secondly, integration efforts must be subservient to MASD’s narrow focus [Principle 1]; that is, MASD is designed with the specific purpose of being continually extended, but only across a fixed set of dimensions. For the purposes of integration, these dimensions are the projections in and out of MASD’s TS [Technical Spaces], as Figure 5.2 illustrates.

Figure 1 [orginaly 5.2]: MASD Pervasive integration strategy.

Figure 1 [orginaly 5.2]: MASD Pervasive integration strategy.

Within these boundaries, MASD’s integration strategy is one of pervasive integration. MASD encourages mappings from any tools and to any programming languages used by developers — provided there is sufficient information publicly available to create and maintain those mappings, and sufficient interest from the developer community to make use of the functionality. Significantly, the onus of integration is placed on MASD rather than on the external tools, with the objective of imposing minimal changes to the tools themselves. To demonstrate how the approach is to be put in practice, MASD’s research includes both the integration of org-mode (cf. Chapter 7), as well as a survey on the integration strategies of special purpose code generators (Craveiro, 2021d [available here]); subsequent analysis generalised these findings so that MASD tooling can benefit from these integration strategies. Undertakings of a similar nature are expected as the tooling coverage progresses.

Whilst in theory this principle sounds great, and whilst we still agree wholeheartedly with it in spirit, there are a few practical problems with its current implementation. The first, which to be fair is already hinted at above, is that you need to have an interested community maintaining the injectors into MASD's TS. That is because, even with decent test coverage, it's very easy to break existing workflows when adding new functionality, and the continued maintenance of the tests is costly. Secondly, many of these formats evolve over time, so one needs to keep up-to-date with tooling to remain relevant. Thirdly, as we add formats we will inevitably pickup more and more external dependencies, resulting in a bulking up of Dogen's core only to satisfy some possibly peripheral use case. Finally, each injector adds a large cognitive load because, as we do changes, we now need to revisit all injectors and see how they map to each representation. Advanced mathematics is not required to see that the velocity of coding is an inverse function of the number of injectors; simple extrapolation shows a future where complexity goes through the roof and development slows down to a crawl. The obviousness of this conclusion does leave one wondering why it wasn't spotted earlier. Turns out we had looked into it but the analysis was naively hand-waved away during our PhD research by means of one key assumption: we posited the existence of a "native" format for modeling, whose scope would be a super-set of all functionality required by MASD. XMI was the main contender, and we even acquired Mastering XMI: Java Programming with the XMI Toolkit, XML and UML (OMG) for this purpose. In this light, mappings were seen as trivial-ish functions to well defined structural patterns, rather than an exploration of an open-ended space. Turns out this assumption was misplaced.

To make matters worse, the more we used org-mode in anger, the more we compared its plasticity to all other formats. Soon, a very important question emerged: what if org-mode is the native format for MASD? That is to say, given our experience with the myriad of input formats (including Dia, JSON, XMI and others), what if org-mode is the format which best embodies MASD's approach to Literate Modeling? Thus far, it certainly has proven to be the format with the lowest impedance mismatch to our conceptual model. And we could already see how the future would play out by looking at some of the stories in this release: there were obvious ways in which to simplify the org-mode representation (with the objective of improving PlantUML output), but these changes lacked an obvious mapping to other codecs such as Dia and JSON. They could of course be done, but in ways that would increase complexity across the board for other codecs. If to this you add resourcing constraints, then it makes sense to refocus the mission and choose a single format as the native MASD input format. Note that it does not mean we are abandoning Principle 2 altogether; one can envision separate repos for tools with mapping code that translates from a specific input format into org-mode, and these can even be loaded into the Dogen binary as shared objects via a plugin interface a-la Boost.DLL. In this framework, each format becomes the responsibility of a specific maintainer with its own plugin and set of tests — all of which exogenous to Dogen's core responsibilities — but still falling under the broader MASD umbrella. Most important of all, they can safely be ignored until such time concrete use cases arrive.

Figure 2: After a decade and a half of continuous usage, Dia stands down - for now.

Figure 2: After a decade and a half of continuous usage, Dia stands down - for now.

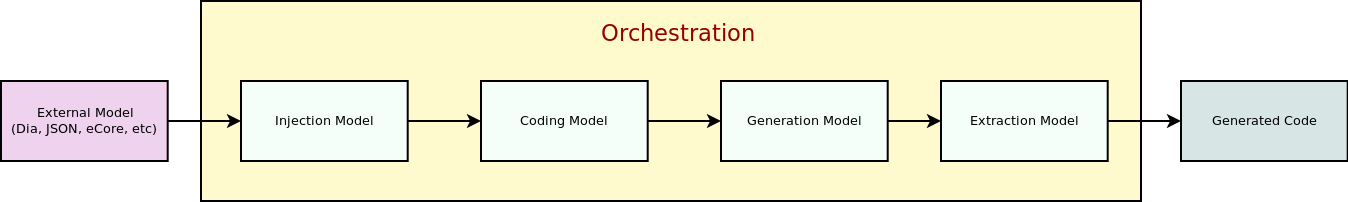

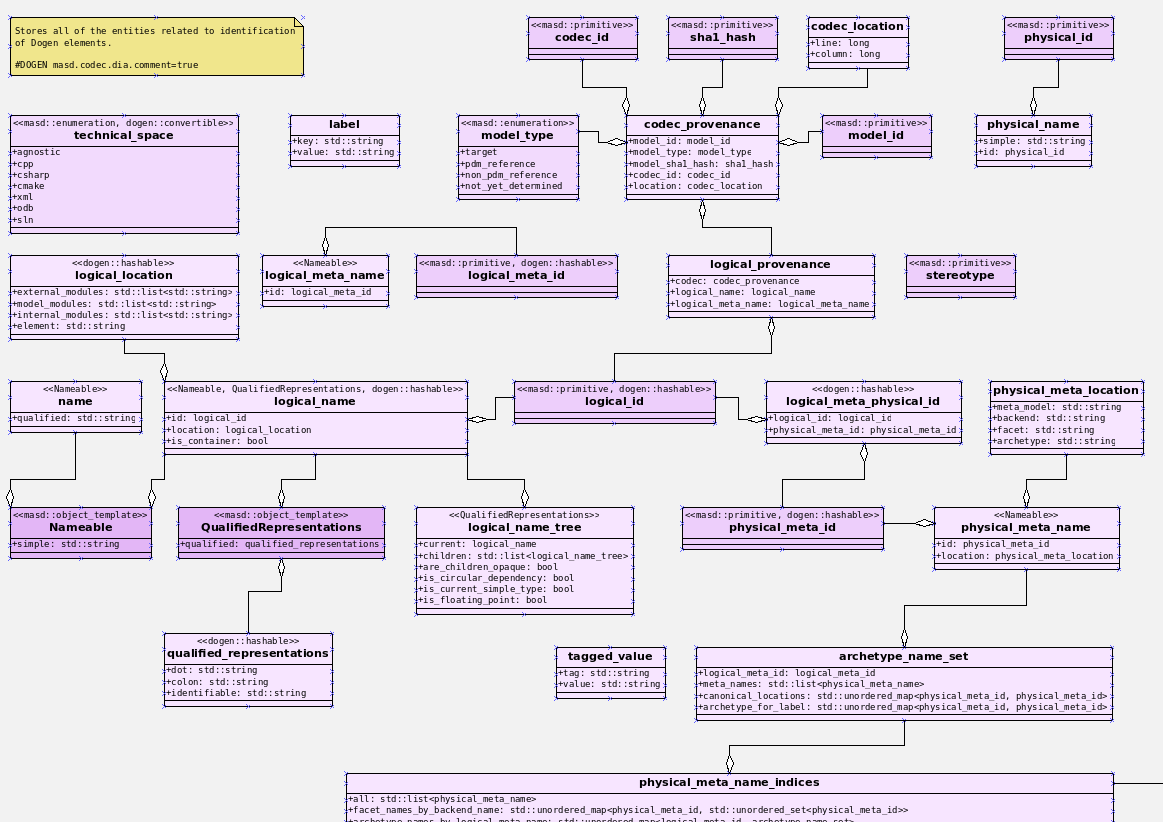

Whilst the analysis required its fair share of white-boarding, the resulting action items did not; they were dealt with swiftly at the sprint's death. Post implementation, we could not help but notice its benefits are even broader than originally envisioned because a lot of the complexity in the codec model was related to supporting bits of functionality for disparate codecs. In addition, we trimmed down dependencies to libxml and zlib, and removed a lot of testing infrastructure — including the deletion of the infamous "frozen" repo described in Sprint 30. It was painful to see Dia going away, having used it for over a decade (Figure 2). Alas, one can ill afford to be sentimental with code bases, lest they rot and become an unmaintainable ball of mud. The dust has barely settled, but it already appears we are converging closer to the original vision of injection (Figure 3); next sprint we'll continue to work out the implications of this change, such as moving PlantUML output to regular code generation. If that is indeed doable, it'll be a major breakthrough in regularising the code base.

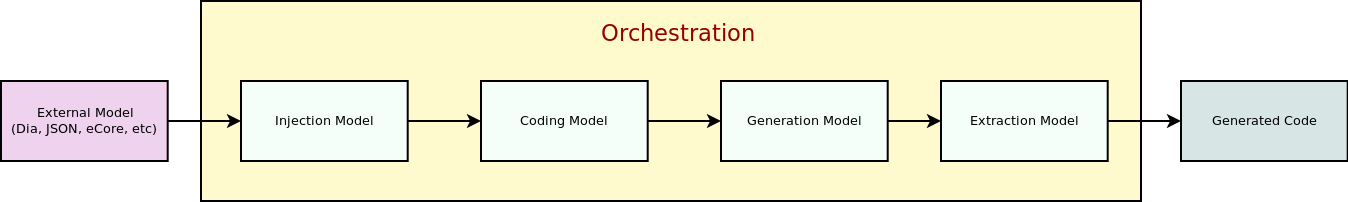

Figure 3: The Dogen pipeline, circa Sprint 12.

Figure 3: The Dogen pipeline, circa Sprint 12.

Those still paying attention will not fail to see a symmetry between injectors and extractors. In other words, as we increase Dogen's coverage across TS — adding more and more languages, and more and more functionality in each language — we will suffer from a similar complexity explosion to what was described above for injection. However, several mitigating factors come to our rescue, or so we hope. First, whilst injectors are at the mercy of the tooling, which changes often, extractors depend on programming language specifications, idioms and libraries. These change too but not quite as often. The problem is worse for libraries, of course, as these do get released often, but not quite as bad for the programming language itself. Secondly, there is an expectation of backwards compatibility when programming languages change, meaning we can get away with being stale for longer; and for libraries, we should clearly state which versions we support. Existing versions will not bit-rot, though we may be a bit stale with regards to latest-and-greatest. I guess, as it was with injectors, time will tell how well these assumptions hold up.

Improve note placement in PlantUML for namespaces

A minor user facing change was the improvement on how we generate PlantUML notes for namespaces. In the past these were generated as follows:

namespace entities #F2F2F2 {

note top of variability

Houses all of the meta-modeling elements related to variability.

end note

The problem with this approach is that the notes end up floating above the namespace with an arrow, making it hard to read. A better approach is a floating note:

namespace entities #F2F2F2 {

note variability_1

Houses all of the meta-modeling elements related to variability.

end note

The note is now declared inside the namespace. To ensure the note has a unique name, we simply append the note count.

A second, somewhat related change is the removal of indentation on notes:

note as transforms_1

Top-level transforms for Dogen. These are the entry points to all

transformations.

end note

Sadly, though it makes PlantUML output a fair bit uglier, this change was needed because indentation spacing is included in the output of PlantUML. Notes now look better — i.e., un-indented — though of course the input PlantUML script did suffer. These are the trade-offs one must make.

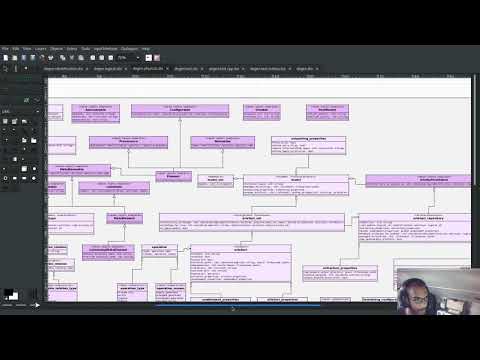

Change PlantUML's layout engine

Strictly speaking, this change is not user facing per se — in other words, nothing will change for users, unless they follow the same approach as Dogen. However, as it has had a major impact in the readability of our PlantUML diagrams, we believe it's worth shouting about. Anyway, to cut a long story short: we played a bit with different layout engines this sprint, as part of our efforts in making PlantUML diagrams more readable. In the end we settled on ELK, the Eclipse Layout Kernel. If you are interested, we were greatly assisted in our endeavours by the PlantUML community:

- Alternative layout engines from graphviz #1110

- Class diagrams: how to make best use of space in large diagrams #1187

The change itself is fairly minor from a Dogen perspective, e.g. in CMake we added:

message(STATUS "Found PlantUML: ${PLANTUML_PROGRAM}")

set(WITH_PLANTUML "on")

set(PLANTUML_ENVIRONMENT PLANTUML_LIMIT_SIZE=65536 PLANTUML_SECURITY_PROFILE=UNSECURE)

set(PLANTUML_OPTIONS -Playout=elk -tsvg)

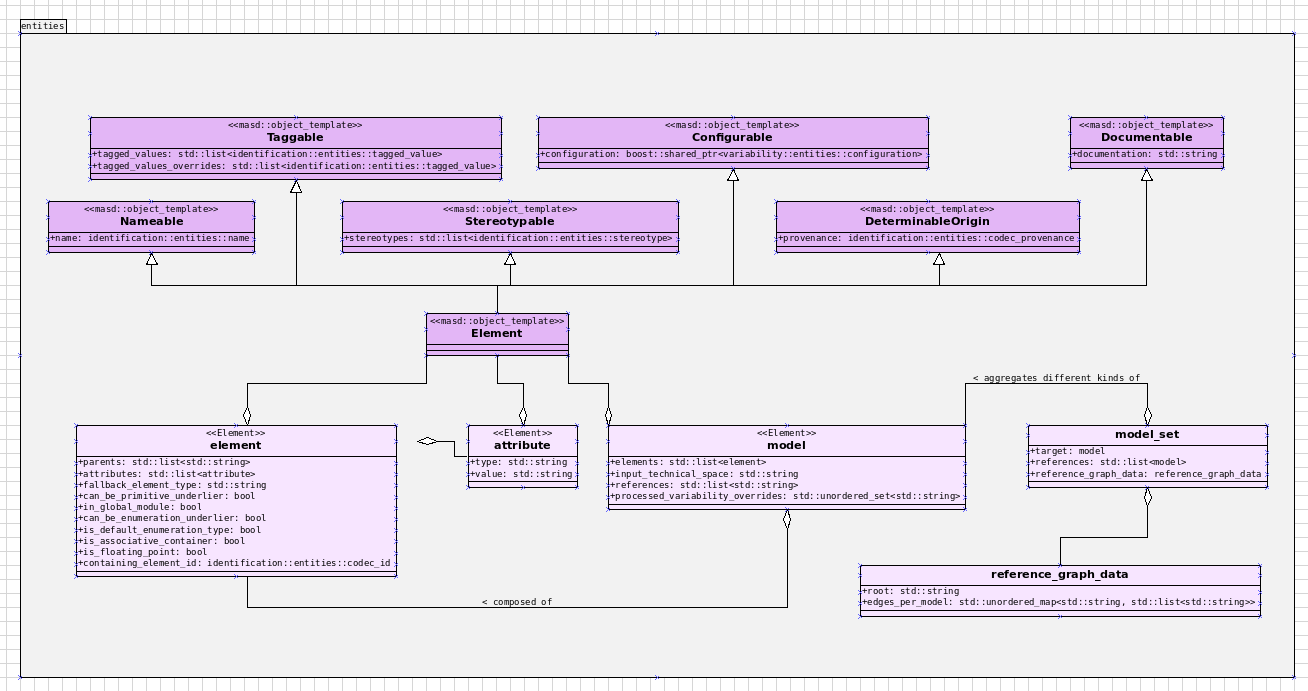

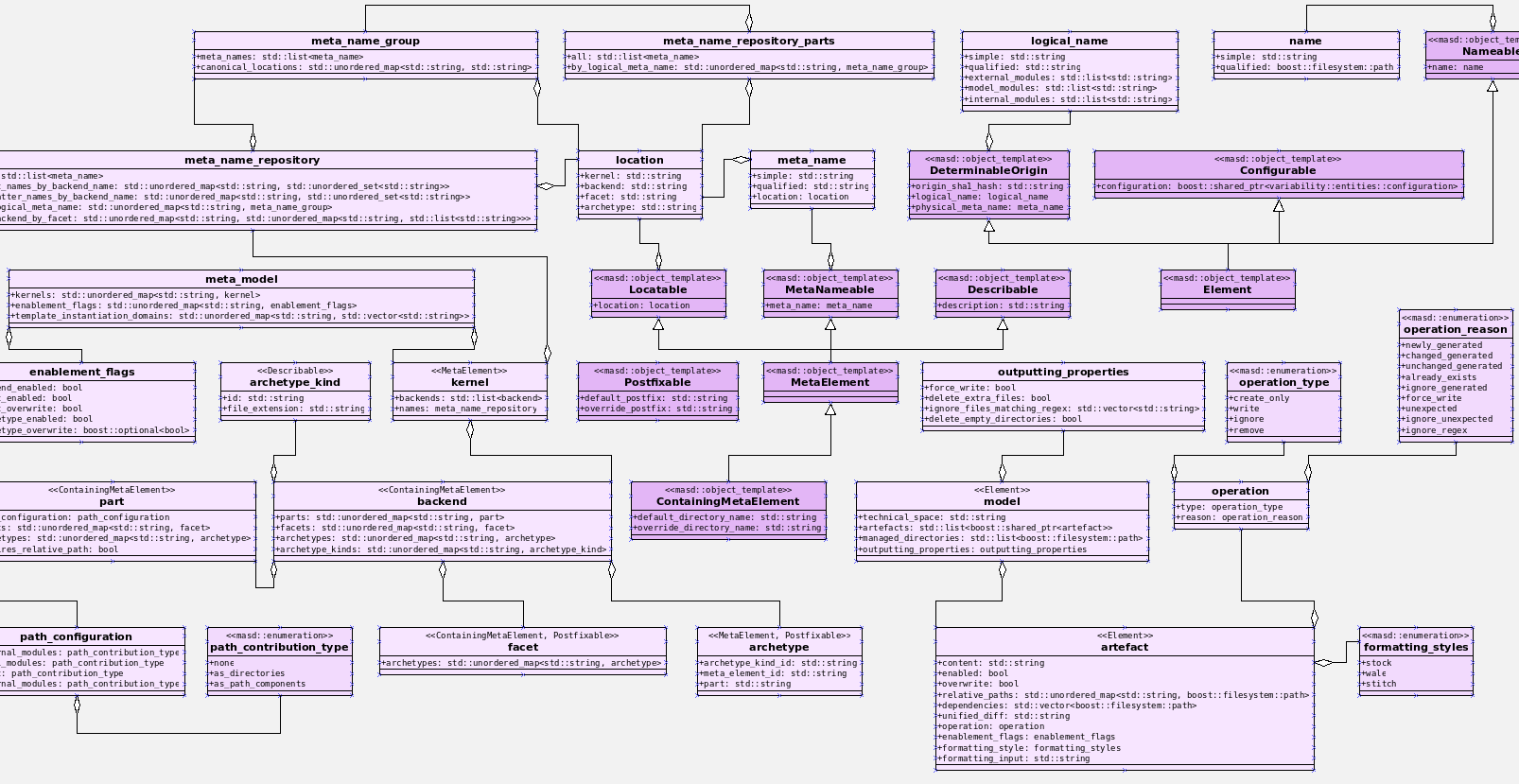

The operative part being -Playout=elk. Whilst it did not solve all of our woes, it certainly made diagrams a tad neater as Figure 4 attests.

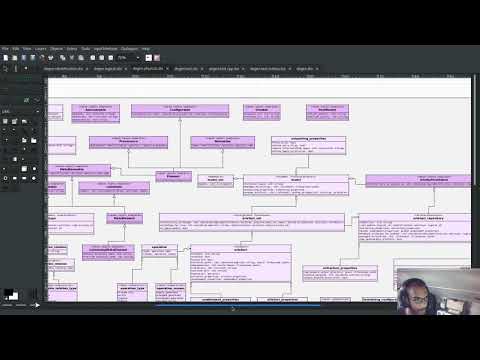

Figure 4: Codec model in PlantUML with the ELK layout.

Figure 4: Codec model in PlantUML with the ELK layout.

Note also that you need to install the ELK jar, as per instructions in the PlantUML site.

Development Matters

In this section we cover topics that are mainly of interest if you follow Dogen development, such as details on internal stories that consumed significant resources, important events, etc. As usual, for all the gory details of the work carried out this sprint, see the sprint log. As usual, for all the gory details of the work carried out this sprint, see the sprint log.

Milestones and Éphémérides

There were no particular events to celebrate.

Significant Internal Stories

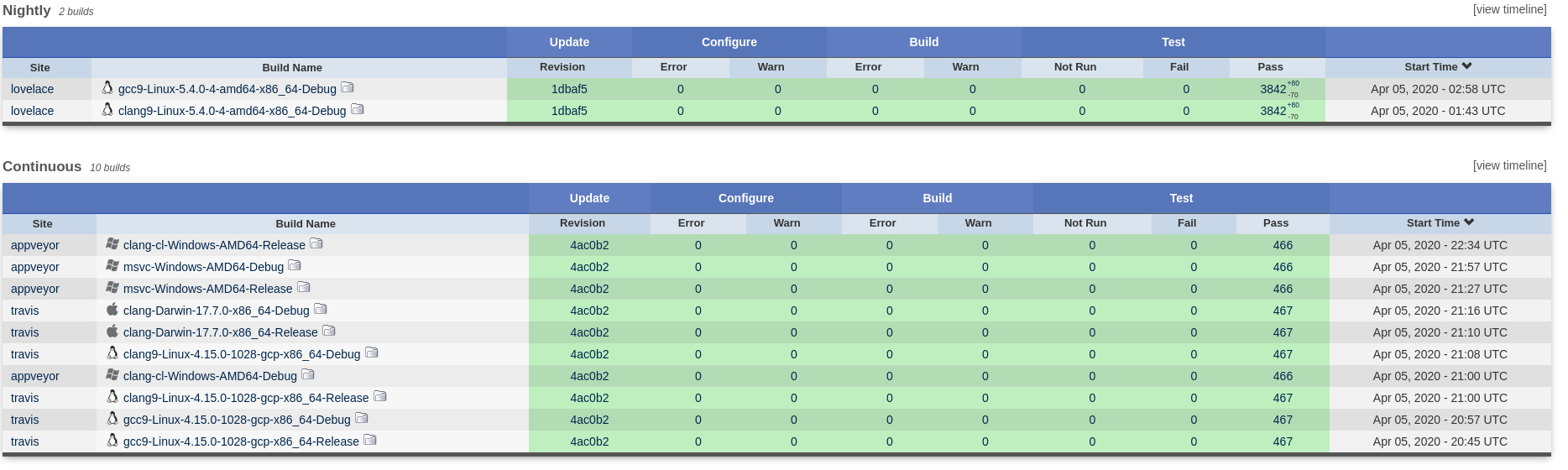

This was yet another sprint focused on internal engineering work, completing the move to the new CI environment that was started in Sprint 31. This work can be split into three distinct epics: continuous builds, nightly builds and general improvements. Finally, we also spent a fair bit of time improving PlantUML diagrams.

CI Epic 1: Continuous Builds

The main task this sprint was to get the Reference Products up to speed in terms of Continuous builds. We also spent some time ironing out messaging coming out of CI.

Figure 5: Continuous builds for the C++ Reference Product.

Figure 5: Continuous builds for the C++ Reference Product.

The key stories under this epic can be summarised as follows:

- Add continuous builds to C++ reference product: CI has been restored to the C++ reference product, via github workflows.

- Add continuous builds to C# reference product: CI has been restored to the C# reference product, via github workflows.

- Gitter notifications for builds are not showing up: some work was required to reinstate basic Gitter support for GitHub workflows. It the end it was worth it, especially because we can see everything from within Emacs!

-

Create a GitHub account for MASD BOT: closely related to the previous story, it was a bit annoying to have the GitHub account writing messages to gitter as oneself because you would not see these (presumably the idea being that you sent the message yourself, so you don't need to see it twice). Turns out its really easy to create a github account for a bot, just use your existent email address and add

+something, for example+masd-bot. With this we now see the messages as coming from the MASD bot.

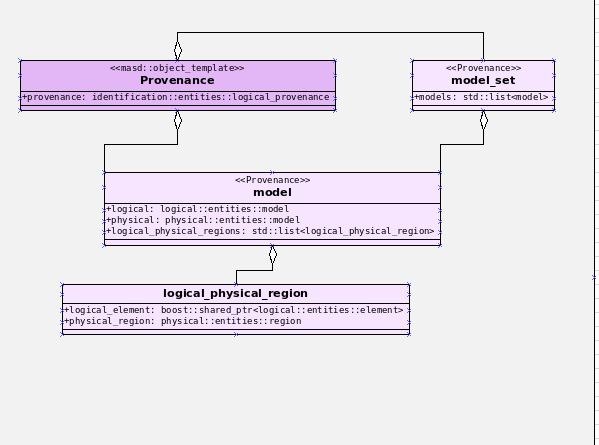

CI Epic 2: Nightly Builds

This sprint was focused on bringing Nightly builds up-to-speed. The work was difficult due to the strange nature of our nightly builds. We basically do two types things with our nightlies:

- run valgrind on the existing CI, to check for any memory issues. In the future one can imagine adding fuzzing etc and other long running tasks that are not suitable for every commit.

- perform a "full generation" for all Dogen code, called internally "fullgen". This is a setup whereby we generate all facets across physical space, even though many of them are disabled for regular use. It serves as a way to validate that we generate good code. We also generate tests for these facets. Ideally we'd like to valgrind all of this code too.

At the start of this sprint we were in a bad state because all of the changes done to support CI in GitHub didn't work too well with our current setup. In addition, because nightlies took too long to run on Travis, we were running them on our own PC. Our first stab was to simply move nightlies into GitHub workflow. We soon found out that a naive approach would burst GitHub limits, generous as they are, because fullgen plus valgrind equal a long time running tests. Eventually we settled on the final approach of splitting fullgen from the plain nightly. This, plus the deprecation of vast swathes of the Dogen codebase meant that we could run fullgen.

Figure 6: Nightly builds for Dogen.

Figure 6: Nightly builds for Dogen. fg stands for fullgen.

In terms of detail, the following stories were implemented to get to the bottom of this epic:

- Improve diffing output in tests: It was not particularly clear why some tests were failing on nightlies but passing on continuous builds. We spent some time making it clearer.

- Nightly builds are failing due to missing environment var: A ridiculously large amount of time was spent in understanding why the locations of the reference products were not showing up in nightly builds. In the end, we ended up changing the way reference products are managed altogether, making life easier for all types of builds. See this story under "General Improvements".

- Full generation support in tests is incorrect: Nightly builds require "full generation"; that is to say, generating all facets across physical space. However, there were inconsistencies on how this was done because our unit tests relied on "regular generation".

- Tests failing with filesystem errors: yet another fallout of the complicated way in which we used to do nightlies, with lots of copying and moving of files around. We somehow managed to end up in a complex race condition when recreating the product directories and initialising the test setup. The race condition was cleaned up and we are more careful now in how we recreate the test data directories.

- Add nightly builds to C++ reference product: We are finally building the C++ reference implementation once more.

- Investigate nightly issues: this was an hilarious problem: we were still running nightlies on our desktop PC, and after a Debian update they stopped appearing. Reason: for some reason sleep mode was set to a different default and the PC was now falling asleep after a certain time without use. However, the correct solution is to move to GitHub and not depend on local PCs so we merely deprecated local nightlies. It also saves on electricity bills, so it was a double win.

- Create a nightly github workflow: as per the previous story, all nightlies are now in GitHub! this is both for "plain" nightlies as well as "fullgen" builds, with full CDash integration.

- Run nightlies only when there are changes: we now only build nightlies if there was a commit in the previous 24 hours, which hopefully will make GitHub happier.

- Consider creating nightly branches: with the move to GitHub actions, it made sense to create a real branch that is persisted in GitHub rather than a temporary throw away one. This is because its very painful to investigate issues: one has to recreate the "fullgen" code first, then redo the build, etc. With the new approach, the branch for the current nightly is created and pushed into GitHub, and then the nightly runs off of it. This means that, if the nightly fails, one simply has to pull the branch and build it locally. Quality of life improved dramatically.

- Nightly builds are taking too long: unfortunately, we burst the GitHub limits when running fullgen builds under valgrind. This was a bit annoying because we really wanted to see if all of the generated code was introducing some memory issues, but alas it just takes too long. Anyways, as a result of this, and as alluded to in other stories, we split "plain" nightlies from "fullgen" nightlies, and used valgrind only on plain nightlies.

CI Epic 3: General Improvements

Some of the work did not fall under Continuous or Nightly builds, so we are detailing it here:

- Update boost to latest in vcpkg: Dogen is now using Boost v1.80. In addition, given how trivial it is to update dependencies, we shall now perform an update at the start of every new sprint.

- Remove deprecated uses of boost bind: Minor tidy-up to get rid of annoying warnings that resulted from using latest Boost.

- Remove uses of mock configuration factory: as part of the tidy-up around configuration, we rationalised some of the infrastructure to create configurations.

- Cannot access binaries from release notes: annoyingly it seems the binaries generated on each workflow are only visible to certain GitHub users. As a mitigation strategy, for now we are appending the packages directly to the release note. A more lasting solution is required, but it will be backlogged.

- Enable CodeQL: now that LGTM is no more, we started looking into its next iteration. First bits of support have been added via GitHub actions, but it seems more is required in order to visualise its output. Sadly, as this is not urgent, it will remain on the backlog.

- Code coverage in CDash has disappeared: as part of the CI work, we seemed to have lost code coverage. It is still not clear why this was happening, but after some other changes, the code coverage came back. Not ideal, clearly there is something stochastic somewhere on our CTest setup but, hey-ho, nothing we can do until the problem resurfaces.

- Make reference products git sub-modules: in the past we had a complicated set of scripts that downloaded the reference products, copied them to well-known locations and so on. It was... not ideal. As we had already mentioned in the previous release, it also meant we had to expose end users to our quirky directory structure because the CMake presets are used by all. With this release we had a moment of enlightenment: what if the reference products were moved to git submodules? We've had such success with vcpkg in the previous sprint that it seemed like a no-brainer. And indeed it was. We are now not exposing any of the complexities of our own personal choices in directory structures, and due to the magic of git, the specific version of the reference product is pinned on the commit and commited into git. This is a much better approach altogether.

Figure 7: Reference products are now git sub-modules of Dogen.

Figure 7: Reference products are now git sub-modules of Dogen.

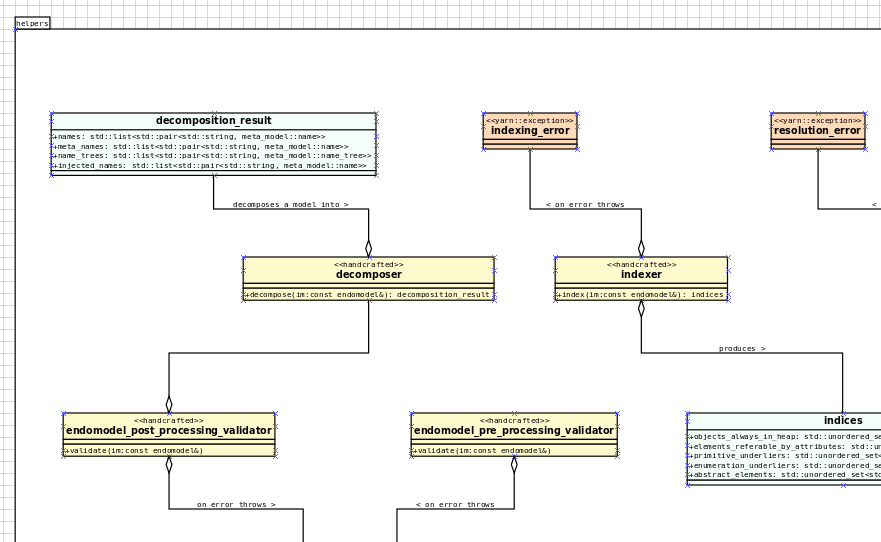

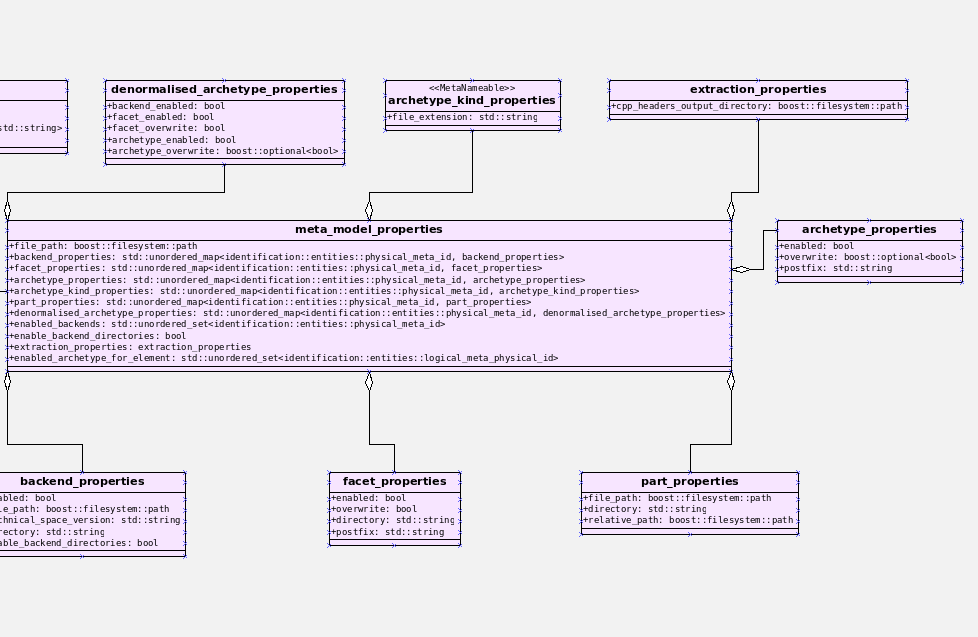

PlantUML Epic: Improvements to diagrams of Dogen models

Our hope was to resume the fabled PMM refactor this sprint. However, as we tried using the PlantUML diagrams in anger, it was painfully clear we couldn't see the class diagram for the classes, if you pardon the pun. To be fair, smaller models such as codec, identification and so on had diagrams that could be readily understood; but key diagrams such as those for the logical and text models are in an unusable state. So it was that, before we could get on with real coding, we had to make the diagrams at least "barely usable", to borrow Ambler's colourful expression [Ambler, Scott W (2007). “Agile Model driven development (AMDD)]". In the previous sprint we had already added a simple way to express relationships, like so:

** Taggable :element:

:PROPERTIES:

:custom_id: 8BBB51CE-C129-C3D4-BA7B-7F6CB7C07D64

:masd.codec.stereotypes: masd::object_template

:masd.codec.plantuml: Taggable <|.. comment

:END:

Any expression under masd.codec.plantuml is transported verbatim to the PlantUML diagram. We decided to annotate all Dogen models with such expressions to see how that would impact diagrams in terms of readability. Of course, the right thing would be to automate such relationships but, as per previous sprint's discussions, this is easier said than done: you'd move from a world of no relationships to a world of far too many relationships, making the diagram equally unusable. So hand-holding it was. This, plus the move to ELK as explained above allowed us to successfully update a large chunk of Dogen models:

-

dogen -

dogen.cli -

dogen.codec -

dogen.identification -

dogen.logical -

dogen.modeling -

dogen.orchestration -

dogen.org -

dogen.physical

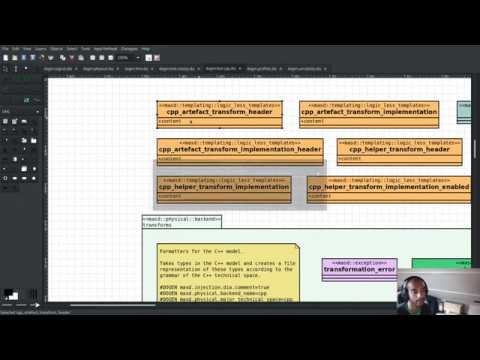

However, we hit a limitation with dogen.text. The model is just too unwieldy in its present form. Part of the problem stems from the fact that there are just no relations to add: templates are not related to anything. So, by default, PlantUML makes one long (and I do mean long) line. Here is a small fragment of the model:

Figure 8: Partial representation of Dogen's text model in PlantUML.

Figure 8: Partial representation of Dogen's text model in PlantUML.

Tried as we might we could not get this model to work. Then we noticed something interesting: some parts of the model where classes are slightly smaller were being rendered in a more optimal way, as you can see in the picture above; smaller classes cluster around a circular area whereas very long classes are lined up horizontally. We took our findings to PlantUML:

We are still investigating what can be done from a PlantUML perspective, but it seems having very long stereotypes is confusing the layout engine. Reflecting on this, it seems this is also less readable for humans too. For example:

**** builtin header :element:

:PROPERTIES:

:custom_id: ED36860B-162A-BB54-7A4B-4B157F8F7846

:masd.wale.kvp.containing_namespace: text.transforms.hash

:masd.codec.stereotypes: masd::physical::archetype, dogen::builtin_header_configuration

:END:

Using stereotypes in this manner is a legacy from Dia, because that is what is expected of a UML diagram. However, since org-mode does not suffer from these constraints, it seemed logical to create different properties to convey different kinds of information. For instance, we could split out configurations into its own entry:

**** enum header :element:

:PROPERTIES:

:custom_id: F2245764-7133-55D4-84AB-A718C66777E0

:masd.wale.kvp.containing_namespace: text.transforms.hash

:masd.codec.stereotypes: masd::physical::archetype

:masd.codec.configurations: dogen::enumeration_header_configuration

:END:

And with this, the mapping into PlantUML is also simplified, since perhaps the configurations are not needed from a UML perspective. Figure 9 shows both of these approaches side by side:

Figure 9: Removal of some stereotypes.

Figure 9: Removal of some stereotypes.

Next sprint we need to update all models with this approach and see if this improves diagram generation.

This epic was composed of a number of stories, as follows:

- Add PlantUML relationships to diagrams: manually adding each relationship to each model was a lengthy (and somewhat boring) operation, but improved the generated diagrams dramatically.

- Upgrade PlantUML to latest: it seems latest is always greatest with PlantUML, so we spent some time understanding how we can manually update it rather than depend on the slightly older version in Debian. We ended up settling on a massive hack, just drop the JAR in the same directory as the packaged version and then symlink it. Not great, but it works.

- Change namespaces note implementation in PlantUML: See user visible stories above.

- Consider using a different layout engine in PlantUML: See user visible stories above.

Video series of Dogen coding

We have been working on a long standing series of videos on the PMM refactor. However, as you probably guessed, they have had nothing to do with refactoring with the PMM so far, because the CI/CD work has dominated all our time for several months now. To make matters more confusing, we had recorded a series of videos on CI previously (MASD - Dogen Coding: Move to GitHub CI), but in an extremely optimistic step, we concluded that series because we thought the work that was left was fairly trivial — famous last words, hey. If that wasn't enough, our Debian PC has been upgraded to Pipewire which — whilst a possibly superior option to Pulse Audio — lacks a noise filter that we can work with.

To cut a long and somewhat depressing story short, our videos were in a big mess and we didn't quite know how to get out of it. So this sprint we decided to start from a clean slate:

- the existing series on PMM refactor was renamed to "MASD - Dogen Coding: Move to GitHub Actions". It seems best rather than append these 3 videos to the existing "MASD - Dogen Coding: Move to GitHub CI" playlist because it would probably make it even more confusing.

- we... well, completed it as is, even though it missed all of the work in the previous sprint. This is just so we can get it out of the way. I guess once noise-free sound is working again we could add an addendum and do a quick tour of our new CI/CD infrastructure, but given our present time constraints it is hard to tell when that will be.

Anyways, hopefully all of that makes some sense. Here are the videos we recorded so far.

Video 2: Playlist for "MASD - Dogen Coding: Move to GitHub Actions".

Video 2: Playlist for "MASD - Dogen Coding: Move to GitHub Actions".

The table below shows the individual parts of the video series.

| Video | Description |

|---|---|

| Part 1 | In this video we start off with some boring tasks left over from the previous sprint. In particular, we need to get nightlies to go green before we can get on with real work. |

| Part 2 | This video continues the boring work of sorting out the issues with nightlies and continuous builds. We start by revising what had been done offline to address the problems with failing tests in the nightlies and then move on to remove the mock configuration builder that had been added recently. |

| Part 3 | With this video we finally address the remaining CI problems by adding GitHub Actions support for the C# Reference Product. |

Table 1: Video series for "MASD - Dogen Coding: Move to GitHub Actions".

With a bit of luck, regular video service will be resumed next sprint.

Resourcing

The resourcing picture is, shall we say, nuanced. On the plus side, utilisation is down significantly when compared to the previous sprint — we did take four months this time round instead of a couple of years, so that undoubtedly helped. On the less positive side, we still find ourselves well outside the expected bounds for this particular metric; given a sprint is circa 80 hours, one would expect to clock that much time in a month or two of side-coding. We are hoping next sprint will compress some of the insane variability we have experienced of late with regards to the cadence of our sprints.

Figure 10: Cost of stories for sprint 32.

Figure 10: Cost of stories for sprint 32.

The per-story data forms an ever so slightly flatterer picture. Around 23% of the overall spend was allocated towards non-coding tasks such as writing the release notes (~12.5%), backlog refinement (~8%) and demo related activities. Worrying, it was up around 5% from the previous sprint, which was itself already an extremely high number historically. Given the resource constraints, it would be wise to compress time spent on management activities such as these to free up time for actual work, and buck the trend of these two or three sprints. However, the picture is not quite as clear cut as it may appear since release notes are becoming more and more a vehicle for reflection, both on the activities of the sprint (post mortem) but also taking on a more philosophical, and thus broader, remit. Given no further academic papers are anticipated, most of our literature reflections are now taking place via this medium. In this context, perhaps the high cost on the release notes is worth its price.

With regards t the meat of the sprint: engineering activities where bucketed into three main topics, with CI/CD taking around 30% of the total ask (22% for Nightlies and 10% for Continuous), roughly 30% taken on PlantUML work and the remaining 15% used in miscellaneous engineering activities — including a fair portion of analysis on the "native" format for MASD. This was certainly time well-spent, even though we would have preferred to conclude CI work quicker. All and all, it was a though but worthwhile sprint, which marks the end of the PhD era and heralds the start of the new "open source project on the side era".

Roadmap

With Sprint 32 we decided to decommission the Project Roadmap. It had served us well up to the end of the PhD as it was a useful, if albeit vague, forecasting device for what was to come up in the short to medium term. Now that we have finished commitments with firm dead lines we can rely on a pure agile approach and see where each sprint takes us. Besides, it is one less task to worry about when writing up the release notes. The road map started initially in Sprint 14, so it has been with us just shy of four years.

Binaries

Binaries for the present release are available in Table 2.

| Operative System | Binaries |

|---|---|

| Linux Debian/Ubuntu | dogen_1.0.32_amd64-applications.deb |

| Windows | DOGEN-1.0.32-Windows-AMD64.msi |

| Mac OSX | DOGEN-1.0.32-Darwin-x86_64.dmg |

Table 2: Binary packages for Dogen.

A few important notes:

- Linux: the Linux binaries are not stripped at present and so are larger than they should be. We have an outstanding story to address this issue, but sadly CMake does not make this a trivial undertaking.

- OSX and Windows: we are not testing the OSX and Windows builds (e.g. validating the packages install, the binaries run, etc.). If you find any problems with them, please report an issue.

- 64-bit: as before, all binaries are 64-bit. For all other architectures and/or operative systems, you will need to build Dogen from source. Source downloads are available in zip or tar.gz format.

- Assets on release note: these are just pictures and other items needed by the release note itself. We found that referring to links on the internet is not a particularly good idea as we now have lots of 404s for older releases. Therefore, from now on, the release notes will be self contained. Assets are otherwise not used.

Next Sprint

Now that we are finally out of the woods of CI/CD engineering work, expectations for the next sprint are running high. We may actually be able to devote most of the resourcing towards real coding. Having said that, we still need to mop things up with the PlantUML representation, which will probably not be the most exciting of tasks.

That's all for this release. Happy Modeling!

v1.0.31

1 year ago Graduation day for the PhD programme of Computer Science at the University of Hertfordshire, UK. (C) 2022 Shahinara Craveiro.

Graduation day for the PhD programme of Computer Science at the University of Hertfordshire, UK. (C) 2022 Shahinara Craveiro.

Introduction

After an hiatus of almost 22 months, we've finally managed to push another Dogen release out of the door. Proud of the effort as we are, it must be said it isn't exactly the most compelling of releases since the bulk of its stories are related to basic infrastructure. More specifically, the majority of resourcing had to be shifted towards getting Continuous Integration (CI) working again, in the wake of Travis CI's managerial changes. However, the true focus of the last few months lays outside the bounds of software engineering; our time was spent mainly on completing the PhD thesis, getting it past a myriad of red-tape processes and perhaps most significantly of all, on passing the final exam called the viva. And so we did. Given it has taken some eight years to complete the PhD programme, you'll forgive us for the break with the tradition in naming releases after Angolan places or events; regular service will resume with the next release, on this as well as on the engineering front <knocks on wood, nervously>. So grab a cupper, sit back, relax, and get ready for the release notes that mark the end of academic life in the Dogen project.

User visible changes

This section covers stories that affect end users, with the video providing a quick demonstration of the new features, and the sections below describing them in more detail. The demo spends some time reflecting on the PhD programme overall.

Deprecate support for dumping tracing to a relational database

It wasn't that long ago Dogen was extended to dump tracing information into relational databases such as PostgreSQL and their ilk. In fact, v1.0.20's release notes announced this new feature with great fanfare, and we genuinely had high hopes for its future. You are of course forgiven if you fail to recall what the fuss was all about, so it is perhaps worthwhile doing a quick recap. Tracing - or probing as it was known then - was introduced in the long forgotten days of Dogen v1.0.05, the idea being that it would be useful to inspect model state as the transform graph went through its motions. Together with log files, this treasure trove of information enabled us to quickly understand where things went wrong, more often than not without necessitating a debugger. And it was indeed incredibly useful to begin with, but we soon got bored of manually inspecting trace files. You see, the trouble with these crazy critters is that they are rather plump blobs of JSON, thus making it difficult to understand "before" and "after" diffs for the state of a given model transform - even when allowing for json-diff and the like. To address the problem we doubled-down on our usage of JQ, but the more we did so, the clearer it became that JQ queries competed in the readability space with computer science classics like regular expressions and perl. A few choice data points should give a flavour of our troubles:

# JQ query to obtain file paths:

$ jq .models[0].physical.regions_by_logical_id[0][1].data.artefacts_by_archetype[][1].data.data.file_path

# JQ query to sort models by elements:

$ jq '.elements|=sort_by(.name.qualified)'

# JQ query for element names in generated model:

$ jq ."elements"[]."data"."__parent_0__"."name"."qualified"."dot"

It is of course deeply unfair to blame JQ for all our problems, since "meaningful" names such as __parent_0__ fall squarely within Dogen's sphere of influence. Moreover, as a tool JQ is extremely useful for what it is meant to do, as well as being incredibly fast at it. Nonetheless, we begun to accumulate more and more of these query fragments, glued them up with complex UNIX shell pipelines that dumped information from trace files into text files, and then dumped diffs of said information to other text files which where then... - well, you get the drift. These scripts were extremely brittle and mostly "one-off" solutions, but at least the direction of travel was obvious: what was needed was a way to build up a number of queries targeting the "before" and "after" state of any given transform, such that we could ask a series of canned questions like "has object x0 gone missing in transform t0?" or "did we update field f0 incorrectly in transform t0?", and so on. One can easily conceive that a large library of these queries would accumulate over time, allowing us to see at a glance what changed between transforms and, in so doing, make routine investigations several orders of magnitude faster. Thus far, thus logical. We then investigated PostgreSQL's JSON support and, at first blush, found it to be very comprehensive. Furthermore, given that Dogen always had basic support for ODB, it was "easy enough" to teach it to dump trace information into a relational database - which we did in the aforementioned release.

Alas, after the initial enthusiasm, we soon realised that expressing our desired questions as database queries was far more difficult than anticipated. Part of it is related to the complex graph that we have on our JSON documents, which could be helped by creating a more relational-database-friendly model; and part of it is the inexperience with PostgreSQL's JSON query extensions. Sadly, we do not have sufficient time address either question properly, given the required engineering effort. To make matters worse, even though it was not being used in anger, the maintenance of this code was become increasingly expensive due to two factors:

- its reliance on a beta version of ODB (v2.5), for which there are no DEBs readily available; instead, one is expected to build it from source using Build2, an extremely interesting but rather suis generis build tool; and

- its reliance on either a manual install of the ODB C++ libraries or a patched version of vcpkg with support for v2.5. As vcpkg undergoes constant change, this means that every time we update it, we then need to spend ages porting our code to the new world.

Now, one of the rules we've had for the longest time in Dogen is that, if something is not adding value (or worse, subtracting value) then it should be deprecated and removed until such time it can be proven to add value. As with any spare time project, time is extremely scarce, so we barely have enough of it to be confused with the real issues at hand - let alone speculative features that may provide a pay-off one day. So it was that, with great sadness, we removed all support for the relational backend on this release. Not all is lost though. We use MongoDB a fair bit at work, and got the hang of its query language. A much simpler alternative is to dump the JSON documents into MongoDB - a shell script would do, at least initially - and then write Mongo queries to process the data. This is an approach we shall explore next time we get stuck investigating an issue using trace dumps.

Add "verbatim" PlantUML extension

The quality of our diagrams degraded considerably since we moved away from Dia. This was to be expected; when we originally added PlantUML support in the previous release, it was as much a feasibility study as it was the implementation of a new feature. The understanding was that we'd have to spend a number of sprints slowly improving the new codec, until its diagrams where of a reasonable standard. However, this sprint made two things clear: a) just how much we rely on these diagrams to understand the system, meaning we need them back sooner rather than later; and b) just how much machinery is required to properly model relations in a rich way, as was done previously. Worse: it is not necessarily possible to merely record relations between entities in the input codec and then map those to a UML diagram. In Dia, we only modeled "significant relations" in order to better convey meaning. Lets make matters concrete by looking at a vocabulary type such as entities::name in model dogen::identification. It is used throughout the whole of Dogen, and any entity with a representation in the LPS (Logical-Physical Space) will use it. A blind approach of modeling each and every relation to a core type such as this would result in a mess of inter-crossing lines, removing any meaning from the resulting diagram.

After a great deal of pondering, we decided that the PlantUML output needs two kinds of data sources: automated, where the relationship is obvious and uncontroversial - e.g. the attributes that make up a class, inheritance, etc.; and manual, where the relationship requires hand-holding by a human. This is useful for example in the above case, where one would like to suppress relationships against a basic vocabulary type. The feature was implemented by means of adding a PlantUML verbatim attribute to models. It is called "verbatim" because we merely cut and paste the field's content into the PlantUML representation. By convention, these statements are placed straight after the entity they were added to. It is perhaps easier to understand this feature by means of an example. Say in the dogen.codec model one wishes to add a relationship between model and element. One could go about it as follows:

Figure 1: Use of the verbatim PlantUML property in the

Figure 1: Use of the verbatim PlantUML property in the dogen.codec model.

As you can see, the property masd.codec.plantuml is extremely simple: it merely allows one to enter valid PlantUML statements, which are subsequently transported into the generated source code without modification, e.g.:

Figure 2: PlantUML source code for

Figure 2: PlantUML source code for dogen.codec model.

For good measure, we can observe the final (graphical) output produced by PlantUML in Figure 3, with the two relations, and compare it with the old Dia representation (Figure 4). Its worth highlighting a couple of things here. Firstly - and somewhat confusingly - in addition to element, the example also captures a relationship with the object template Element. It was left on purpose as it too is a useful demonstration of this new feature. Note that it's still not entirely clear whether this is the correct UML syntax for modeling relationships with object templates - the last expert I consulted was not entirely pleased with this approach - but no matter. The salient point is not whether this specific representation is correct or incorrect, but that one can choose to use this or any other representation quite easily, as desired. Secondly and similarly, the aggregation between model_set, model and element is something that one would like to highlight in this model, and it is possible to do so trivially by means of this feature. Each of these classes is composed of a number of attributes which are not particularly interesting from a relationship perspective, and adding relations for all of those would greatly increase the amount of noise in the diagram.

Figure 3: Graphical output produced by PlantUML from Dogen-generated sources.

Figure 3: Graphical output produced by PlantUML from Dogen-generated sources.

This feature is a great example of how often one needs to think of a problem from many different perspectives before arriving at a solution; and that, even though the problem may appear extremely complex at the start, sometimes all it takes is to view it from a completely different angle. All and all, the feature was implemented in just over two hours; we had originally envisioned lots of invasive changes at the lowers levels of Dogen just to propagate this information, and likely an entire sprint dedicated to it. To be fair, the jury is not out yet on whether this is really the correct approach. Firstly, because we now need to go through each and every model and compare the relations we had in Dia to those we see in PlantUML, and implement them if required. Secondly, we have no way of knowing if the PlantUML input is correct or not, short of writing a parser for their syntax - which we won't consider. This means the user will only find out about syntax errors after running PlantUML - and given it will be within generated code, it is entirely likely the error messages will be less than obvious as to what is causing the problem. Thirdly and somewhat related: the verbatim nature of this attribute entails bypassing the Dogen type system entirely, by design. This means that if this information is useful for purposes other than PlantUML generation - say for example for regular source code generation - we would have no access to it. Finally, the name masd.codec.plantuml is also questionable given the codec name is plantuml. Next release we will probably name it to masd.codec.plantuml.verbatim to better reflect its nature.

Figure 4: Dia representation of the

Figure 4: Dia representation of the dogen.codec model.

A possibly better way of modeling this property is to add a non-verbatim attribute such as "significant relationship" or "user important relationship" or some such. Whatever its name, said attribute would model the notion of there being an important relationship between some types within the Dogen type system, and it could then be used by the PlantUML codec to output it in its syntax. However, before we get too carried away, its important to remember that we always take the simplest possible approach first and wait until use cases arrive, so all of this analysis has been farmed off to the backlog for some future use.

Video series on MDE and MASD

In general, we tend to place our YouTube videos under the Development Matters section of the release notes because these tend to be about coding within the narrow confines of Dogen. As with so many items within this release, an exception was made for one of the series because it is likely to be of interest to Dogen developers and users alike. The series in question is called "MASD: An introduction to Model Assisted Software Development", and it is composed of 10 parts as of this writing. Its main objective was to prepare us for the viva, so the long arc of the series builds up to why one would want to create a new methodology and ends with an explanation of what that methodology might be. However, as we were unsure as to whether we could use material directly from the thesis, and given our shortness of time to create new material specifically for the series, we opted for a high-level description of the methodology; in hindsight, we find it slightly unsatisfactory due to a lack of visuals so we are considering an additional 11th part which reviews a couple of key chapters from the thesis (5 and 6).

At any rate, the individual videos are listed on Table 1, with a short description. They are also available as a playlist, as per link below.

Video 2: Playlist "MASD: An introduction to Model Assisted Software Development".

Video 2: Playlist "MASD: An introduction to Model Assisted Software Development".

| Video | Description |

|---|---|

| Part 1 | This lecture is the start of an overview of Model Driven Engineering (MDE), the approach that underlies MASD. |

| Part 2 | In this lecture we conclude our overview of MDE by discussing the challenges the discipline faces in terms of its foundations. |

| Part 3 | In this lecture we discuss the two fundamental concepts of MDE: Models and Transformations. |

| Part 4 | In this lecture we take a large detour to think about the philosophical implications of modeling. In the detour we discuss Russell, Whitehead, Wittgenstein and Meyers amongst others. |

| Part 5 | In this lecture we finish our excursion into the philosophy of modeling and discuss two core topics: Technical Spaces (TS) and Platforms. |

| Part 6 | In this video we take a detour and talk about research, and how our programme in particular was carried out - including all the bumps and bruises we faced along the way. |

| Part 7 | In this lecture we discuss Variability and Variability Management in the context of Model Driven Engineering (MDE). |

| Part 8 | In this lecture we start a presentation of the material of the thesis itself, covering state of the art in code generation, and the requirements for a new approach. |

| Part 9 | In this lecture we outline the MASD methodology: its philosophy, processes, actors and modeling language. We also discuss the domain architecture in more detail. |

| Part 10 | In this final lecture we discuss Dogen, introducing its architecture. |

Table 1: Video series for "MASD: An introduction to Model Assisted Software Development".

Development Matters

In this section we cover topics that are mainly of interest if you follow Dogen development, such as details on internal stories that consumed significant resources, important events, etc. As usual, for all the gory details of the work carried out this sprint, see the sprint log. As usual, for all the gory details of the work carried out this sprint, see the sprint log.

Milestones and Éphémérides

This sprint marks the end of the PhD programme that started in 2014.

Figure 5: PhD thesis within the University of Hertfordshire archives.

Figure 5: PhD thesis within the University of Hertfordshire archives.

Significant Internal Stories

From an engineering perspective, this sprint had one goal which was to restore our CI environment. Other smaller stories were also carried out.

Move CI to GitHub actions

A great number of stories this sprint were connected with the epic of returning to a sane world of continuous integration; CI had been lost with the demise of the open source support in Travis CI. First and foremost, I'd like to give an insanely huge shout out to Travis CI for all the years of supporting open source projects, even when perhaps it did not make huge financial sense. Prior to this decision, we had relied on Travis CI quite a lot, and in general it just worked. To my knowledge, they were the first ones to introduce the simple YAML configuration for their IaC language, and it still supports features that we could not map to in our new approach (e.g. the infamous issue #399). So it was not without sadness that we lost Travis CI support and found ourselves needing to move on to a new, hopefully stable, home.

Whilst where at it, a second word of thanks goes out to AppVeyor who we have also used for the longest time, with very few complaints. For many years, AppVeyor have been great supporters of open source and free software, so a massive shout out goes to them as well. Sadly, we had to reconsider AppVeyor's use in the context of Travis CI's loss, and it seemed sensible to stick to a single approach for all operative systems if at all possible. Personally, this is a sad state of affairs because we are choosing to support one large monolithic corporation in detriment of two small but very committed vendors, but as always, the nuances of the decision making process are obliterated by the practicalities of limited resourcing with which to carry work out - and thus a small risk apetite for both the demise of yet another vendor as well as for the complexities that always arrive when dealing with a mix of suppliers.

And so our search begun. As we have support for GitHub, BitBucket and GitLab as Git clones, we considered these three providers. In the end, we settled on GitHub actions, mainly because of the wealth of example projects using C++. All things considered, the move was remarkably easy, though not without its challenges. At present we seem to have all Dogen builds across Linux, Windows and OSX working reliably - though, as always, much work still remains such as porting all of our reference products.

Figure 6: GitHub actions for the Dogen project.

Figure 6: GitHub actions for the Dogen project.

Related Stories: "Move build to GitHub", "Can't see build info in github builds", "Update the test package scripts for the GitHub CI", "Remove deprecated travis and appveyor config files", "Create clang build using libc++", "Add packaging step to github actions", "Setup MSVC Windows build for debug and release", "Update build instructions in readme", "Update the test package scripts for the GitHub CI", "Comment out clang-cl windows build", "Setup the laptop for development", "Windows package is broken", "Rewrite CTest script to use github actions".

Improvements to vcpkg setup

As part of the move to GitHub actions, we decided to greatly simplify our builds. In the past we had relied on a massive hack: we built all our third party dependencies manually and placed them, as a zip, on DropBox. This worked, but updating these dependencies was a major pain and so done very infrequently. In particular, we often forgot the details on how exactly those builds had been done and where all of the libraries had been sourced. As part of the research on GitHub actions, it became apparent that the cool kids had moved en masse to using vcpkg within the CI itself , and employed a set of supporting actions to make this use case much easier than before (building on the fly, caching, and so on). This new setup is highly advantageous because it makes updating third party dependencies a mere git submodule update, like so:

$ cd vcpkg/

$ git pull origin master

remote: Enumerating objects: 18250, done.

remote: Counting objects: 100% (7805/7805), done.

remote: Compressing objects: 100% (129/129), done.

remote: Total 18250 (delta 7720), reused 7711 (delta 7676), pack-reused 10445

Receiving objects: 100% (18250/18250), 9.05 MiB | 3.07 MiB/s, done.

Resolving deltas: 100% (12995/12995), completed with 1774 local objects.

...

$ cmake --build --preset linux-clang-release --target rat

While we were there, we took this opportunity and simplified all dependencies; sadly this meant removing our use of ODB since v2.5 is not available on vcpkg (see above). The feature is still present on the code generator, but one wonders if it should be completely deprecated next release when we get to the C++ reference product. Boost.DI was also another victim of this clean up. At any rate, the new setup is a productivity improvement of several orders of magnitude, since in the past we had to have our own OSX and Windows Physicals/VM's to build the dependencies whereas now we rely solely on vcpkg. For an idea of just how painful things used to be, just have a peek at "Updating Boost Version" in v1.0.19

Related Stories: "Update vcpkg to latest", "Remove third-party dependencies outside vcpkg", "Update nightly builds to use new vcpkg setup".

Improvements to CTest and CMake scripts

Closely related to the work on vcpkg and GitHub actions was a number of fundamental changes to our CMake and CTest setup. First and foremost, we like to point out the move to use CMake Presets. This is a great little feature in CMake that enables packing all of the CMake configuration into a preset file, and removes the need for the good old build.* scripts that had littered the build directory. It also means that building from Emacs - as well as other editors and IDEs which support presets, of course - is now really easy. In the past we had to supply a number o environment variables and other such incantations to the build script in order to setup the required environment. With presets all of that is encapsulated into a self comntained CMakePresets.json file, making the build much simpler:

cmake --preset linux-clang-release

cmake --build --preset linux-clang-release

You can also list the available presets very easily:

$ cmake --list-presets

Available configure presets:

"linux-clang-debug" - Linux clang debug

"linux-clang-release" - Linux clang release

"linux-gcc-debug" - Linux gcc debug

"linux-gcc-release" - Linux gcc release

"windows-msvc-debug" - Windows x64 Debug

"windows-msvc-release" - Windows x64 Release

"windows-msvc-clang-cl-debug" - Windows x64 Debug

"windows-msvc-clang-cl-release" - Windows x64 Release

"macos-clang-debug" - Mac OSX Debug

"macos-clang-release" - Mac OSX Release

This ensures a high degree of regularity of Dogen builds if you wish to stick to the defaults, which is the case for almost all our use cases. The exception had been nightlies, but as we explain elsewhere, with this release we also managed to make those builds conform to the same overall approach.

The release also saw a general clean up of the CTest script, now called CTest.cmake, which supports both continuous as well as nighly builds with minimal complexity. Sadly, the integration of presets with CTest is not exactly perfect, so it took us a fair amount of time to work out how to best get these two to talk to each other.

Related Stories: "Rewrite CTest script to use github actions", "Assorted improvements to CMake files"

Smaller stories

In addition to the big ticket items, a number of smaller stories was also worked om.

- Fix broken org-mode tests: due to the ad-hoc nature of our org-mode parser, we keep finding weird and wonderful problems with code generation, mainly related to the introduction of spurious whitelines. This sprint we fixed yet another group of these issues. Going forward, the right solution is to remove org-mode support from within Dogen, since we can't find a third party library that is rock solid, and add instead an XMI-based codec. We can then extend Emacs to generate this XMI output. There are downsides to this approach - for example, the loss of support to non-Emacs based editors such as VI and VS Code.

- Generate doxygen docs and add to site: Every so often we update manually the Doxygen docs available on our site. This time we also added a badge linking back to the documentation. Once the main bulk of work is finished with GitHub actions, we need to consider adding an action to regenerate documentation.

- Update build instructions in README*: This sprint saw a raft of updates to our REAMDE file, mostly connected with the end of the tesis as well as all the build changes related to GitHub actions.

- Replace Dia IDs with UUIDs: Now that we have removed Dia models from within Dogen, it seemed appropriate to get rid of some of its vestiges such as Object IDs based on Dia object names. This is yet another small step towards making the org-mode models closer to their native representation. We also begun work on supporting proper capitalisation of org-mode headings ("Capitalise titles in models correctly"), but sadly this proved to be much more complex than expected and has since been returned to the product backlog for further analysis.

- Tests should take full generation into account: Since time immemorial, our nightly builds have been, welll, different, from regular CI builds. This is because we make use of a feature called "full generation". Full generation forces the instantiation of model elements across all facets of physical space regardless of the requested configuration within the user model. This is done so that we exercise generated code to the fullest, and also has the great benefit of valgrinding the generated tests, hopefully pointing out any leaks we may have missed. One major down side of this approach was the need to somehow "fake" the contents of the Dogen directory, to ensure the generated tests did not break. We did this via the "pristine" hack: we kept two checkouts of Dogen, and pointed the tests of the main build towards this printine directory, so that the code geneation tests did not fail. It was ugly but just about worked. That is, until we introduced CMake Presets. Then, it caused all sorts of very annoying issues. In this sprint, after the longest time of trying to extend the hack, we finally saw the obvious: the easiest way to address this issue is to extend the tests to also use full generation. This was very easy to implement and made the nightlies regular with respect to the continuous builds.

Video series of Dogen coding

This sprint we recorded a series of videos titled "MASD - Dogen Coding: Move to GitHub CI". It is somewhat more generic than the name implies, because it includes a lot of the side-tasks needed to make GitHub actions work such as removing third party dependencies, fixing CTest scripts, etc. The video series is available as a playlist, in the link below.

Video 3: Playlist for "MASD - Dogen Coding: Move to GitHub CI".

Video 3: Playlist for "MASD - Dogen Coding: Move to GitHub CI".

The next table shows the individual parts of the video series.

| Video | Description |

|---|---|

| Part 1 | In this part we start by getting all unit tests to pass. |

| Part 2 | In this video we update our vcpkg fork with the required libraries, including ODB. However, we bump into problems getting Dogen to build with the new version of ODB. |

| Part 3 | In this video we decide to remove the relational model altogether as a way to simplify the building of Dogen. It is a bittersweet decision as it took us a long time to code the relational model, but in truth it never lived up to its original promise. |

| Part 4 | In this short video we remove all uses of Boost DI. Originally, we saw Boost DI as a solution for our dependency injection needs, which are mainly rooted in the registration of M2T (Model to Text) transforms. |

| Part 5 | In this video we update vcpkg to use latest and greatest and start to make use of the new standard machinery for CMake and vcpkg integration such as CMake presets. However, ninja misbehaves at the end. |

| Part 6 | In this part we get the core of the workflow to work, and iron out a lot of the kinks across all platforms. |

| Part 7 | In this video we review the work done so far, and continue adding support for nightly builds using the new CMake infrastructure. |

| Part 8 | This video concludes the series. In it, we sort out the few remaining problems with nightly builds, by making them behave more like the regular CI builds. |

Table 2: Video series for "MASD - Dogen Coding: Move to GitHub CI".

Resourcing

At almost two years elapsed time, this sprint was characterised mainly by its irregularity, and rendered metrics such as utilisation rate completely meaningless. It would of course be an unfair comment if we stopped at that, given how much was achieved on the PhD front; alas, lines of LaTex source count not towards the engineering of software systems. Focusing solely on the engineering front and looking at the sprint as a whole, it must be classified as very productive, since it was just over 85 hours long and broadly achieved its stated mission. It is always painful to spend this much effort just to get back to where we were in terms of CI/CD during the Travis CI golden era, but it is what it is. If anything, our modernised setup is a qualitative step up in terms of functionality when compared to the previous approach, so its not all doom and gloom.

Figure 7: Cost of stories for sprint 31.

Figure 7: Cost of stories for sprint 31.

In total, we spent just over 57% working on the GitHub CI move. Of these - in fact, of the whole sprint - the most expensive story was rewriting CTest scripts, at almost 16% of total effort. We also spent a lot of time updating nightly builds to use new vcpkg setup and performing assorted improvements to CMake files (9.3% and 7.6% respectively). It was somewhat disappointing that we did not manage to touch the reference products, which are at present still CI-less. Somewhat surprisingly we still managed to spend 13.3% of the total resource ask doing real coding; some of it justified (for example, removing the database options was a requirement for the GitHub CI move because we wanted to drop ODB) and some of it more of a form of therapy given the boredom of working on IaC (Infrastructure as Code). We also clocked just over 11% on working in the MDE and MASD video series, which is more broadly classified as PhD work; since it has an application to the engineering side, it was booked against the engineering work rather than the PhD programme itself.

A final note on the whopping 18.3% consumed on agile-related work. In particular, we spent an uncharacteristically large amount of time refining our sprint and product backlogs: 10% versus the 7% of sprint 30 and the 3.5% of sprint 29. Of course, in the context of thee many months with very little coding, it does make sense that we spent a lot of time dreaming about coding, and that is what the backlogs there are for. At any rate, over 80% of the resourcing this sprint can be said to be aligned with the core mission of the sprint, so one would conclude it was well spent.

Roadmap

We've never been particularly sold on the usefulness of our roadmaps, to be fair, but perhaps for historical reasons we have grown attached to them. There is little to add from the previous sprint: the big ticket items stay unchanged, and given our day release from work for the PhD will cease soon, it is expected that our utilisation rate will start to slow down correspondingly. The roadmap remains the same, nonetheless.

Binaries

As part of the GitHub CI move, the production of binaries has changed considerably. In addition, we are not yet building binaries off of the tag workflow so these links are against the last commit of the sprint - presumably the resulting build would have been identical. For now, we have manually uploaded the binaries into the release assets.

| Operative System | Binaries |

|---|---|

| Linux Debian/Ubuntu | dogen_1.0.31_amd64-applications.deb |

| Windows | DOGEN-1.0.31-Windows-AMD64.msi |

| Mac OSX | DOGEN-1.0.31-Darwin-x86_64.dmg |

Table 3: Binary packages for Dogen.

A few important notes:

- the Linux binaries are not stripped at present and so are larger than they should be. We have an outstanding story to address this issue, but sadly CMake does not make this a trivial undertaking.

- as before, all binaries are 64-bit. For all other architectures and/or operative systems, you will need to build Dogen from source. Source downloads are available in zip or tar.gz format.

- a final note on the assets present on the release note. These are just pictures and other items needed by the release note itself. We found that referring to links on the internet is not a particularly good idea as we now have lots of 404s for older releases. Therefore, from now on, the release notes will be self contained. Assets are otherwise not used.

- we are not testing the OSX and Windows builds (e.g. validating the packages install, the binaries run, etc.). If you find any problems with them, please report an issue.

Next Sprint

The next sprint's mission will focus on mopping up GitHub CI - largely addressing the reference products, which were untouched this sprint. The remaining time will be allocated to the clean up of the physical model and meta-model, which we are very much looking forward to. It will be the first release with real coding in quite some time.

That's all for this release. Happy Modeling!

v1.0.30

3 years ago Municipal stadium in Moçamedes, Namibe, Angola. (C) 2020 Angop.

Municipal stadium in Moçamedes, Namibe, Angola. (C) 2020 Angop.

Introduction

Happy new year! The first release of the year is a bit of a bumper one: we finally managed to add support for org-mode, and transitioned all of Dogen to it. It was a mammoth effort, consuming the entirety of the holiday season, but it is refreshing to finally be able to add significant user facing features again. Alas, this is also a bit of a bitter-sweet release because we have more or less run out of coding time, and need to redirect our efforts towards writing the PhD thesis. On the plus side, the architecture is now up-to-date with the conceptual model, mostly, and the bits that aren't are fairly straightforward (famous last words). And this is nothing new; Dogen development has always oscillated between theory and practice. If you recall, a couple of years ago we had to take a nine-month coding break to learn about the theoretical underpinnings of MDE and then resumed coding on Sprint 8 for what turned out to be a 22-sprint-long marathon (pun intended), where we tried to apply all that was learned to the code base. Sprint 30 brings this long cycle to a close, and begins a new one; though, this time round, we are hoping for far swifter travels around the literature. But lets not get lost talking about the future, and focus instead on the release at hand. And what a release it was.

User visible changes

This section covers stories that affect end users, with the video providing a quick demonstration of the new features, and the sections below describing them in more detail.

Org-mode support

A target that we've been chasing for the longest time is the ability to create models using org-mode. We use org-mode (and emacs) for pretty much everything in Dogen, such time keeping and task management - it's how we manage our product and sprint backlogs, for one - and we'll soon be using it to write academic papers too. It's just an amazing tool with a great tooling ecosystem, so it seemed only natural to try and see if we could make use of it for modeling too. Now, even though we are very comfortable with org-mode, this is not a decision to be taken lightly because we've been using Dia since Dogen's inception, over eight years ago.

Figure 1: Dia diagram for a Dogen model with the introduction of colouring, Dogen v1.0.06

Figure 1: Dia diagram for a Dogen model with the introduction of colouring, Dogen v1.0.06

As much as we profoundly love Dia, the truth is we've had concerns about relying on it too much due to its somewhat sparse maintenance, with the last release happening some nine years ago. What's more pressing is that Dia relies on an old version of GTK, meaning it could get pulled from distributions at any time; we've already had a similar experience with Gnome Referencer, which wasn't at all pleasant. In addition, there are a number of "papercuts" that are mildly annoying, if livable, and which will probably not be addressed; we've curated a list of such issues, in the hope of one day fixing these problems upstream, but that day never came. The direction of travel for the maintenance is also not entirely aligned with our needs. For example, we recently saw the removal of python support in Dia - at least in the version which ships with Debian - a feature in which we relied upon heavily, and intended to do more so in the future. All of this to say that we've had a number of ongoing worries that motivated our decision to move away from Dia. However, I don't want to sound too negative here - and please don't take any of this as a criticism to Dia or its developers. Dia is an absolutely brilliant tool, and we have used it for over two decades; It is great at what it does, and we'll continue to use it for free modeling. Nonetheless, it has become increasingly clear that the directions of Dia and Dogen have started to diverge over the last few years, and we could not ignore that. I'd like to take this opportunity to give a huge thanks to all of those involved in Dia (past and present); they have certainly created an amazing tool that stood the test of time. Also, although we are moving away from Dia use in mainline Dogen, we will continue to support the Dia codec and we have tests to ensure that the current set of features will continue to work.

That's that for the rationale for moving away from Dia. But why org-mode? We came up with a nice laundry list of reasons:

- "Natural" Representation: org-mode documents are trees, with arbitrary nesting, which makes it a good candidate to represent the nesting of namespaces and classes. It's just a natural representation for structural information.

- Emacs tooling: within the org-mode document we have full access to Emacs features. For example, we have spell checkers, regular copy-and-pasting, etc. This greatly simplifies the management of models. Since we already use Emacs for everything else in the development process, this makes the process even more fluid.