ChatdollKit

ChatdollKit enables you to make your 3D model into a chatbot

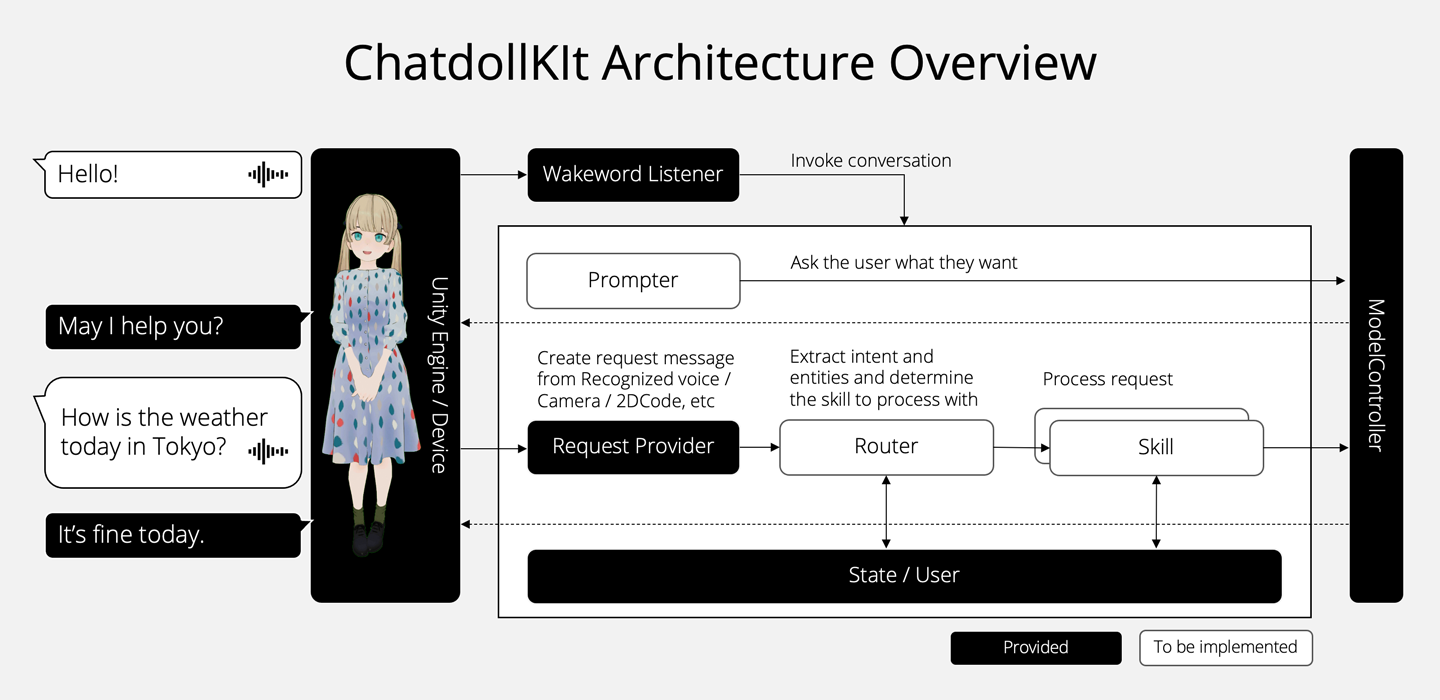

3D virtual assistant SDK that enables you to make your 3D model into a voice-enabled chatbot. 🇯🇵 A Japanese README is also available.

- 🍎 iOS App: OshaberiAI, a virtual agent app made with ChatdollKit: a perfect fusion of character creation by AI prompt engineering, customizable 3D VRM models, and your favorite voices by VOICEVOX.

- 🇬🇧 Live demo in English: Say "Hello" to start a conversation. This demo simply echoes what you say.

- 🇯🇵 Live demo in Japanese: Have an OpenAI API key ready. Say 「こんにちは」 ("Hello") to start the conversation.

✨ Features

- 3D Model
    - Speech and motion synchronization
    - Face expression control
    - Blink and lipsync

- Generative AI
    - Multiple LLMs: ChatGPT / Azure OpenAI Service, Anthropic Claude, Google Gemini Pro and others
    - Agents: Function Calling (ChatGPT / Gemini) or your prompt engineering
    - Multimodal: GPT-4V and Gemini-Pro-Vision are supported
    - Emotions: Autonomous face expression and animation

- Dialog
    - Speech-to-Text and Text-to-Speech (OpenAI, Azure, Google, Watson, VOICEVOX, VOICEROID etc)
    - Dialog state management (in other words, context or memory)
    - Intent extraction and topic routing

- I/O
    - Wakeword
    - Camera and QR Code

- Platforms
    - Windows / Mac / Linux / iOS / Android and anywhere Unity supports
    - VR / AR / WebGL / Gatebox

... and more! See ChatdollKit Documentation to learn details.

🚀 Quick start

You can learn how to set up ChatdollKit by watching this video, which runs the demo scene (including chat with ChatGPT): https://www.youtube.com/watch?v=rRtm18QSJtc

📦 Import packages

Download the latest version of ChatdollKit.unitypackage and import it into your Unity project after importing the following dependencies:

- Burst from Unity Package Manager (Window > Package Manager)

- UniTask (Ver.2.3.1)

- uLipSync (v2.6.1)

- For VRM model: UniVRM (v0.89.0) and VRM Extension

- JSON.NET: If your project doesn't have JSON.NET, add it from Package Manager > [+] > Add package from git URL... > com.unity.nuget.newtonsoft-json

- Azure Speech SDK: (Optional) Required for real-time speech recognition using a stream.

🐟 Resource preparation

Add a 3D model to the scene and adjust it as you like. Also install the resources the 3D model requires, such as shaders. In this README, I use Cygnet-chan, which you can purchase at Booth. https://booth.pm/ja/items/1870320

Then import animation clips. In this README, I use Anime Girls Idle Animations Free. I believe the pro edition is worth purchasing👍

🎁 Put ChatdollKit prefab

Add ChatdollKit/Prefabs/ChatdollKit or ChatdollKit/Prefabs/ChatdollKitVRM to the scene, and create an EventSystem to use UI components.

🐈 ModelController

Select Setup ModelController in the context menu of ModelController. If the model is not VRM, make sure after setup that the shape key for blinking is set in Blink Blend Shape Name. If it is incorrect or blank, set it manually.

💃 Animator

Select Setup Animator in the context menu of ModelController and select the folder that contains the animation clips, or its parent folder. In this case, put the animation clips in 01_Idles and 03_Others onto Base Layer for override blending, and those in 02_Layers onto Additive Layer for additive blending.

Next, open the Base Layer of the newly created AnimatorController in the folder you selected. Check the transition value for the state you want to use as the idle animation.

Lastly, set that value in Idle Animation Value on the inspector of ModelController.

🦜 DialogController

On the inspector of DialogController, set Wake Word to start a conversation (e.g. hello / こんにちは🇯🇵), Cancel Word to stop the conversation (e.g. end / おしまい🇯🇵), and Prompt Voice to ask the user for a voice request (e.g. what's up? / どうしたの?🇯🇵).

🍣 ChatdollKit

Select the speech service you use (OpenAI/Azure/Google/Watson), then set its API key and properties such as Region and BaseUrl on the inspector of ChatdollKit.

🍳 Skill

Attach Examples/Echo/Skills/EchoSkill to ChatdollKit. This skill simply echoes what the user says. Or, if you want to enjoy conversation with AI, attach the following components and set your OpenAI API key on ChatGPTService:

- ChatdollKit/Scripts/LLM/ChatGPT/ChatGPTService

- ChatdollKit/Scripts/LLM/LLMRouter

- ChatdollKit/Scripts/LLM/LLMContentSkill

🤗 Face Expression (NON VRM only)

Select Setup VRC FaceExpression Proxy in the context menu of VRC FaceExpression Proxy. This automatically creates the Neutral, Joy, Angry, Sorrow and Fun face expressions with all values set to zero, and a Blink face with the blink blend shape set to 100.0f.

You can edit shape keys by editing Face Clip Configuration directly or by capturing on inspector of VRCFaceExpressionProxy.
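To make the generated defaults concrete, here is a minimal sketch of the data the setup step is described as producing. It is plain Python for illustration only, not ChatdollKit code: the function name `default_face_clips`, the dictionary layout, and the sample blend shape names are all assumptions.

```python
from typing import Dict, List

# Expression names taken from the README text above.
EXPRESSION_NAMES = ("Neutral", "Joy", "Angry", "Sorrow", "Fun")


def default_face_clips(blend_shapes: List[str], blink_shape: str) -> Dict[str, Dict[str, float]]:
    """Build default face clips: every expression all-zero, Blink with blink shape = 100.0.

    Hypothetical helper; the real setup is done by the Unity context menu.
    """
    if blink_shape not in blend_shapes:
        raise ValueError(f"blink shape '{blink_shape}' is not one of the model's blend shapes")

    # Each expression starts with every blend shape weight at zero.
    clips = {name: {shape: 0.0 for shape in blend_shapes} for name in EXPRESSION_NAMES}

    # The Blink face only raises the blink blend shape to its maximum weight.
    blink = {shape: 0.0 for shape in blend_shapes}
    blink[blink_shape] = 100.0
    clips["Blink"] = blink
    return clips


# Sample blend shape names are placeholders, not names from any specific model.
clips = default_face_clips(["eye_close", "mouth_smile", "brow_angry"], "eye_close")
print(clips["Blink"])
```

Editing Face Clip Configuration then corresponds to changing individual weights in entries like `clips["Joy"]`.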

🥳 Run

Press the Play button in the Unity editor. The model starts with its idle animation and blinking.

- Say the word you set to Wake Word on inspector (e.g. hello / こんにちは🇯🇵)

- Your model will reply the word you set to Prompt Voice on inspector (e.g. what's up? / どうしたの?🇯🇵)

- Say something you want to echo like "Hello world!"

- Your model will reply "Hello world"
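The conversation flow in the steps above can be sketched as a tiny state machine. This is an illustrative Python model, not ChatdollKit's implementation: the class `EchoDialog`, exact-match comparison of the wake and cancel words, and staying awake until the cancel word is heard are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EchoDialog:
    """Hypothetical model of the wake word -> prompt -> echo flow."""

    wake_word: str = "hello"
    cancel_word: str = "end"
    prompt_voice: str = "what's up?"
    awake: bool = False

    def hear(self, utterance: str) -> Optional[str]:
        """Return what the model would say, or None if it stays silent."""
        text = utterance.strip()
        if not self.awake:
            # While idle, only the wake word starts a conversation.
            if text.lower() == self.wake_word:
                self.awake = True
                return self.prompt_voice
            return None
        if text.lower() == self.cancel_word:
            # The cancel word ends the conversation without a reply.
            self.awake = False
            return None
        # Echo skill: reply with what the user said.
        return text


dialog = EchoDialog()
for said in ["Good morning", "hello", "Hello world!", "end"]:
    print(repr(said), "->", repr(dialog.hear(said)))
```

In the real app, speech recognition may alter punctuation or casing before the skill sees the text, which this sketch does not model.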

🌊 Use Azure OpenAI Service

To use Azure OpenAI Service, set the following on the inspector of the ChatGPTService component:

- Endpoint URL with configurations to Chat Completion Url

  format: https://{your-resource-name}.openai.azure.com/openai/deployments/{deployment-id}/chat/completions?api-version={api-version}

- API Key to Api Key

- Set true to Is Azure

NOTE: Model on the inspector is ignored. The engine in the URL is used.
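If you want to check the value before pasting it into Chat Completion Url, the format above can be filled in with a few lines of Python. This is a minimal sketch: the helper name `build_chat_completion_url` and the sample resource, deployment and API version values are placeholders, so substitute the ones from your own Azure resource.

```python
from urllib.parse import quote


def build_chat_completion_url(resource_name: str, deployment_id: str, api_version: str) -> str:
    """Fill in the Azure OpenAI chat completions URL format shown in this section."""
    for label, value in (
        ("resource_name", resource_name),
        ("deployment_id", deployment_id),
        ("api_version", api_version),
    ):
        if not value:
            raise ValueError(f"{label} must not be empty")

    # Percent-encode the path and query parts in case they contain reserved characters.
    return (
        f"https://{resource_name}.openai.azure.com/openai/deployments/"
        f"{quote(deployment_id, safe='')}/chat/completions"
        f"?api-version={quote(api_version, safe='')}"
    )


# Placeholder values for illustration only.
print(build_chat_completion_url("my-resource", "my-gpt-deployment", "2023-05-15"))
```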

👷♀️ Build your own app

See the MultiSkills example. It is a richer application that includes:

- Dialog Routing: Router is an example of how to decide the topic the user wants to talk about

- Processing dialog: TranslateDialog is an example that shows how to process dialog

We are preparing more content on creating richer virtual assistants with ChatdollKit.
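As a rough picture of what topic routing means, the sketch below picks a topic by keyword and hands the request to the skill registered for it. It is a stand-in written in Python, not the logic of the Router in MultiSkills, which may decide topics differently: the class `KeywordRouter`, the keyword matching, and the sample skills are all assumptions.

```python
from typing import Callable, Dict, List, Tuple

Skill = Callable[[str], str]


class KeywordRouter:
    """Hypothetical router: choose a topic by keyword, then run that topic's skill."""

    def __init__(self, default_topic: str, default_skill: Skill) -> None:
        self._default_topic = default_topic
        self._skills: Dict[str, Skill] = {default_topic: default_skill}
        self._keywords: List[Tuple[str, str]] = []

    def register(self, topic: str, keywords: List[str], skill: Skill) -> None:
        """Associate keywords with a topic and the skill that handles it."""
        self._skills[topic] = skill
        for keyword in keywords:
            self._keywords.append((keyword.lower(), topic))

    def route(self, text: str) -> str:
        """Return the first topic whose keyword appears in the text, else the default."""
        lowered = text.lower()
        for keyword, topic in self._keywords:
            if keyword in lowered:
                return topic
        return self._default_topic

    def process(self, text: str) -> str:
        """Route the request and let the chosen skill build the response."""
        return self._skills[self.route(text)](text)


router = KeywordRouter("echo", lambda text: text)
router.register("translate", ["translate", "翻訳"], lambda text: f"(translate) {text}")
print(router.process("Please translate this sentence"))
print(router.process("Good morning"))
```

A production router would more likely use intent extraction or an LLM than fixed keywords. The point here is only the split between deciding the topic and processing the dialog.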

🌐 Run on WebGL

Refer to the following tips for now. We are preparing a demo for WebGL.

- It takes 5-10 minutes to build, depending on machine spec.

- Very hard to debug. The error message doesn't show the stack trace: "To use dlopen, you need to use Emscripten’s linking support, see https://github.com/kripken/emscripten/wiki/Linking"

- Built-in Async/Await doesn't work (the app stops at await) because JavaScript doesn't support threading. Use UniTask instead.

- CORS is required for HTTP requests.

- Microphone is not supported. Use ChatdollMicrophone, which is compatible with WebGL.

- Compressed audio formats like MP3 are not supported. Use WAV in TTS Loaders.

- OVRLipSync is not supported. Use uLipSync and uLipSyncWebGL instead.

- If you want to show multibyte characters in the message window, add a font that includes multibyte characters to your project and set it on the message windows.
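Because WebGL builds need WAV rather than MP3, it can help to verify what a TTS endpoint actually returns before wiring it into a loader. The following Python sketch checks for the RIFF/WAVE header. The function names are hypothetical, and the check only inspects the container header, not whether the audio inside is playable.

```python
import io
import wave


def looks_like_wav(data: bytes) -> bool:
    """Return True if the bytes start with a RIFF container marked as WAVE."""
    return len(data) >= 12 and data[:4] == b"RIFF" and data[8:12] == b"WAVE"


def make_silent_wav(duration_sec: float = 0.1, sample_rate: int = 16000) -> bytes:
    """Create a short mono 16-bit silent WAV in memory, used here as test input."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)  # 2 bytes per sample = 16-bit PCM
        wav_file.setframerate(sample_rate)
        wav_file.writeframes(b"\x00\x00" * int(duration_sec * sample_rate))
    return buffer.getvalue()


print(looks_like_wav(make_silent_wav()))
print(looks_like_wav(b"ID3\x03\x00\x00\x00\x00\x00\x00\x00\x00"))  # typical start of an MP3 with an ID3 tag
```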