Transformers

🤗 Transformers: State-of-the-art Machine Learning for PyTorch, TensorFlow, and JAX.

v4.39.3

2 weeks ago

The AWQ issue persisted, and there was a regression reported with beam search and input embeddings.

Changes

- Fix BC for AWQ quant #29965

- generate fix breaking change for patch #29976

v4.39.2

3 weeks ago

Series of fixes for backwards compatibility (AutoAWQ and other quantization libraries, imports from trainer_pt_utils) and functionality (LLaMA tokenizer conversion).

- Safe import of LRScheduler #29919

- [`BC`] Fix BC for other libraries #29934

- [`LlamaSlowConverter`] Slow to Fast better support #29797

v4.39.1

4 weeks ago

Patch release to fix some breaking changes to the LLaVA model, with fixes/cleanup for Cohere & Gemma and a broken doctest.

- Correct llava mask & fix missing setter for `vocab_size` #29389

- [`cleanup`] vestiges of causal mask #29806

- [`SuperPoint`] Fix doc example (https://github.com/huggingface/transformers/pull/29816)

v4.39.0

4 weeks ago

🚨 VRAM consumption 🚨

The Llama, Cohere and Gemma models no longer cache the triangular causal mask unless static cache is used. This was reverted by #29753, which fixes the BC issues w.r.t. speed and memory consumption while still supporting compile and static cache. Small note: fx is not supported for these models yet; a patch will be brought very soon!

New model addition

Cohere open-source model

Command-R is a generative model optimized for long context tasks such as retrieval augmented generation (RAG) and using external APIs and tools. It is designed to work in concert with Cohere's industry-leading Embed and Rerank models to provide best-in-class integration for RAG applications and excel at enterprise use cases. As a model built for companies to implement at scale, Command-R boasts:

- Strong accuracy on RAG and Tool Use

- Low latency, and high throughput

- Longer 128k context and lower pricing

- Strong capabilities across 10 key languages

- Model weights available on HuggingFace for research and evaluation

- Cohere Model Release by @saurabhdash2512 in #29622

LLaVA-NeXT (llava v1.6)

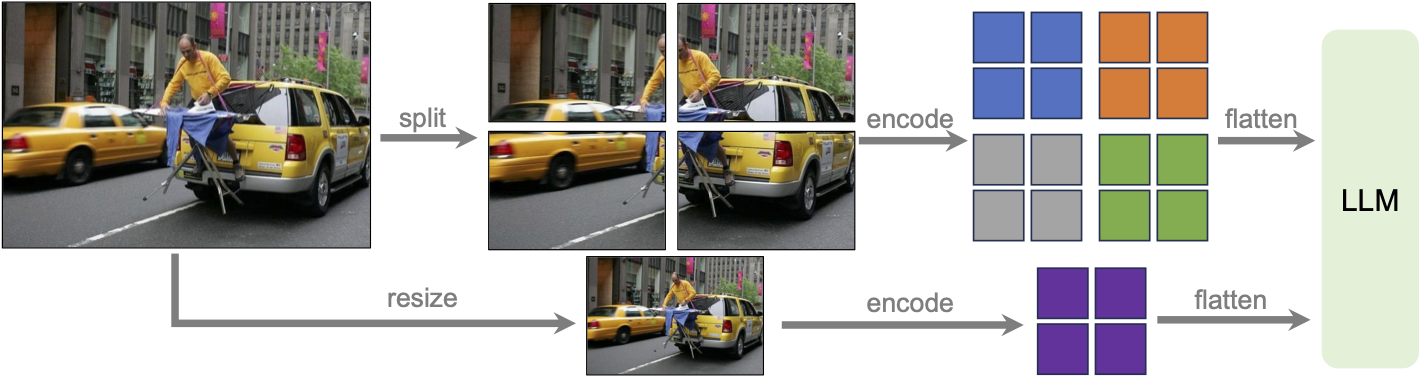

LLaVA-NeXT is the next version of LLaVA, with better support for non-padded images and improved reasoning, OCR, and world knowledge. LLaVA-NeXT even exceeds Gemini Pro on several benchmarks.

Compared with LLaVA-1.5, LLaVA-NeXT has several improvements:

- Increasing the input image resolution to 4x more pixels. This allows it to grasp more visual details. It supports three aspect ratios, up to 672x672, 336x1344, 1344x336 resolution.

- Better visual reasoning and OCR capability with an improved visual instruction tuning data mixture.

- Better visual conversation for more scenarios, covering different applications.

- Better world knowledge and logical reasoning.

- Along with performance improvements, LLaVA-NeXT maintains the minimalist design and data efficiency of LLaVA-1.5. It re-uses the pretrained connector of LLaVA-1.5, and still uses less than 1M visual instruction tuning samples. The largest 34B variant finishes training in ~1 day with 32 A100s.

LLaVa-NeXT incorporates a higher input resolution by encoding various patches of the input image. Taken from the original paper.
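As a quick sanity check of the "4x more pixels" figure, the sketch below compares each supported resolution with a 336x336 base image. The 336-pixel base side is an assumption (it is the input size commonly used with LLaVA-1.5) and is not stated in these notes.

```python
# Assumption: the LLaVA-1.5 baseline processes a single 336x336 image.
BASE_SIDE = 336
base_pixels = BASE_SIDE * BASE_SIDE

# Resolutions listed in the release notes above.
resolutions = [(672, 672), (336, 1344), (1344, 336)]

pixel_ratios = {}
for width, height in resolutions:
    # Number of base-sized tiles that fit in this resolution.
    tiles = (width // BASE_SIDE) * (height // BASE_SIDE)
    pixel_ratios[(width, height)] = (width * height) / base_pixels
    print(f"{width}x{height}: {tiles} tiles, "
          f"{pixel_ratios[(width, height)]:.0f}x the base pixel count")
```

Under that assumption, all three aspect ratios cover the same pixel budget and differ only in how the tiles are arranged.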

MusicGen Melody

The MusicGen Melody model was proposed in Simple and Controllable Music Generation by Jade Copet, Felix Kreuk, Itai Gat, Tal Remez, David Kant, Gabriel Synnaeve, Yossi Adi and Alexandre Défossez.

MusicGen Melody is a single stage auto-regressive Transformer model capable of generating high-quality music samples conditioned on text descriptions or audio prompts. The text descriptions are passed through a frozen text encoder model to obtain a sequence of hidden-state representations. MusicGen is then trained to predict discrete audio tokens, or audio codes, conditioned on these hidden-states. These audio tokens are then decoded using an audio compression model, such as EnCodec, to recover the audio waveform.

Through an efficient token interleaving pattern, MusicGen does not require a self-supervised semantic representation of the text/audio prompts, thus eliminating the need to cascade multiple models to predict a set of codebooks (e.g. hierarchically or upsampling). Instead, it is able to generate all the codebooks in a single forward pass.

- Add MusicGen Melody by @ylacombe in #28819
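The interleaving idea can be illustrated with a toy example. The sketch below assumes the "delay" pattern, in which codebook k is shifted right by k steps so that one decoding step emits one token per codebook. The function name and the `PAD` placeholder are hypothetical, and this is not the library's implementation.

```python
PAD = -1  # hypothetical placeholder for positions that hold no token yet


def apply_delay_pattern(codes):
    """Shift codebook k right by k steps.

    codes[k][t] is the token of codebook k at audio frame t.
    Each returned row has length num_frames + num_codebooks - 1.
    """
    num_codebooks = len(codes)
    num_frames = len(codes[0])
    total_steps = num_frames + num_codebooks - 1
    delayed = []
    for k, row in enumerate(codes):
        right_pad = total_steps - num_frames - k
        delayed.append([PAD] * k + list(row) + [PAD] * right_pad)
    return delayed


# Four codebooks, three audio frames (toy token ids).
codes = [[10, 11, 12], [20, 21, 22], [30, 31, 32], [40, 41, 42]]
delayed = apply_delay_pattern(codes)
for row in delayed:
    print(row)
```

Reading the result column by column gives what a single decoding step would produce: at step 1, for instance, codebook 0 emits frame 1 while codebook 1 emits frame 0.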

PvT-v2

The PVTv2 model was proposed in PVT v2: Improved Baselines with Pyramid Vision Transformer by Wenhai Wang, Enze Xie, Xiang Li, Deng-Ping Fan, Kaitao Song, Ding Liang, Tong Lu, Ping Luo, and Ling Shao. As an improved variant of PVT, it eschews position embeddings, relying instead on positional information encoded through zero-padding and overlapping patch embeddings. This lack of reliance on position embeddings simplifies the architecture, and enables running inference at any resolution without needing to interpolate them.

- Add PvT-v2 Model by @FoamoftheSea in #26812

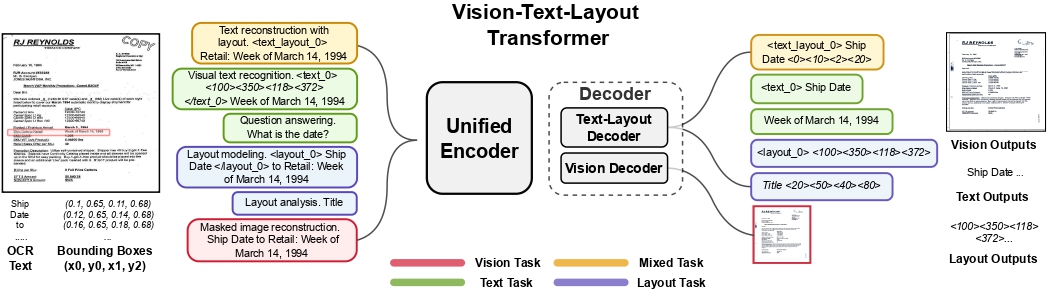

UDOP

The UDOP model was proposed in Unifying Vision, Text, and Layout for Universal Document Processing by Zineng Tang, Ziyi Yang, Guoxin Wang, Yuwei Fang, Yang Liu, Chenguang Zhu, Michael Zeng, Cha Zhang, Mohit Bansal. UDOP adopts an encoder-decoder Transformer architecture based on T5 for document AI tasks like document image classification, document parsing and document visual question answering.

UDOP architecture. Taken from the original paper.

- Add UDOP by @NielsRogge in #22940

Mamba

Mamba is a new architecture paradigm based on state-space models rather than on attention, as in Transformer models. The checkpoints are compatible with the original ones.

- [`Add Mamba`] Adds support for the `Mamba` models by @ArthurZucker in #28094

StarCoder2

StarCoder2 is a family of open LLMs for code and comes in 3 different sizes with 3B, 7B and 15B parameters. The flagship StarCoder2-15B model is trained on over 4 trillion tokens and 600+ programming languages from The Stack v2. All models use Grouped Query Attention, a context window of 16,384 tokens with a sliding window attention of 4,096 tokens, and were trained using the Fill-in-the-Middle objective.

- Starcoder2 model - bis by @RaymondLi0 in #29215
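As an illustration of how a sliding window restricts causal attention, the sketch below builds a boolean mask for a toy sequence. The function name is hypothetical, the sizes are shrunk from 16,384 / 4,096 to keep the output readable, and the exact boundary convention (whether the window counts the current token) is an assumption that may differ from the model's implementation.

```python
def sliding_window_causal_mask(seq_len, window):
    """mask[i][j] is True when query position i may attend to key position j.

    Assumed convention: a query sees itself and the (window - 1) tokens before it.
    """
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


mask = sliding_window_causal_mask(seq_len=6, window=3)
for row in mask:
    # "x" marks an allowed position, "." a masked one.
    print("".join("x" if allowed else "." for allowed in row))
```

Early rows look like an ordinary causal mask; once the position index exceeds the window size, the oldest tokens drop out of view.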

SegGPT

The SegGPT model was proposed in SegGPT: Segmenting Everything In Context by Xinlong Wang, Xiaosong Zhang, Yue Cao, Wen Wang, Chunhua Shen, Tiejun Huang. SegGPT employs a decoder-only Transformer that can generate a segmentation mask given an input image, a prompt image and its corresponding prompt mask. The model achieves remarkable one-shot results with 56.1 mIoU on COCO-20 and 85.6 mIoU on FSS-1000.

- Adding SegGPT by @EduardoPach in #27735

GaLore optimizer

With GaLore, you can pre-train large models on consumer-grade hardware, making LLM pre-training much more accessible to anyone in the community.

Our approach reduces memory usage by up to 65.5% in optimizer states while maintaining both efficiency and performance for pre-training on LLaMA 1B and 7B architectures with C4 dataset with up to 19.7B tokens, and on fine-tuning RoBERTa on GLUE tasks. Our 8-bit GaLore further reduces optimizer memory by up to 82.5% and total training memory by 63.3%, compared to a BF16 baseline. Notably, we demonstrate, for the first time, the feasibility of pre-training a 7B model on consumer GPUs with 24GB memory (e.g., NVIDIA RTX 4090) without model parallel, checkpointing, or offloading strategies.

GaLore is based on low-rank approximation of the gradients and can be used out of the box for any model.
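To give a rough sense of where the optimizer-state savings come from, the sketch below counts Adam state elements for a single weight matrix with and without a rank-r projection. It assumes the accounting described in the GaLore paper (two Adam moments kept in the projected r x n space, plus an m x r projection matrix). The layer shape and rank are hypothetical, and the numbers are illustrative rather than a reproduction of the reported results.

```python
def adam_state_elements(m, n):
    """Full-rank Adam keeps two moment tensors with the weight's shape (m x n)."""
    return 2 * m * n


def galore_state_elements(m, n, rank):
    """Assumed GaLore accounting: projection (m x r) plus two moments (r x n)."""
    return m * rank + 2 * rank * n


m, n, rank = 4096, 4096, 128  # hypothetical layer shape and projection rank
full = adam_state_elements(m, n)
low_rank = galore_state_elements(m, n, rank)

print(f"full-rank Adam states: {full:,} elements")
print(f"GaLore states:         {low_rank:,} elements")
print(f"fraction of full:      {low_rank / full:.3f}")
```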

Below is a simple snippet that demonstrates how to pre-train mistralai/Mistral-7B-v0.1 on imdb:

```python
import torch
import datasets
from transformers import TrainingArguments, AutoConfig, AutoTokenizer, AutoModelForCausalLM
import trl

train_dataset = datasets.load_dataset('imdb', split='train')

args = TrainingArguments(
    output_dir="./test-galore",
    max_steps=100,
    per_device_train_batch_size=2,
    optim="galore_adamw",
    optim_target_modules=["attn", "mlp"]
)

model_id = "mistralai/Mistral-7B-v0.1"

config = AutoConfig.from_pretrained(model_id)

tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_config(config).to(0)

trainer = trl.SFTTrainer(
    model=model,
    args=args,
    train_dataset=train_dataset,
    dataset_text_field='text',
    max_seq_length=512,
)

trainer.train()
```

Quantization

Quanto integration

Quanto has been integrated with transformers! You can apply simple quantization algorithms with a few lines of code and only tiny changes. Quanto is also compatible with `torch.compile`.

Check out the announcement blogpost for more details.

- [Quantization] Quanto quantizer by @SunMarc in #29023

Exllama 🤝 AWQ

Exllama kernels can now be combined with AWQ for faster AWQ inference. Check out the relevant documentation section for more details on how to use Exllama + AWQ.

- Exllama kernels support for AWQ models by @IlyasMoutawwakil in #28634

MLX Support

Allow models saved or fine-tuned with Apple’s MLX framework to be loaded in transformers (as long as the model parameters use the same names), and improve tensor interoperability. This leverages MLX's adoption of safetensors as their checkpoint format.

- Add mlx support to BatchEncoding.convert_to_tensors by @Y4hL in #29406

- Add support for metadata format MLX by @alexweberk in #29335

- Typo in mlx tensor support by @pcuenca in #29509

- Experimental loading of MLX files by @pcuenca in #29511

Highlighted improvements

Notable memory reduction in Gemma/LLaMa by changing the causal mask buffer type from int64 to boolean.

- Use `torch.bool` instead of `torch.int64` for non-persistant causal mask buffer by @fxmarty in #29241
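The size of that saving follows from element width alone: an int64 element takes 8 bytes and a bool element takes 1. The sketch below works through the arithmetic for a square mask. The sequence length is a hypothetical value chosen for illustration, and the assumption that the buffer is a full seq_len x seq_len matrix is not taken from these notes.

```python
def causal_mask_bytes(seq_len, bytes_per_element):
    """Memory of a dense seq_len x seq_len mask buffer, in bytes."""
    return seq_len * seq_len * bytes_per_element


SEQ_LEN = 4096  # hypothetical maximum sequence length
MIB = 1024 * 1024

int64_bytes = causal_mask_bytes(SEQ_LEN, 8)  # int64: 8 bytes per element
bool_bytes = causal_mask_bytes(SEQ_LEN, 1)   # bool: 1 byte per element

print(f"int64 mask: {int64_bytes / MIB:.0f} MiB")
print(f"bool mask:  {bool_bytes / MIB:.0f} MiB")
print(f"reduction:  {int64_bytes // bool_bytes}x")
```

Because the buffer grows quadratically with sequence length, the absolute saving becomes larger for models with longer maximum context.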

Remote code improvements

- Allow remote code repo names to contain "." by @Rocketknight1 in #29175

- simplify get_class_in_module and fix for paths containing a dot by @cebtenzzre in #29262

Breaking changes

The PRs below introduced slightly breaking changes that we believed were necessary for the repository; if these seem to impact your usage of transformers, we recommend checking out the PR description to get more insight into how to leverage the new behavior.

- 🚨🚨[Whisper Tok] Update integration test by @sanchit-gandhi in #29368

- 🚨 Fully revert atomic checkpointing 🚨 by @muellerzr in #29370

- [BC 4.37 -> 4.38] for Llama family, memory and speed #29753 (causal mask is no longer a registered buffer)

Fixes and improvements

- FIX [`Gemma`] Fix bad rebase with transformers main by @younesbelkada in #29170

- Add training version check for AQLM quantizer. by @BlackSamorez in #29142

- [Gemma] Fix eager attention by @sanchit-gandhi in #29187

- [Mistral, Mixtral] Improve docs by @NielsRogge in #29084

- Fix `torch.compile` with `fullgraph=True` when `attention_mask` input is used by @fxmarty in #29211

- fix(mlflow): check mlflow version to use the synchronous flag by @cchen-dialpad in #29195

- Fix missing translation in README_ru by @strikoder in #29054

- Improve _update_causal_mask performance by @alessandropalla in #29210

- [`Doc`] update model doc qwen2 by @ArthurZucker in #29238

- Use torch 2.2 for daily CI (model tests) by @ydshieh in #29208

- Cache `is_vision_available` result by @bmuskalla in #29280

- Use `DS_DISABLE_NINJA=1` by @ydshieh in #29290

- Add `non_device_test` pytest mark to filter out non-device tests by @fxmarty in #29213

- Add feature extraction mapping for automatic metadata update by @merveenoyan in #28944

- Generate: v4.38 removals and related updates by @gante in #29171

- Track each row separately for stopping criteria by @zucchini-nlp in #29116

- [docs] Spanish translation of tasks_explained.md by @aaronjimv in #29224

- [i18n-zh] Translated torchscript.md into Chinese by @windsonsea in #29234

- 🌐 [i18n-ZH] Translate chat_templating.md into Chinese by @shibing624 in #28790

- [i18n-vi] Translate README.md to Vietnamese by @hoangsvit in #29229

- [i18n-zh] Translated task/asr.md into Chinese by @windsonsea in #29233

- Fixed Deformable Detr typo when loading cuda kernels for MSDA by @EduardoPach in #29294

- GenerationConfig validate both constraints and force_words_ids by @FredericOdermatt in #29163

- Add generate kwargs to VQA pipeline by @regisss in #29134

- Cleaner Cache `dtype` and `device` extraction for CUDA graph generation for quantizers compatibility by @BlackSamorez in #29079

- Image Feature Extraction docs by @merveenoyan in #28973

- Fix `attn_implementation` documentation by @fxmarty in #29295

- [tests] enable benchmark unit tests on XPU by @faaany in #29284

- Use torch 2.2 for deepspeed CI by @ydshieh in #29246

- Add compatibility with skip_memory_metrics for mps device by @SunMarc in #29264

- Token level timestamps for long-form generation in Whisper by @zucchini-nlp in #29148

- Fix a few typos in `GenerationMixin`'s docstring by @sadra-barikbin in #29277

- [i18n-zh] Translate fsdp.md into Chinese by @windsonsea in #29305

- FIX [`Gemma/CI`] Make sure our runners have access to the model by @younesbelkada in #29242

- Remove numpy usage from owlvit by @fxmarty in #29326

- [`require_read_token`] fix typo by @ArthurZucker in #29345

- [`T5 and Llama Tokenizer`] remove warning by @ArthurZucker in #29346

- [`Llama ROPE`] Fix torch export but also slow downs in forward by @ArthurZucker in #29198

- Disable Mixtral `output_router_logits` during inference by @LeonardoEmili in #29249

- Idefics: generate fix by @gante in #29320

- RoPE loses precision for Llama / Gemma + Gemma logits.float() by @danielhanchen in #29285

- check if position_ids exists before using it by @jiqing-feng in #29306

- [CI] Quantization workflow by @SunMarc in #29046

- Better SDPA unmasking implementation by @fxmarty in #29318

- [i18n-zh] Sync source/zh/index.md by @windsonsea in #29331

- FIX [`CI/starcoder2`] Change starcoder2 path to correct one for slow tests by @younesbelkada in #29359

- FIX [`CI`]: Fix failing tests for peft integration by @younesbelkada in #29330

- FIX [`CI`] `require_read_token` in the llama FA2 test by @younesbelkada in #29361

- Avoid using uncessary `get_values(MODEL_MAPPING)` by @ydshieh in #29362

- Patch YOLOS and others by @NielsRogge in #29353

- Fix @require_read_token in tests by @Wauplin in #29367

- Expose `offload_buffers` parameter of `accelerate` to `PreTrainedModel.from_pretrained` method by @notsyncing in #28755

- Fix Base Model Name of LlamaForQuestionAnswering by @lenglaender in #29258

- FIX [`quantization/ESM`] Fix ESM 8bit / 4bit with bitsandbytes by @younesbelkada in #29329

- [`Llama + AWQ`] fix `prepare_inputs_for_generation` 🫠 by @ArthurZucker in #29381

- [`YOLOS`] Fix - return padded annotations by @amyeroberts in #29300

- Support subfolder with `AutoProcessor` by @JingyaHuang in #29169

- Fix llama + gemma accelete tests by @SunMarc in #29380

- Fix deprecated arg issue by @muellerzr in #29372

- Correct zero division error in inverse sqrt scheduler by @DavidAfonsoValente in #28982

- [tests] enable automatic speech recognition pipeline tests on XPU by @faaany in #29308

- update path to hub files in the error message by @poedator in #29369

- [Mixtral] Fixes attention masking in the loss by @DesmonDay in #29363

- Workaround for #27758 to avoid ZeroDivisionError by @tleyden in #28756

- Convert SlimSAM checkpoints by @NielsRogge in #28379

- Fix: Fixed the previous tracking URI setting logic to prevent clashes with original MLflow code. by @seanswyi in #29096

- Fix OneFormer `post_process_instance_segmentation` for panoptic tasks by @nickthegroot in #29304

- Fix grad_norm unserializable tensor log failure by @svenschultze in #29212

- Avoid edge case in audio utils by @ylacombe in #28836

- DeformableDETR support bfloat16 by @DonggeunYu in #29232

- [Docs] Spanish Translation -Torchscript md & Trainer md by @njackman-2344 in #29310

- FIX [`Generation`] Fix some issues when running the MaxLength criteria on CPU by @younesbelkada in #29317

- Fix max length for BLIP generation by @zucchini-nlp in #29296

- [docs] Update starcoder2 paper link by @xenova in #29418

- [tests] enable test_pipeline_accelerate_top_p on XPU by @faaany in #29309

- [`UdopTokenizer`] Fix post merge imports by @ArthurZucker in #29451

- more fix by @ArthurZucker (direct commit on main)

- Revert-commit 0d52f9f582efb82a12e8d9162b43a01b1aa0200f by @ArthurZucker in #29455

- [`Udop imports`] Processor tests were not run. by @ArthurZucker in #29456

- Generate: inner decoding methods are no longer public by @gante in #29437

- Fix bug with passing capture_* args to neptune callback by @AleksanderWWW in #29041

- Update pytest `import_path` location by @loadams in #29154

- Automatic safetensors conversion when lacking these files by @LysandreJik in #29390

- [i18n-zh] Translate add_new_pipeline.md into Chinese by @windsonsea in #29432

- 🌐 [i18n-KO] Translated generation_strategies.md to Korean by @AI4Harmony in #29086

- [FIX] `offload_weight()` takes from 3 to 4 positional arguments but 5 were given by @faaany in #29457

- [`Docs/Awq`] Add docs on exllamav2 + AWQ by @younesbelkada in #29474

- [`docs`] Add starcoder2 docs by @younesbelkada in #29454

- Fix TrainingArguments regression with torch <2.0.0 for dataloader_prefetch_factor by @ringohoffman in #29447

- Generate: add tests for caches with `pad_to_multiple_of` by @gante in #29462

- Generate: get generation mode from the generation config instance 🧼 by @gante in #29441

- Avoid dummy token in PLD to optimize performance by @ofirzaf in #29445

- Fix test failure on DeepSpeed by @muellerzr in #29444

- Generate: torch.compile-ready generation config preparation by @gante in #29443

- added the max_matching_ngram_size to GenerationConfig by @mosheber in #29131

- Fix `TextGenerationPipeline.__call__` docstring by @alvarobartt in #29491

- Substantially reduce memory usage in _update_causal_mask for large batches by using .expand instead of .repeat [needs tests+sanity check] by @nqgl in #29413

- Fix: Disable torch.autocast in RotaryEmbedding of Gemma and LLaMa for MPS device by @currybab in #29439

- Enable BLIP for auto VQA by @regisss in #29499

- v4.39 deprecations 🧼 by @gante in #29492

- Revert "Automatic safetensors conversion when lacking these files by @LysandreJik in #2…

- fix: Avoid error when fsdp_config is missing xla_fsdp_v2 by @ashokponkumar in #29480

- Flava multimodal add attention mask by @zucchini-nlp in #29446

- test_generation_config_is_loaded_with_model - fall back to pytorch model for now by @amyeroberts in #29521

- Set `inputs` as kwarg in `TextClassificationPipeline` by @alvarobartt in #29495

- Fix `VisionEncoderDecoder` Positional Arg by @nickthegroot in #29497

- Generate: left-padding test, revisited by @gante in #29515

- [tests] add the missing `require_sacremoses` decorator by @faaany in #29504

- fix image-to-text batch incorrect output issue by @sywangyi in #29342

- Typo fix in error message by @clefourrier in #29535

- [tests] use `torch_device` instead of `auto` for model testing by @faaany in #29531

- StableLM: Fix dropout argument type error by @liangjs in #29236

- Make sliding window size inclusive in eager attention by @jonatanklosko in #29519

- fix typos in FSDP config parsing logic in `TrainingArguments` by @yundai424 in #29189

- Fix WhisperNoSpeechDetection when input is full silence by @ylacombe in #29065

- [tests] use the correct `n_gpu` in `TrainerIntegrationTest::test_train_and_eval_dataloaders` for XPU by @faaany in #29307

- Fix eval thread fork bomb by @muellerzr in #29538

- feat: use `warning_advice` for tensorflow warning by @winstxnhdw in #29540

- [`Mamba doc`] Post merge updates by @ArthurZucker in #29472

- [`Docs`] fixed minor typo by @j-gc in #29555

- Add Fill-in-the-middle training objective example - PyTorch by @tanaymeh in #27464

- Bark model Flash Attention 2 Enabling to pass on check_device_map parameter to super() by @damithsenanayake in #29357

- Make torch xla available on GPU by @yitongh in #29334

- [Docs] Fix FastSpeech2Conformer model doc links by @khipp in #29574

- Don't use a subset in test fetcher if on `main` branch by @ydshieh in #28816

- fix error: TypeError: Object of type Tensor is not JSON serializable … by @yuanzhoulvpi2017 in #29568

- Add missing localized READMEs to the copies check by @khipp in #29575

- Fixed broken link by @amritgupta98 in #29558

- Tiny improvement for doc by @fzyzcjy in #29581

- Fix Fuyu doc typos by @zucchini-nlp in #29601

- Fix minor typo: softare => software by @DriesVerachtert in #29602

- Stop passing None to compile() in TF examples by @Rocketknight1 in #29597

- Fix typo (determine) by @koayon in #29606

- Implemented add_pooling_layer arg to TFBertModel by @tomigee in #29603

- Update legacy Repository usage in various example files by @Hvanderwilk in #29085

- Set env var to hold Keras at Keras 2 by @Rocketknight1 in #29598

- Update flava tests by @ydshieh in #29611

- Fix typo ; Update quantization.md by @furkanakkurt1335 in #29615

- Add tests for batching support by @zucchini-nlp in #29297

- Fix: handle logging of scalars in Weights & Biases summary by @parambharat in #29612

- Examples: check `max_position_embeddings` in the translation example by @gante in #29600

- [`Gemma`] Supports converting directly in half-precision by @younesbelkada in #29529

- [Flash Attention 2] Add flash attention 2 for GPT-J by @bytebarde in #28295

- Core: Fix copies on main by @younesbelkada in #29624

- [Whisper] Deprecate forced ids for v4.39 by @sanchit-gandhi in #29485

- Warn about tool use by @LysandreJik in #29628

- Adds pretrained IDs directly in the tests by @LysandreJik in #29534

- [generate] deprecate forced ids processor by @sanchit-gandhi in #29487

- Fix minor typo: infenrece => inference by @DriesVerachtert in #29621

- [`MaskFormer`, `Mask2Former`] Use einsum where possible by @amyeroberts in #29544

- Llama: allow custom 4d masks by @gante in #29618

- [PyTorch/XLA] Fix extra TPU compilations introduced by recent changes by @alanwaketan in #29158

- [docs] Spanish translate chat_templating.md & yml addition by @njackman-2344 in #29559

- Add support for FSDP+QLoRA and DeepSpeed ZeRO3+QLoRA by @pacman100 in #29587

- [`Mask2Former`] Move normalization for numerical stability by @amyeroberts in #29542

- [tests] make `test_trainer_log_level_replica` to run on accelerators with more than 2 devices by @faaany in #29609

- Refactor TFP call to just sigmoid() by @Rocketknight1 in #29641

- Fix batching tests for new models (Mamba and SegGPT) by @zucchini-nlp in #29633

- Fix `multi_gpu_data_parallel_forward` for `MusicgenTest` by @ydshieh in #29632

- [docs] Remove broken ChatML format link from chat_templating.md by @aaronjimv in #29643

- Add newly added PVTv2 model to all README files. by @robinverduijn in #29647

- [`PEFT`] Fix `save_pretrained` to make sure adapters weights are also saved on TPU by @shub-kris in #29388

- Fix TPU checkpointing inside Trainer by @shub-kris in #29657

- Add `dataset_revision` argument to `RagConfig` by @ydshieh in #29610

- Fix PVT v2 tests by @ydshieh in #29660

- Generate: handle `cache_position` update in `generate` by @gante in #29467

- Allow apply_chat_template to pass kwargs to the template and support a dict of templates by @Rocketknight1 in #29658

- Inaccurate code example within inline code-documentation by @MysteryManav in #29661

- Extend import utils to cover "editable" torch versions by @bhack in #29000

- Trainer: fail early in the presence of an unsavable `generation_config` by @gante in #29675

- Pipeline: use tokenizer pad token at generation time if the model pad token is unset. by @gante in #29614

- [tests] remove deprecated tests for model loading by @faaany in #29450

- Fix AutoformerForPrediction example code by @m-torhan in #29639

- [tests] ensure device-required software is available in the testing environment before testing by @faaany in #29477

- Fix wrong condition used in `filter_models` by @ydshieh in #29673

- fix: typos by @testwill in #29653

- Rename `glue` to `nyu-mll/glue` by @lhoestq in #29679

- Generate: replace breaks by a loop condition by @gante in #29662

- [FIX] Fix speech2test modeling tests by @ylacombe in #29672

- Revert "Fix wrong condition used in `filter_models`" by @ydshieh in #29682

- [docs] Spanish translation of attention.md by @aaronjimv in #29681

- CI / generate: batch size computation compatible with all models by @gante in #29671

- Fix `filter_models` by @ydshieh in #29710

- FIX [`bnb`] Make `unexpected_keys` optional by @younesbelkada in #29420

- Update the pipeline tutorial to include `gradio.Interface.from_pipeline` by @abidlabs in #29684

- Use logging.warning instead of warnings.warn in pipeline.call by @tokestermw in #29717

Significant community contributions

The following contributors have made significant changes to the library over the last release:

- @windsonsea
  - [i18n-zh] Translated torchscript.md into Chinese (#29234)
  - [i18n-zh] Translated task/asr.md into Chinese (#29233)
  - [i18n-zh] Translate fsdp.md into Chinese (#29305)
  - [i18n-zh] Sync source/zh/index.md (#29331)
  - [i18n-zh] Translate add_new_pipeline.md into Chinese (#29432)

- @hoangsvit
  - [i18n-vi] Translate README.md to Vietnamese (#29229)

- @EduardoPach
  - Fixed Deformable Detr typo when loading cuda kernels for MSDA (#29294)
  - Adding SegGPT (#27735)

- @RaymondLi0
  - Starcoder2 model - bis (#29215)

- @njackman-2344
  - [Docs] Spanish Translation -Torchscript md & Trainer md (#29310)
  - [docs] Spanish translate chat_templating.md & yml addition (#29559)

- @tanaymeh
  - Add Fill-in-the-middle training objective example - PyTorch (#27464)

- @Hvanderwilk
  - Update legacy Repository usage in various example files (#29085)

- @FoamoftheSea
  - Add PvT-v2 Model (#26812)

- @saurabhdash2512
  - Cohere Model Release (#29622)

v4.38.2

1 month ago

Fix backward compatibility issues with Llama and Gemma:

We mostly made sure that performance is not affected by the new change of paradigm with RoPE. The RoPE computation was fixed (it should always be done in float32), and the causal_mask dtype was set to bool to use less RAM.

YOLOS had a regression, and the Llama / T5 tokenizers emitted a warning for no apparent reason.

- FIX [Gemma] Fix bad rebase with transformers main (#29170)

- Improve _update_causal_mask performance (#29210)

- [T5 and Llama Tokenizer] remove warning (#29346)

- [Llama ROPE] Fix torch export but also slow downs in forward (#29198)

- RoPE loses precision for Llama / Gemma + Gemma logits.float() (#29285)

- Patch YOLOS and others (#29353)

- Use torch.bool instead of torch.int64 for non-persistant causal mask buffer (#29241)

v4.38.1

1 month ago

Fix eager attention in Gemma!

- [Gemma] Fix eager attention #29187 by @sanchit-gandhi

TLDR:

```diff
- attn_output = attn_output.reshape(bsz, q_len, self.hidden_size)
+ attn_output = attn_output.view(bsz, q_len, -1)
```
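One way to see why a hard-coded `hidden_size` can break is to count elements. The sketch below assumes Gemma-7B's configuration (hidden size 3072, 16 attention heads of dimension 256); these values are not given in these notes. It uses plain arithmetic rather than tensors.

```python
# Assumed Gemma-7B configuration values (not stated in the release notes).
hidden_size = 3072
num_heads = 16
head_dim = 256

bsz, q_len = 1, 5  # hypothetical batch size and query length

# Elements actually produced by attention: one head_dim vector per head.
attn_elements = bsz * q_len * num_heads * head_dim

# Elements the old reshape target (bsz, q_len, hidden_size) would require.
old_target_elements = bsz * q_len * hidden_size
old_reshape_is_valid = attn_elements == old_target_elements

# With -1, the last dimension is inferred from the element count instead.
inferred_last_dim = attn_elements // (bsz * q_len)

print(f"attention output elements: {attn_elements}")
print(f"old target elements:       {old_target_elements}")
print(f"old reshape valid:         {old_reshape_is_valid}")
print(f"inferred last dimension:   {inferred_last_dim}")
```

Under these assumptions the attention output is wider than `hidden_size`, so only the inferred dimension matches, and the following output projection maps it back to the hidden size.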

v4.38.0

1 month ago

New model additions

💎 Gemma 💎

Gemma is a new open-source language model series from Google AI that comes in 2B and 7B variants. The release includes pre-trained and instruction fine-tuned versions, and you can use them via `AutoModelForCausalLM`, `GemmaForCausalLM` or the `pipeline` interface!

Read more about it in the Gemma release blogpost: https://hf.co/blog/gemma

```python
import torch  # needed for torch.float16 below
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("google/gemma-2b")
model = AutoModelForCausalLM.from_pretrained("google/gemma-2b", device_map="auto", torch_dtype=torch.float16)

input_text = "Write me a poem about Machine Learning."
input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)
```

You can use the model with Flash Attention, SDPA, static cache and the quantization API for further optimizations!

- Flash Attention 2

```python
import torch  # needed for torch.float16 below
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("google/gemma-2b")
model = AutoModelForCausalLM.from_pretrained(
    "google/gemma-2b", device_map="auto", torch_dtype=torch.float16, attn_implementation="flash_attention_2"
)

input_text = "Write me a poem about Machine Learning."
input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)
```

- bitsandbytes-4bit

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("google/gemma-2b")
model = AutoModelForCausalLM.from_pretrained(
    "google/gemma-2b", device_map="auto", load_in_4bit=True
)

input_text = "Write me a poem about Machine Learning."
input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)
```

- Static Cache

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("google/gemma-2b")
model = AutoModelForCausalLM.from_pretrained(
    "google/gemma-2b", device_map="auto"
)

model.generation_config.cache_implementation = "static"

input_text = "Write me a poem about Machine Learning."
input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)
```

Depth Anything Model

The Depth Anything model was proposed in Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data by Lihe Yang, Bingyi Kang, Zilong Huang, Xiaogang Xu, Jiashi Feng, Hengshuang Zhao. Depth Anything is based on the DPT architecture, trained on ~62 million images, obtaining state-of-the-art results for both relative and absolute depth estimation.

- Add Depth Anything by @NielsRogge in #28654

Stable LM

StableLM 3B 4E1T was proposed in StableLM 3B 4E1T: Technical Report by Stability AI and is the first model in a series of multi-epoch pre-trained language models.

StableLM 3B 4E1T is a decoder-only base language model pre-trained on 1 trillion tokens of diverse English and code datasets for four epochs. The model architecture is transformer-based with partial Rotary Position Embeddings, SwiGLU activation, LayerNorm, etc.

The team also provides StableLM Zephyr 3B, an instruction fine-tuned version of the model that can be used for chat-based applications.

- Add `StableLM` by @jon-tow in #28810

⚡️ Static cache was introduced in the following PRs ⚡️

Static past key value cache allows `LlamaForCausalLM`'s forward pass to be compiled using `torch.compile`!

This means that CUDA graphs can be used for inference, which speeds up the decoding step by 4x!

A forward pass of Llama2 7B takes around 10.5 ms to run with this on an A100, which is equivalent to TGI performance! ⚡️

- [`Core generation`] Adds support for static KV cache by @ArthurZucker in #27931

- [`CLeanup`] Revert SDPA attention changes that got in the static kv cache PR by @ArthurZucker in #29027

- Fix static generation when compiling! by @ArthurZucker in #28937

- Static Cache: load models with MQA or GQA by @gante in #28975

- Fix symbolic_trace with kv cache by @fxmarty in #28724

⚠️ Support for generate is not included yet. This feature is experimental and subject to changes in subsequent releases.

```python
from transformers import AutoTokenizer, AutoModelForCausalLM, StaticCache
import torch
import os

# compilation triggers multiprocessing
os.environ["TOKENIZERS_PARALLELISM"] = "true"

tokenizer = AutoTokenizer.from_pretrained("meta-llama/Llama-2-7b-hf")
model = AutoModelForCausalLM.from_pretrained(
    "meta-llama/Llama-2-7b-hf",
    device_map="auto",
    torch_dtype=torch.float16
)

# set up the static cache in advance of using the model
model._setup_cache(StaticCache, max_batch_size=1, max_cache_len=128)

# trigger compilation!
compiled_model = torch.compile(model, mode="reduce-overhead", fullgraph=True)

# run the model as usual
input_text = "A few facts about the universe: "
input_ids = tokenizer(input_text, return_tensors="pt").to("cuda").input_ids
model_outputs = compiled_model(input_ids)
```

Quantization

🧼 HF Quantizer 🧼

HfQuantizer makes it easy for quantization method researchers and developers to add inference and / or quantization support in 🤗 transformers. If you are interested in adding the support for new methods, please refer to this documentation page: https://huggingface.co/docs/transformers/main/en/hf_quantizer

- `HfQuantizer` class for quantization-related stuff in `modeling_utils.py` by @poedator in #26610

- [`HfQuantizer`] Move it to "Developper guides" by @younesbelkada in #28768

- [`HFQuantizer`] Remove `check_packages_compatibility` logic by @younesbelkada in #28789

- [docs] HfQuantizer by @stevhliu in #28820

⚡️AQLM ⚡️

AQLM is a new quantization method that enables 2-bit precision with no performance degradation. Check out this demo about how to run Mixtral in 2-bit on a free-tier Google Colab instance: https://huggingface.co/posts/ybelkada/434200761252287

- AQLM quantizer support by @BlackSamorez in #28928

- Removed obsolete attribute setting for AQLM quantization. by @BlackSamorez in #29034

🧼 Moving canonical repositories 🧼

The canonical repositories on the Hugging Face Hub (models that did not have an organization, like bert-base-cased) have been moved under organizations.

You can find the entire list of models moved here: https://huggingface.co/collections/julien-c/canonical-models-65ae66e29d5b422218567567

Redirection has been set up so that your code continues working even if you continue calling the previous paths. We, however, still encourage you to update your code to use the new links so that it is entirely future proof.

- canonical repos moves by @julien-c in #28795

- Update all references to canonical models by @LysandreJik in #29001
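
As a small illustration of updating code to the new paths, the sketch below maps a couple of old canonical ids to their organization-scoped ids. The helper `migrate_model_id` is hypothetical, and the mapping is a tiny illustrative subset; the collection linked above is the reference for the full list.

```python
# Illustrative subset only; consult the canonical-models collection for the rest.
CANONICAL_TO_ORG = {
    "bert-base-cased": "google-bert/bert-base-cased",
    "gpt2": "openai-community/gpt2",
}


def migrate_model_id(model_id: str) -> str:
    """Return the organization-scoped id if known, else the id unchanged."""
    return CANONICAL_TO_ORG.get(model_id, model_id)


new_id = migrate_model_id("bert-base-cased")
untouched = migrate_model_id("my-org/my-model")
```

Because redirection is in place, old ids keep working; a mapping like this is only a convenience for updating configuration files in bulk.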

Flax Improvements 🚀

The Mistral model was added to the library in Flax.

- Flax mistral by @kiansierra in #26943

TensorFlow Improvements 🚀

With Keras 3 becoming the standard version of Keras in TensorFlow 2.16, we've made some internal changes to maintain compatibility. We now have full compatibility with TF 2.16 as long as the tf-keras compatibility package is installed. We've also taken the opportunity to do some cleanup - in particular, the objects like BatchEncoding that are returned by our tokenizers and processors can now be directly passed to Keras methods like model.fit(), which should simplify a lot of code and eliminate a long-standing source of annoyances.

- Add tf_keras imports to prepare for Keras 3 by @Rocketknight1 in #28588

- Wrap Keras methods to support BatchEncoding by @Rocketknight1 in #28734

- Fix Keras scheduler import so it works for older versions of Keras by @Rocketknight1 in #28895

Pre-Trained backbone weights 🚀

Enable loading in pretrained backbones in a new model, where all other weights are randomly initialized. Note: validation checks are still in place when creating a config. Passing in use_pretrained_backbone will raise an error. You can override by setting config.use_pretrained_backbone = True after creating a config. However, it is not yet guaranteed to be fully backwards compatible.

from transformers import MaskFormerConfig, MaskFormerModel

config = MaskFormerConfig(
    use_pretrained_backbone=False,
    backbone="microsoft/resnet-18"
)
config.use_pretrained_backbone = True

# Both models have resnet-18 backbone weights and all other weights randomly
# initialized
model_1 = MaskFormerModel(config)
model_2 = MaskFormerModel(config)

- Enable instantiating model with pretrained backbone weights by @amyeroberts in #28214

Introduce a helper function load_backbone to load a backbone from a backbone's model config e.g. ResNetConfig, or from a model config which contains backbone information. This enables cleaner modeling files and crossloading between timm and transformers backbones.

from transformers import ResNetConfig, MaskFormerConfig
from transformers.utils.backbone_utils import load_backbone

# Resnet defines the backbone model to load
config = ResNetConfig()
backbone = load_backbone(config)

# Maskformer config defines a model which uses a resnet backbone
config = MaskFormerConfig(use_timm_backbone=True, backbone="resnet18")
backbone = load_backbone(config)

config = MaskFormerConfig(backbone_config=ResNetConfig())
backbone = load_backbone(config)

- [Backbone] Use load_backbone instead of AutoBackbone.from_config by @amyeroberts in #28661

- Backbone kwargs in config by @amyeroberts in #28784

Added API references, a list of supported backbones, updated examples and clarifications, and moved information to better reflect usage and docs.

- [docs] Backbone by @stevhliu in #28739

- Improve Backbone API docs by @merveenoyan in #28666

Image Processor work 🚀

- Raise unused kwargs image processor by @molbap in #29063

- Abstract image processor arg checks by @molbap in #28843

Bugfixes and improvements 🚀

- Fix id2label assignment in run_classification.py by @jheitmann in #28590

- Add missing key to TFLayoutLM signature by @Rocketknight1 in #28640

- Avoid root logger's level being changed by @ydshieh in #28638

- Add config tip to custom model docs by @Rocketknight1 in #28601

- Fix lr_scheduler in no_trainer training scripts by @bofenghuang in #27872

- [Llava] Update convert_llava_weights_to_hf.py script by @isaac-vidas in #28617

- [GPTNeoX] Fix GPTNeoX + Flash Attention 2 issue by @younesbelkada in #28645

- Update image_processing_deformable_detr.py by @sounakdey in #28561

- [SigLIP] Only import tokenizer if sentencepiece available by @amyeroberts in #28636

- Fix phi model doc checkpoint by @amyeroberts in #28581

- get default device through PartialState().default_device as it has been officially released by @statelesshz in #27256

- integrations: fix DVCLiveCallback model logging by @dberenbaum in #28653

- Enable safetensors conversion from PyTorch to other frameworks without the torch requirement by @LysandreJik in #27599

- tensor_size - fix copy/paste error msg typo by @scruel in #28660

- Fix windows err with checkpoint race conditions by @muellerzr in #28637

- add dataloader prefetch factor in training args and trainer by @qmeeus in #28498

- Support single token decode for CodeGenTokenizer by @cmathw in #28628

- Remove deprecated eager_serving fn by @Rocketknight1 in #28665

- fix a hidden bug of GenerationConfig, now the generation_config.json can be loaded successfully by @ParadoxZW in #28604

- Update README_es.md by @vladydev3 in #28612

- Exclude the load balancing loss of padding tokens in Mixtral-8x7B by @khaimt in #28517

- Use save_safetensor to disable safe serialization for XLA by @jeffhataws in #28669

- Add back in generation types by @amyeroberts in #28681

- [docs] DeepSpeed by @stevhliu in #28542

- Improved type hinting for all attention parameters by @nakranivaibhav in #28479

- improve efficient training on CPU documentation by @faaany in #28646

- [docs] Fix doc format by @stevhliu in #28684

- [chore] Add missing space in warning by @tomaarsen in #28695

- Update question_answering.md by @yusyel in #28694

- [Vilt] align input and model dtype in the ViltPatchEmbeddings forward pass by @faaany in #28633

- [docs] Improve visualization for vertical parallelism by @petergtz in #28583

- Don't fail when LocalEntryNotFoundError during processor_config.json loading by @ydshieh in #28709

- Fix duplicate & unnecessary flash attention warnings by @fxmarty in #28557

- support PeftMixedModel signature inspect by @Facico in #28321

- fix: corrected misleading log message in save_pretrained function by @mturetskii in #28699

- [docs] Update preprocessing.md by @velaia in #28719

- Initialize _tqdm_active with hf_hub_utils.are_progress_bars_disabled(… by @ShukantPal in #28717

- Fix weights_only by @ydshieh in #28725

- Stop confusing the TF compiler with ModelOutput objects by @Rocketknight1 in #28712

- fix: suppress GatedRepoError to use cache file (fix #28558). by @scruel in #28566

- Unpin pydantic by @ydshieh in #28728

- [docs] Fix datasets in guides by @stevhliu in #28715

- [Flax] Update no init test for Flax v0.7.1 by @sanchit-gandhi in #28735

- Falcon: removed unused function by @gante in #28605

- Generate: deprecate old src imports by @gante in #28607

- [Siglip] protect from imports if sentencepiece not installed by @amyeroberts in #28737

- Add serialization logic to pytree types by @angelayi in #27871

- Fix DepthEstimationPipeline's docstring by @ydshieh in #28733

- Fix input data file extension in examples by @khipp in #28741

- [Docs] Fix Typo in English & Japanese CLIP Model Documentation (TMBD -> TMDB) by @Vinyzu in #28751

- PatchtTST and PatchTSMixer fixes by @wgifford in #28083

- Enable Gradient Checkpointing in Deformable DETR by @FoamoftheSea in #28686

- small doc update for CamemBERT by @julien-c in #28644

- Pin pytest version <8.0.0 by @amyeroberts in #28758

- Mark test_constrained_beam_search_generate as flaky by @amyeroberts in #28757

- Fix typo of Block. by @xkszltl in #28727

- [Whisper] Make tokenizer normalization public by @sanchit-gandhi in #28136

- Support saving only PEFT adapter in checkpoints when using PEFT + FSDP by @AjayP13 in #28297

- Add French translation: french README.md by @ThibaultLengagne in #28696

- Don't allow passing load_in_8bit and load_in_4bit at the same time by @osanseviero in #28266

- Move CLIP _no_split_modules to CLIPPreTrainedModel by @lz1oceani in #27841

- Use Conv1d for TDNN by @gau-nernst in #25728

- Fix transformers.utils.fx compatibility with torch<2.0 by @fxmarty in #28774

- Further pin pytest version (in a temporary way) by @ydshieh in #28780

- Task-specific pipeline init args by @amyeroberts in #28439

- Pin Torch to <2.2.0 by @Rocketknight1 in #28785

- [bnb] Fix bnb slow tests by @younesbelkada in #28788

- Prevent MLflow exception from disrupting training by @codiceSpaghetti in #28779

- don't initialize the output embeddings if we're going to tie them to input embeddings by @tom-p-reichel in #28192

- [Whisper] Refactor forced_decoder_ids & prompt ids by @patrickvonplaten in #28687

- Resolve DeepSpeed cannot resume training with PeftModel by @lh0x00 in #28746

- Wrap Keras methods to support BatchEncoding by @Rocketknight1 in #28734

- DeepSpeed: hardcode torch.arange dtype on float usage to avoid incorrect initialization by @gante in #28760

- Add artifact name in job step to maintain job / artifact correspondence by @ydshieh in #28682

- Split daily CI using 2 level matrix by @ydshieh in #28773

- [docs] Correct the statement in the docstirng of compute_transition_scores in generation/utils.py by @Ki-Seki in #28786

- Adding [T5/MT5/UMT5]ForTokenClassification by @hackyon in #28443

- Make is_torch_bf16_available_on_device more strict by @ydshieh in #28796

- Add tip on setting tokenizer attributes by @Rocketknight1 in #28764

- enable graident checkpointing in DetaObjectDetection and add tests in Swin/Donut_Swin by @SangbumChoi in #28615

- [docs] fix some bugs about parameter description by @zspo in #28806

- Add models from deit by @rajveer43 in #28302

- [Docs] Fix spelling and grammar mistakes by @khipp in #28825

- Explicitly check if token ID's are None in TFBertTokenizer constructor by @skumar951 in #28824

- Add missing None check for hf_quantizer by @jganitkevitch in #28804

- Fix issues caused by natten by @ydshieh in #28834

- fix / skip (for now) some tests before switch to torch 2.2 by @ydshieh in #28838

- Use -v for pytest on CircleCI by @ydshieh in #28840

- Reduce GPU memory usage when using FSDP+PEFT by @pacman100 in #28830

- Mark test_encoder_decoder_model_generate for vision_encoder_deocder as flaky by @amyeroberts in #28842

- Support custom scheduler in deepspeed training by @VeryLazyBoy in #26831

- [Docs] Fix bad doc: replace save with logging by @chenzizhao in #28855

- Ability to override clean_code_for_run by @w4ffl35 in #28783

- [WIP] Hard error when ignoring tensors. by @Narsil in #27484

- [Doc] update contribution guidelines by @ArthurZucker in #28858

- Correct wav2vec2-bert inputs_to_logits_ratio by @ylacombe in #28821

- Image Feature Extraction pipeline by @amyeroberts in #28216

- ClearMLCallback enhancements: support multiple runs and handle logging better by @eugen-ajechiloae-clearml in #28559

- Do not use mtime for checkpoint rotation. by @xkszltl in #28862

- Adds LlamaForQuestionAnswering class in modeling_llama.py along with AutoModel Support by @nakranivaibhav in #28777

- [Docs] Update project names and links in awesome-transformers by @khipp in #28878

- Fix LongT5ForConditionalGeneration initialization of lm_head by @eranhirs in #28873

- Raise error when using save_only_model with load_best_model_at_end for DeepSpeed/FSDP by @pacman100 in #28866

- Fix FastSpeech2ConformerModelTest and skip it on CPU by @ydshieh in #28888

- Revert "[WIP] Hard error when ignoring tensors." by @ydshieh in #28898

- unpin torch by @ydshieh in #28892

- Explicit server error on gated model by @Wauplin in #28894

- [Docs] Fix backticks in inline code and documentation links by @khipp in #28875

- Hotfix - make torchaudio get the correct version in torch_and_flax_job by @ydshieh in #28899

- [Docs] Add missing language options and fix broken links by @khipp in #28852

- fix: Fixed the documentation for logging_first_step by removing "evaluate" by @Sai-Suraj-27 in #28884

- fix Starcoder FA2 implementation by @pacman100 in #28891

- Fix Keras scheduler import so it works for older versions of Keras by @Rocketknight1 in #28895

- ⚠️ Raise Exception when trying to generate 0 tokens ⚠️ by @danielkorat in #28621

- Update the cache number by @ydshieh in #28905

- Add npu device for pipeline by @statelesshz in #28885

- [Docs] Fix placement of tilde character by @khipp in #28913

- [Docs] Revert translation of '@slow' decorator by @khipp in #28912

- Fix utf-8 yaml load for marian conversion to pytorch in Windows by @SystemPanic in #28618

- Remove dead TF loading code by @Rocketknight1 in #28926

- fix: torch.int32 instead of torch.torch.int32 by @vodkaslime in #28883

- pass kwargs in stopping criteria list by @zucchini-nlp in #28927

- Support batched input for decoder start ids by @zucchini-nlp in #28887

- [Docs] Fix broken links and syntax issues by @khipp in #28918

- Fix max_position_embeddings default value for llama2 to 4096 #28241 by @karl-hajjar in #28754

- Fix a wrong link to CONTRIBUTING.md section in PR template by @B-Step62 in #28941

- Fix type annotations on neftune_noise_alpha and fsdp_config TrainingArguments parameters by @peblair in #28942

- [i18n-de] Translate README.md to German by @khipp in #28933

- [Nougat] Fix pipeline by @NielsRogge in #28242

- [Docs] Update README and default pipelines by @NielsRogge in #28864

- Convert torch_dtype as str to actual torch data type (i.e. "float16" …to torch.float16) by @KossaiSbai in #28208

- [pipelines] updated docstring with vqa alias by @cmahmut in #28951

- Tests: tag test_save_load_fast_init_from_base as flaky by @gante in #28930

- Updated requirements for image-classification samples: datasets>=2.14.0 by @alekseyfa in #28974

- Always initialize tied output_embeddings if it has a bias term by @hackyon in #28947

- Clean up staging tmp checkpoint directory by @woshiyyya in #28848

- [Docs] Add language identifiers to fenced code blocks by @khipp in #28955

- [Docs] Add video section by @NielsRogge in #28958

- [i18n-de] Translate CONTRIBUTING.md to German by @khipp in #28954

- [NllbTokenizer] refactor with added tokens decoder by @ArthurZucker in #27717

- Add sudachi_projection option to BertJapaneseTokenizer by @hiroshi-matsuda-rit in #28503

- Update configuration_llama.py: fixed broken link by @AdityaKane2001 in #28946

- [DETR] Update the processing to adapt masks & bboxes to reflect padding by @amyeroberts in #28363

- ENH: Do not pass warning message in case quantization_config is in config but not passed as an arg by @younesbelkada in #28988

- ENH [AutoQuantizer]: enhance trainer + not supported quant methods by @younesbelkada in #28991

- Add SiglipForImageClassification and CLIPForImageClassification by @NielsRogge in #28952

- [Doc] Fix docbuilder - make BackboneMixin and BackboneConfigMixin importable from utils. by @amyeroberts in #29002

- Set the dataset format used by test_trainer to float32 by @statelesshz in #28920

- Introduce AcceleratorConfig dataclass by @muellerzr in #28664

- Fix flaky test vision encoder-decoder generate by @zucchini-nlp in #28923

- Mask Generation Task Guide by @merveenoyan in #28897

- Add tie_weights() to LM heads and set bias in set_output_embeddings() by @hackyon in #28948

- [TPU] Support PyTorch/XLA FSDP via SPMD by @alanwaketan in #28949

- FIX [Trainer / tags]: Fix trainer + tags when users do not pass "tags" to trainer.push_to_hub() by @younesbelkada in #29009

- Add cuda_custom_kernel in DETA by @SangbumChoi in #28989

- DeformableDetrModel support fp16 by @DonggeunYu in #29013

- Fix copies between DETR and DETA by @amyeroberts in #29037

- FIX: Fix error with logger.warning + inline with recent refactor by @younesbelkada in #29039

- Patch to skip failing test_save_load_low_cpu_mem_usage tests by @amyeroberts in #29043

- Fix a tiny typo in generation/utils.py::GenerateEncoderDecoderOutput's docstring by @sadra-barikbin in #29044

- add test marker to run all tests with @require_bitsandbytes by @Titus-von-Koeller in #28278

- Update important model list by @LysandreJik in #29019

- Fix max_length criteria when using inputs_embeds by @zucchini-nlp in #28994

- Support : Leverage Accelerate for object detection/segmentation models by @Tanmaypatil123 in #28312

- fix num_assistant_tokens with heuristic schedule by @jmamou in #28759

- fix failing trainer ds tests by @pacman100 in #29057

- auto_find_batch_size isn't yet supported with DeepSpeed/FSDP. Raise error accrodingly. by @pacman100 in #29058

- Honor trust_remote_code for custom tokenizers by @rl337 in #28854

- Feature: Option to set the tracking URI for MLflowCallback. by @seanswyi in #29032

- Fix trainer test wrt DeepSpeed + auto_find_bs by @muellerzr in #29061

- Add chat support to text generation pipeline by @Rocketknight1 in #28945

- [Docs] Spanish translation of task_summary.md by @aaronjimv in #28844

- [Awq] Add peft support for AWQ by @younesbelkada in #28987

- FIX [bnb/tests]: Fix currently failing bnb tests by @younesbelkada in #29092

- fix the post-processing link by @davies-w in #29091

- Fix the bert-base-cased tokenizer configuration test by @LysandreJik in #29105

- Fix a typo in examples/pytorch/text-classification/run_classification.py by @Ja1Zhou in #29072

- change version by @ArthurZucker in #29097

- [Docs] Add resources by @NielsRogge in #28705

- ENH: added new output_logits option to generate function by @mbaak in #28667

- Bnb test fix for different hardwares by @Titus-von-Koeller in #29066

- Fix two tiny typos in pipelines/base.py::Pipeline::_sanitize_parameters()'s docstring by @sadra-barikbin in #29102

- storing & logging gradient norm in trainer by @shijie-wu in #27326

- Fixed nll with label_smoothing to just nll by @nileshkokane01 in #28708

- [gradient_checkpointing] default to use it for torch 2.3 by @ArthurZucker in #28538

- Move misplaced line by @kno10 in #29117

- FEAT [Trainer/bnb]: Add RMSProp from bitsandbytes to HF Trainer by @younesbelkada in #29082

- Abstract image processor arg checks. by @molbap in #28843

- FIX [bnb/tests] Propagate the changes from #29092 to 4-bit tests by @younesbelkada in #29122

- Llama: fix batched generation by @gante in #29109

- Generate: unset GenerationConfig parameters do not raise warning by @gante in #29119

- [cuda kernels] only compile them when initializing by @ArthurZucker in #29133

- FIX [PEFT/Trainer] Handle better peft + quantized compiled models by @younesbelkada in #29055

- [Core tokenization] add_dummy_prefix_space option to help with latest issues by @ArthurZucker in #28010

- Revert low cpu mem tie weights by @amyeroberts in #29135

- Add support for fine-tuning CLIP-like models using contrastive-image-text example by @tjs-intel in #29070

- Save (circleci) cache at the end of a job by @ydshieh in #29141

- [Phi] Add support for sdpa by @hackyon in #29108

- Generate: missing generation config eos token setting in encoder-decoder tests by @gante in #29146

- Added image_captioning version in es and included in toctree file by @gisturiz in #29104

- Fix drop path being ignored in DINOv2 by @fepegar in #29147

- [pipeline] Add pool option to image feature extraction pipeline by @amyeroberts in #28985

Significant community contributions

The following contributors have made significant changes to the library over the last release:

- @nakranivaibhav

- Improved type hinting for all attention parameters (#28479)

- Adds LlamaForQuestionAnswering class in modeling_llama.py along with AutoModel Support (#28777)

- @khipp

- Fix input data file extension in examples (#28741)

- [Docs] Fix spelling and grammar mistakes (#28825)

- [Docs] Update project names and links in awesome-transformers (#28878)

- [Docs] Fix backticks in inline code and documentation links (#28875)

- [Docs] Add missing language options and fix broken links (#28852)

- [Docs] Fix placement of tilde character (#28913)

- [Docs] Revert translation of '@slow' decorator (#28912)

- [Docs] Fix broken links and syntax issues (#28918)

- [i18n-de] Translate README.md to German (#28933)

- [Docs] Add language identifiers to fenced code blocks (#28955)

- [i18n-de] Translate CONTRIBUTING.md to German (#28954)

- @ThibaultLengagne

- Add French translation: french README.md (#28696)

- @poedator

- HfQuantizer class for quantization-related stuff in modeling_utils.py (#26610)

- @kiansierra

- Flax mistral (#26943)

- @hackyon

- Adding [T5/MT5/UMT5]ForTokenClassification (#28443)

- Always initialize tied output_embeddings if it has a bias term (#28947)

- Add tie_weights() to LM heads and set bias in set_output_embeddings() (#28948)

- [Phi] Add support for sdpa (#29108)

- @SangbumChoi

- enable graident checkpointing in DetaObjectDetection and add tests in Swin/Donut_Swin (#28615)

- Add cuda_custom_kernel in DETA (#28989)

- @rajveer43

- Add models from deit (#28302)

- @jon-tow

- Add StableLM (#28810)

v4.37.2

2 months ago

Selection of fixes

- Protecting the imports for SigLIP's tokenizer if sentencepiece isn't installed

- Fix permissions issue on windows machines when using trainer in multi-node setup

- Allow disabling safe serialization when using Trainer. Needed for Neuron SDK

- Fix error when loading processor from cache

- torch < 1.13 compatible torch.load

Commits

- [Siglip] protect from imports if sentencepiece not installed (#28737)

- Fix weights_only (#28725)

- Enable safetensors conversion from PyTorch to other frameworks without the torch requirement (#27599)

- Don't fail when LocalEntryNotFoundError during processor_config.json loading (#28709)

- Use save_safetensor to disable safe serialization for XLA (#28669)

- Fix windows err with checkpoint race conditions (#28637)

- [SigLIP] Only import tokenizer if sentencepiece available (#28636)

v4.37.1

2 months ago

A patch release to resolve import errors from removed custom types in generation utils

- Add back in generation types #28681

v4.37.0

2 months ago

Model releases

Qwen2

Qwen2 is the new model series of large language models from the Qwen team. Previously, the Qwen series was released, including Qwen-72B, Qwen-1.8B, Qwen-VL, Qwen-Audio, etc.

Qwen2 is a language model series including decoder language models of different model sizes. For each size, we release the base language model and the aligned chat model. It is based on the Transformer architecture with SwiGLU activation, attention QKV bias, group query attention, mixture of sliding window attention and full attention, etc. Additionally, we have an improved tokenizer adaptive to multiple natural languages and code.

- Add qwen2 by @JustinLin610 in #28436

Phi-2

Phi-2 is a transformer language model trained by Microsoft with exceptionally strong performance for its small size of 2.7 billion parameters. It was previously available as a custom code model, but has now been fully integrated into transformers.

- [Phi2] Add support for phi2 models by @susnato in #28211

- [Phi] Extend implementation to use GQA/MQA. by @gugarosa in #28163

- update docs to add the phi-2 example by @susnato in #28392

- Fixes default value of softmax_scale in PhiFlashAttention2. by @gugarosa in #28537

SigLIP

The SigLIP model was proposed in Sigmoid Loss for Language Image Pre-Training by Xiaohua Zhai, Basil Mustafa, Alexander Kolesnikov, Lucas Beyer. SigLIP proposes to replace the loss function used in CLIP by a simple pairwise sigmoid loss. This results in better performance in terms of zero-shot classification accuracy on ImageNet.

- Add SigLIP by @NielsRogge in #26522

- [SigLIP] Don't pad by default by @NielsRogge in #28578

ViP-LLaVA

The VipLlava model was proposed in Making Large Multimodal Models Understand Arbitrary Visual Prompts by Mu Cai, Haotian Liu, Siva Karthik Mustikovela, Gregory P. Meyer, Yuning Chai, Dennis Park, Yong Jae Lee.

VipLlava enhances the training protocol of Llava by marking images and interacting with the model using natural cues like a “red bounding box” or “pointed arrow” during training.

- Adds VIP-llava to transformers by @younesbelkada in #27932

- Fix Vip-llava docs by @younesbelkada in #28085

FastSpeech2Conformer

The FastSpeech2Conformer model was proposed with the paper Recent Developments On Espnet Toolkit Boosted By Conformer by Pengcheng Guo, Florian Boyer, Xuankai Chang, Tomoki Hayashi, Yosuke Higuchi, Hirofumi Inaguma, Naoyuki Kamo, Chenda Li, Daniel Garcia-Romero, Jiatong Shi, Jing Shi, Shinji Watanabe, Kun Wei, Wangyou Zhang, and Yuekai Zhang.

FastSpeech 2 is a non-autoregressive model for text-to-speech (TTS) synthesis, which builds upon FastSpeech, showing improvements in training speed, inference speed and voice quality. It consists of a variance adapter; duration, energy and pitch predictors; and a waveform and mel-spectrogram decoder.

- Add FastSpeech2Conformer by @connor-henderson in #23439

Wav2Vec2-BERT

The Wav2Vec2-BERT model was proposed in Seamless: Multilingual Expressive and Streaming Speech Translation by the Seamless Communication team from Meta AI.

This model was pre-trained on 4.5M hours of unlabeled audio data covering more than 143 languages. It requires finetuning to be used for downstream tasks such as Automatic Speech Recognition (ASR), or Audio Classification.

- Add new meta w2v2-conformer BERT-like model by @ylacombe in #28165

- Add w2v2bert to pipeline by @ylacombe in #28585

4-bit serialization

Enables saving and loading transformers models in 4-bit formats - you can now push bitsandbytes 4-bit weights to the Hugging Face Hub. To save 4-bit models and push them to the Hub, install the latest bitsandbytes package from PyPI (pip install -U bitsandbytes), load your model in 4-bit precision and call save_pretrained / push_to_hub. An example repo here

from transformers import AutoModelForCausalLM, AutoTokenizer

model_id = "facebook/opt-125m"

model = AutoModelForCausalLM.from_pretrained(model_id, load_in_4bit=True)
model.push_to_hub("ybelkada/opt-125m-bnb-4bit")

- [bnb] Let's make serialization of 4bit models possible by @poedator in #26037

- [Docs] Add 4-bit serialization docs by @younesbelkada in #28182

4D Attention mask

Enable passing in 4D attention masks to models that support it. This is useful for reducing memory footprint of certain generation tasks.

- 4D attention_mask support by @poedator in #27539
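
One situation where a 4D mask is useful is packing several short sequences into a single row while keeping them from attending to each other. The sketch below builds such a mask with nested lists, assuming a (batch, 1, query_length, key_length) layout with 1 meaning "may attend"; both the layout and the 0/1 convention are assumptions here, and the helper `packed_causal_mask` is hypothetical.

```python
def packed_causal_mask(segment_lengths):
    """Build a (1, 1, total, total) 0/1 mask for sequences packed into one row."""
    # Label every position with the index of the sequence it belongs to.
    segment_ids = []
    for seg, length in enumerate(segment_lengths):
        segment_ids.extend([seg] * length)
    total = len(segment_ids)
    # A query may attend to a key in the same sequence at the same or an earlier position.
    mask_2d = [
        [1 if segment_ids[q] == segment_ids[k] and k <= q else 0 for k in range(total)]
        for q in range(total)
    ]
    return [[mask_2d]]  # add batch and head dimensions


mask = packed_causal_mask([2, 3])
rows = mask[0][0]
```

A 2D mask of shape (batch, key_length) can only say which keys are padding; the extra query dimension is what lets each position have its own set of visible keys.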

Improved quantization support

Ability to customise which modules are quantized and which are not.

- [Awq] Enable the possibility to skip quantization for some target modules by @younesbelkada in #27950

- add modules_in_block_to_quantize arg in GPTQconfig by @SunMarc in #27956

Added fused modules support

- [docs] Fused AWQ modules by @stevhliu in #27896

- [Awq] Add llava fused modules support by @younesbelkada in #28239

- [Mixtral/Awq] Add mixtral fused modules for Awq by @younesbelkada in #28240

SDPA Support for LLaVa, Mixtral, Mistral

- Fix SDPA correctness following torch==2.1.2 regression by @fxmarty in #27973

- [Llava/Vip-Llava] Add SDPA into llava by @younesbelkada in #28107

- [Mixtral&Mistral] Add support for sdpa by @ArthurZucker in #28133

- [SDPA] Make sure attn mask creation is always done on CPU by @patrickvonplaten in #28400

- Fix SDPA tests by @fxmarty in #28552

Whisper: Batched state-of-the-art long-form transcription

All decoding strategies (temperature fallback, compression/log-prob/no-speech threshold, ...) of OpenAI's long-form transcription (see: https://github.com/openai/whisper or section 4.5 in paper) have been added. Contrary to https://github.com/openai/whisper, Transformers long-form transcription is fully compatible with pure FP16 and Batching!

For more information see: https://github.com/huggingface/transformers/pull/27658.

- [Whisper] Finalize batched SOTA long-form generation by @patrickvonplaten in #27658
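
The temperature fallback and threshold checks mentioned above can be sketched as a retry loop: decode at a low temperature, and retry at a higher one if the text looks too repetitive (high compression ratio) or too unlikely (low average log-probability). This is a simplified illustration, not the Transformers implementation; `decode_fn` is a hypothetical callable, and the default thresholds and temperature schedule are assumed values modelled on those commonly cited for the OpenAI reference implementation.

```python
import zlib


def compression_ratio(text: str) -> float:
    """Ratio of raw to zlib-compressed size; repetitive text scores high."""
    data = text.encode("utf-8")
    return len(data) / len(zlib.compress(data))


def decode_with_fallback(
    decode_fn,
    temperatures=(0.0, 0.2, 0.4, 0.6, 0.8, 1.0),  # assumed schedule
    compression_ratio_threshold=2.4,  # assumed default
    logprob_threshold=-1.0,  # assumed default
):
    """Retry decoding at higher temperatures until the quality checks pass.

    decode_fn is a hypothetical callable: temperature -> (text, avg_logprob).
    """
    result = None
    for temperature in temperatures:
        text, avg_logprob = decode_fn(temperature)
        result = (text, avg_logprob, temperature)
        too_repetitive = compression_ratio(text) > compression_ratio_threshold
        too_unlikely = avg_logprob < logprob_threshold
        if not (too_repetitive or too_unlikely):
            break
    return result


def fake_decode(temperature):
    """Stand-in decoder: loops at temperature 0, produces normal text otherwise."""
    if temperature == 0.0:
        return ("la " * 50, -0.3)
    return ("the quick brown fox jumps over the lazy dog", -0.4)


result = decode_with_fallback(fake_decode)
```

If every temperature fails the checks, the loop returns the last attempt; the no-speech threshold is omitted here for brevity.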

Generation: assisted generation upgrades, speculative decoding, and ngram speculation

Assisted generation was reworked to accept arbitrary sources of candidate sequences. This enabled us to smoothly integrate ngram speculation, and opens the door for new candidate generation methods. Additionally, we've added the speculative decoding strategy on top of assisted generation: when you call assisted generation with an assistant model and do_sample=True, you'll benefit from the faster speculative decoding sampling 🏎️💨

- Generate: assisted_decoding now accepts arbitrary candidate generators by @gante in #27751

- Generate: assisted decoding now uses generate for the assistant by @gante in #28031

- Generate: speculative decoding by @gante in #27979

- Generate: fix speculative decoding by @gante in #28166

- Adding Prompt lookup decoding by @apoorvumang in #27775

- Fix _speculative_sampling implementation by @ofirzaf in #28508
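
The idea behind ngram speculation (prompt lookup decoding) can be sketched in plain Python: look for an earlier occurrence of the most recent n-gram, propose the tokens that followed it as candidates, and keep only the prefix the main model agrees with. This is a minimal illustration under stated assumptions, not the library's code; both function names are hypothetical, `verify_next_token` stands in for the main model, and the verification here is greedy and token-by-token, whereas a real implementation would check all candidates in one forward pass.

```python
def prompt_lookup_candidates(tokens, ngram_size=2, num_candidates=3):
    """Propose draft tokens by matching the trailing n-gram earlier in `tokens`."""
    if len(tokens) < ngram_size:
        return []
    tail = tokens[-ngram_size:]
    # Search from the most recent match to the oldest, skipping the tail itself.
    for start in range(len(tokens) - ngram_size - 1, -1, -1):
        if tokens[start:start + ngram_size] == tail:
            follow = tokens[start + ngram_size:start + ngram_size + num_candidates]
            if follow:
                return follow
    return []


def accept_candidates(candidates, verify_next_token, context):
    """Keep candidates for as long as they match the main model's greedy choice.

    verify_next_token is a hypothetical callable: token list -> next token.
    """
    accepted = []
    for token in candidates:
        if verify_next_token(context + accepted) != token:
            break
        accepted.append(token)
    return accepted


def toy_model(context):
    """Stand-in for the main model: a fixed next-token rule."""
    return {2: 3, 3: 4}.get(context[-1], 9)


tokens = [1, 2, 3, 4, 5, 1, 2]
candidates = prompt_lookup_candidates(tokens)
accepted = accept_candidates(candidates, toy_model, tokens)
```

Rejected candidates cost only the verification work, so the output matches what the main model would have produced on its own under greedy decoding.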

torch.load pickle protection

Adding pickle protection via weights_only=True in the torch.load calls.

- make torch.load a bit safer by @julien-c in #27282
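
To illustrate why restricting what a pickle may load matters, the sketch below uses only the standard library: a custom `Unpickler` that allows a short list of harmless built-in types and rejects everything else. It shows the general idea behind restricted unpickling and is not PyTorch's implementation of `weights_only=True`; the allow-list and the names `RestrictedUnpickler`, `restricted_loads` and `Malicious` are illustrative assumptions.

```python
import builtins
import io
import pickle

# Assumed allow-list for this sketch: plain container and scalar types only.
SAFE_BUILTINS = {"list", "dict", "set", "tuple", "int", "float", "str"}


class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Called whenever the pickle stream references a global (class or function).
        if module == "builtins" and name in SAFE_BUILTINS:
            return getattr(builtins, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(data: bytes):
    """Like pickle.loads, but refuses globals outside the allow-list."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


class Malicious:
    def __reduce__(self):
        # On a plain pickle.loads this would call eval with attacker-chosen input.
        return (eval, ("1 + 1",))


safe = restricted_loads(pickle.dumps({"weights": [1.0, 2.0]}))

try:
    restricted_loads(pickle.dumps(Malicious()))
    blocked = False
except pickle.UnpicklingError:
    blocked = True
```

Plain data round-trips unchanged, while the payload that references `eval` is rejected before anything is executed.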

Build methods for TensorFlow Models

Unlike PyTorch, TensorFlow models build their weights "lazily" after model initialization, using the shape of their inputs to figure out what their weight shapes should be. We previously needed a full forward pass through TF models to ensure that all layers received an input they could use to build their weights, but with this change we now have proper build() methods that can correctly infer shapes and build model weights. This avoids a whole range of potential issues, as well as significantly accelerating model load times.

- Proper build() methods for TF by @Rocketknight1 in #27794

- Replace build() with build_in_name_scope() for some TF tests by @Rocketknight1 in #28046

- More TF fixes by @Rocketknight1 in #28081

- Even more TF test fixes by @Rocketknight1 in #28146

Remove support for torch 1.10

The last version to support PyTorch 1.10 was 4.36.x. As it has been more than 2 years, and we're looking forward to using features available in PyTorch 1.11 and up, we do not support PyTorch 1.10 for v4.37 (i.e. we don't run the tests against torch 1.10).

- Byebye torch 1.10 by @ydshieh in #28207

Model tagging

You can now add custom tags to your model before pushing it to the Hub! This enables you to filter models that contain that tag on the Hub with a simple URL filter. For example, to filter models that have the trl tag, you can use: https://huggingface.co/models?other=trl&sort=created

- [core/ FEAT] Add the possibility to push custom tags using PreTrainedModel itself by @younesbelkada in #28405

e.g.

from transformers import AutoModelForCausalLM

model_name = "HuggingFaceM4/tiny-random-LlamaForCausalLM"
model = AutoModelForCausalLM.from_pretrained(model_name)

model.add_model_tags(["tag-test"])
model.push_to_hub("llama-tagged")
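
The URL filter mentioned above can be built programmatically. The sketch below only assembles the query string shown in the trl example, using the standard library; the helper name `hub_tag_filter_url` is hypothetical, and the `other` and `sort` parameters are taken from that example URL rather than from a documented API.

```python
from urllib.parse import urlencode


def hub_tag_filter_url(tag: str, sort: str = "created") -> str:
    """Build a Hub model-listing URL filtered by a custom tag."""
    query = urlencode({"other": tag, "sort": sort})
    return f"https://huggingface.co/models?{query}"


url = hub_tag_filter_url("trl")
```

Using `urlencode` keeps tags containing spaces or special characters safely escaped.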

Bugfixes and improvements

- Fix PatchTSMixer Docstrings by @vijaye12 in #27943

- use logger.warning_once to avoid massive outputs by @ranchlai in #27428

- Docs for AutoBackbone & Backbone by @merveenoyan in #27456

- Fix test for auto_find_batch_size on multi-GPU by @muellerzr in #27947

- Update import message by @NielsRogge in #27946

- Fix parameter count in readme for mixtral 45b by @CyberTimon in #27945

- In PreTrainedTokenizerBase add missing word in error message by @petergtz in #27949

- Fix AMD scheduled CI not triggered by @ydshieh in #27951

- Add deepspeed test to amd scheduled CI by @echarlaix in #27633

- Fix a couple of typos and add an illustrative test by @rjenc29 in #26941

- fix bug in mask2former: cost matrix is infeasible by @xuchenhao001 in #27897

- Fix for stochastic depth decay rule in the TimeSformer implementation by @atawari in #27875

- fix no sequence length models error by @AdamLouly in #27522

- [Mixtral] Change mistral op order by @younesbelkada in #27955

- Update bounding box format everywhere by @NielsRogge in #27944

- Support PeftModel signature inspect by @dancingpipi in #27865

- fixed typos (issue 27919) by @asusevski in #27920

- Hot-fix-mixstral-loss by @ArthurZucker in #27948

- Fix link in README.md of Image Captioning by @saswatmeher in #27969

- Better key error for AutoConfig by @Rocketknight1 in #27976

- [doc] fix typo by @stas00 in #27981

- fix typo in dvclive callback by @dberenbaum in #27983

- [Tokenizer Serialization] Fix the broken serialisation by @ArthurZucker in #27099

- [Whisper] raise better errors by @ArthurZucker in #27971

- Fix PatchTSMixer slow tests by @ajati in #27997

- [CI slow] Fix expected values by @ArthurZucker in #27999

- Fix bug with rotating checkpoints by @muellerzr in #28009

- [Doc] Spanish translation of glossary.md by @aaronjimv in #27958

- Add model_docs from cpmant.md to derformable_detr.md by @rajveer43 in #27884

- well well well by @ArthurZucker in #28011

- [SeamlessM4TTokenizer] Safe import by @ArthurZucker in #28026

- [core/modeling] Fix training bug with PEFT + GC by @younesbelkada in #28031

- Fix AMD push CI not triggered by @ydshieh in #28029

- SeamlessM4T: test_retain_grad_hidden_states_attentions is flaky by @gante in #28035

- Fix languages covered by M4Tv2 by @ylacombe in #28019

- Fixed spelling error in T5 tokenizer warning message (s/thouroughly/t… by @jeddobson in #28014

- Generate: Mistral/Mixtral FA2 cache fix when going beyond the context window by @gante in #28037

- [Seamless] Fix links in docs by @sanchit-gandhi in #27905

- Remove warning when Annotion enum is created by @amyeroberts in #28048

- [FA-2] Fix fa-2 issue when passing config to from_pretrained by @younesbelkada in #28043

- [Modeling/Mixtral] Fix GC + PEFT issues with Mixtral by @younesbelkada in #28061

- [Flax BERT] Update deprecated 'split' method by @sanchit-gandhi in #28012

- [Flax LLaMA] Fix attn dropout by @sanchit-gandhi in #28059

- Remove SpeechT5 deprecated argument by @ylacombe in #28062

- doc: Correct spelling mistake by @caiyili in #28064

- [Mixtral] update conversion script to reflect new changes by @younesbelkada in #28068

- Skip M4T test_retain_grad_hidden_states_attentions by @ylacombe in #28060

- [LLaVa] Add past_key_values to _skip_keys_device_placement to fix multi-GPU dispatch by @aismlv in #28051

- Make GPT2 traceable in meta state by @kwen2501 in #28054

- Fix bug for checkpoint saving on multi node training setting by @dumpmemory in #28078

- Update fixtures-image-utils by @lhoestq in #28080

- Fix low_cpu_mem_usage Flag Conflict with DeepSpeed Zero 3 in from_pretrained for Models with keep_in_fp32_modules" by @kotarotanahashi in #27762

- Fix wrong examples in llava usage. by @Lyken17 in #28020

- [docs] Trainer by @stevhliu in #27986

- [docs] MPS by @stevhliu in #28016

- fix resuming from ckpt when using FSDP with FULL_STATE_DICT by @pacman100 in #27891

- Fix the deprecation warning of _torch_pytree._register_pytree_node by @cyyever in #27803

- Spelling correction by @saeneas in #28110

- in peft finetune, only the trainable parameters need to be saved by @sywangyi in #27825

- fix ConversationalPipeline docstring by @not-lain in #28091

- Disable jitter noise during evaluation in SwitchTransformers by @DaizeDong in #28077

- Remove warning if DISABLE_TELEMETRY is used by @Wauplin in #28113

- Fix indentation error - semantic_segmentation.md by @rajveer43 in #28117

- [docs] General doc fixes by @stevhliu in #28087

- Fix a typo in tokenizer documentation by @mssalvatore in #28118

- [Doc] Fix token link in What 🤗 Transformers can do by @aaronjimv in #28123

- When save a model on TPU, make a copy to be moved to CPU by @qihqi in #27993

- Update split string in doctest to reflect #28087 by @amyeroberts in #28135

- [Mixtral] Fix loss + nits by @ArthurZucker in #28115

- Update modeling_utils.py by @mzelling in #28127

- [docs] Fix mistral link in mixtral.md by @aaronjimv in #28143

- Remove deprecated CPU dockerfiles by @ashahba in #28149

- Fix FA2 integration by @pacman100 in #28142

- [gpt-neox] Add attention_bias config to support model trained without attention biases by @dalgarak in #28126

- move code to Trainer.evaluate to enable use of that function with multiple datasets by @peter-sk in #27844

- Fix weights not properly initialized due to shape mismatch by @ydshieh in #28122

- Avoid unnecessary warnings when loading CLIPConfig by @ydshieh in #28108

- Update FA2 exception msg to point to hub discussions by @amyeroberts in #28161

- Align backbone stage selection with out_indices & out_features by @amyeroberts in #27606

- [docs] Trainer docs by @stevhliu in #28145

- Fix yolos resizing by @amyeroberts in #27663

- disable test_retain_grad_hidden_states_attentions on SeamlessM4TModelWithTextInputTest by @dwyatte in #28169

- Fix input_embeds docstring in encoder-decoder architectures by @gante in #28168

- [Whisper] Use torch for stft if available by @sanchit-gandhi in #26119

- Fix slow backbone tests - out_indices must match stage name ordering by @amyeroberts in #28186

- Update YOLOS slow test values by @amyeroberts in #28187

- Update docs/source/en/perf_infer_gpu_one.md by @ydshieh in #28198

- Fix ONNX export for causal LM sequence classifiers by removing reverse indexing by @dwyatte in #28144

- Add Swinv2 backbone by @NielsRogge in #27742

- Fix: [SeamlessM4T - S2TT] Bug in batch loading of audio in torch.Tensor format in the SeamlessM4TFeatureExtractor class by @nicholasneo78 in #27914

- Bug: training_args.py fix missing import with accelerate with version accelerate==0.20.1 by @michaelfeil in #28171

- Fix the check of models supporting FA/SDPA not run by @ydshieh in #28202

- Drop feature_extractor_type when loading an image processor file by @ydshieh in #28195

- [Whisper] Fix word-level timestamps with bs>1 or num_beams>1 by @ylacombe in #28114

- Fixing visualization code for object detection to support both types of bounding box. by @Anindyadeep in #27842

- update the logger message with accordant weights_file_name by @izyForever in #28181

- [Llava] Fix llava index errors by @younesbelkada in #28032

- fix FA2 when using quantization by @pacman100 in #28203

- small typo by @stas00 in #28229

- Update docs around mixing hf scheduler with deepspeed optimizer by @dwyatte in #28223

- Fix trainer saving safetensors: metadata is None by @hiyouga in #28219

- fix bug:divide by zero in _maybe_log_save_evaluate() by @frankenliu in #28251

- [Whisper] Fix errors with MPS backend introduced by new code on word-level timestamps computation by @ercaronte in #28288

- Remove fast tokenization warning in Data Collators by @dbuos in #28213

- fix documentation for zero_shot_object_detection by @not-lain in #28267

- Remove token_type_ids from model_input_names (like #24788) by @Apsod in #28325

- Translate contributing.md into Chinese by @Mayfsz in #28243

- [docs] Sort es/toctree.yml | Translate performance.md by @aaronjimv in #28262

- Fix error in M4T feature extractor by @ylacombe in #28340

- README: install transformers from conda-forge channel by @kevherro in #28313

- Don't check the device when device_map=auto by @yuanwu2017 in #28351

- Fix pos_mask application and update tests accordingly by @ferjorosa in #27892

- fix FA2 when using quantization for remaining models by @susnato in #28341

- Update VITS modeling to enable ONNX export by @echarlaix in #28141

- chore: Fix typo s/exclusivelly/exclusively/ by @hugo-syn in #28361

- Enhancing Code Readability and Maintainability with Simplified Activation Function Selection. by @hi-sushanta in #28349

- Fix building alibi tensor when num_heads is not a power of 2 by @abuelnasr0 in #28380

- remove two deprecated function by @statelesshz in #28220

- Bugfix / ffmpeg input device (mic) not working on Windows by @Teapack1 in #27051

- [AttentionMaskConverter] fix sdpa unmask unattended by @zspo in #28369

- Remove shell=True from subprocess.Popen to Mitigate Security Risk by @avimanyu786 in #28299

- Add segmentation map processing to SAM Image Processor by @rwood-97 in #27463

- update warning for image processor loading by @ydshieh in #28209

- Fix initialization for missing parameters in from_pretrained under ZeRO-3 by @XuehaiPan in #28245

- Fix _merge_input_ids_with_image_features for llava model by @VictorSanh in #28333

- Use mmap option to load_state_dict by @weimingzha0 in #28331

- [BUG] BarkEosPrioritizerLogitsProcessor eos_token_id use list, tensor size mismatch by @inkinworld in #28201

- Skip now failing test in the Trainer tests by @muellerzr in #28421

- Support DeepSpeed when using auto find batch size by @muellerzr in #28088

- Fix number of models in README.md by @prasatee in #28430

- CI: limit natten version by @gante in #28432

- Fix for checkpoint rename race condition by @tblattner in #28364

- Fix load correct tokenizer in Mixtral model documentation by @JuanFKurucz in #28437

- [docstring] Fix docstring for ErnieConfig, ErnieMConfig by @Sparty in #27029

- [Whisper] Fix slow test by @patrickvonplaten in #28407

- Assitant model may on a different device by @jiqing-feng in #27995

- Enable multi-label image classification in pipeline by @amyeroberts in #28433

- Optimize the speed of the truncate_sequences function. by @ikkvix in #28263

- Use python 3.10 for docbuild by @ydshieh in #28399

- Fix docker file by @ydshieh in #28452

- Set cache_dir for evaluate.load() in example scripts by @aphedges in #28422

- Optionally preprocess segmentation maps for MobileViT by @harisankar95 in #28420

- Correctly resolve trust_remote_code=None for AutoTokenizer by @Rocketknight1 in #28419

- Fix load balancing loss func for mixtral by @liangxuZhang in #28256

- Doc by @jiqing-feng in #28431

- Fix docstring checker issues with PIL enums by @Rocketknight1 in #28450

- Fix broken link on page by @keenranger in #28451

- Mark two logger tests as flaky by @amyeroberts in #28458

- Update metadata loading for oneformer by @amyeroberts in #28398

- Fix torch.ones usage in xlnet by @sungho-ham in #28471

- Generate: deprecate old public functions by @gante in #28478

- Docs: add model paths by @gante in #28475

- Generate: refuse to save bad generation config files by @gante in #28477

- TF: purge TFTrainer by @gante in #28483

- Fix docstrings and update docstring checker error message by @Rocketknight1 in #28460

- Change progress logging to once across all nodes by @siddartha-RE in #28373